User:Andre Castro/2.1/research-experiments-log: Difference between revisions


=Experiment's Log=

<div style="background-color:#FF3366;">

==Sharing my digital library==

===Steps===

# put my Calibre ebook library on a machine that is online for 24h
# start the Calibre content server
 $ calibre-server
which points to my Calibre library and uses machine_ip:8080
# check the library remotely

Problem!! The Calibre library is only accessible from the LAN where I am. http://www.mobileread.com/forums/showthread.php?t=160387

* in order to access the library remotely I have to have Calibre installed on a server.
* a Calibre server on a LAN becomes a bit redundant. It is perhaps handy for getting books onto ereaders and exchanging them with people nearby (on the same LAN), but it is not yet a strong strategy for sharing books with someone in another part of the world.

</div>

<div style="background-color:#FF3366;">

==Opening and modifying an epub==

Too much talk about ebooks without actually looking at the insides of one. That is what I will do now: open one and create a new one from a section of the original.

* choose a book: [http://www.gutenberg.org/ebooks/4018 Japanese Fairy Tales by Yei Theodora Ozaki]

===dissecting the epub===

unzip the epub: there are 2 directories (plus the mimetype file):

A) 4018/ - content dir<br/>
A.1) html, css - html content and style<br/>
A.2) '''content.opf''' - Open Packaging Format metadata file (can be called anything, but ''content.opf'' is the convention) - specifies the location of all the contents of the book + the metadata (in xml)<br/>
A.2.1) ''metadata'': required terms: title and identifier (the identifier must be a unique value, although it is up to the digital book creator to define that unique value); the OPF 2.0 specification also requires language<br/>
A.2.2) ''manifest'': all the content files that are part of the book<br/>
A.2.3) ''spine'': indicates the order in which the files appear in the ebook; extraneous material (like beginning and end matter) is not part of that linear order<br/>
A.2.4) ''guide'': (not required) explains what each section means<br/>
A.3) '''toc.ncx''' - the NCX defines the table of contents, but also metadata (overlaps with content.opf)<br/>
A.3.1) ''metadata'' - requires:<br/>
** uid: unique ID for the digital book. Should match the dc:identifier in the OPF file.
** depth: the number of levels in the table of contents hierarchy
** totalPageCount and maxPageNumber: only apply to paper books and can be left at 0.
A.3.2) ''navMap'': contains the navPoints<br/>
A.3.2.1) ''navPoint'':<br/>
** playOrder - reading order (same as the itemref elements in the OPF spine).
** navLabel/text - describes the title of this book section, a chapter title or number
** content - its src attribute points to a content file (a file declared in the OPF manifest). It can also point to anchors within the XHTML, eg: content.html#footnote1.
A.4) and the cover<br/>
B) '''META-INF/container.xml''' (pointing to content.opf) - EPUB reading systems will look for this file first, as it points to the location of the metadata for the digital book.<br/>
B.2) META-INF can also contain files such as digital signatures, encryption, and DRM<br/>
C) '''./mimetype''' - file containing 'application/epub+zip'<br/>
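The lookup chain described above (container.xml points to the .opf, the manifest declares the files, the spine orders them) can be followed with a few lines of Python. This is only a minimal sketch using the standard library; the function name <code>reading_order</code> is my own, and it assumes an EPUB 2 style package like the Gutenberg one.

```python
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by container.xml and by the OPF package file
CONTAINER_NS = {"c": "urn:oasis:names:tc:opendocument:xmlns:container"}
OPF_NS = {"opf": "http://www.idpf.org/2007/opf"}


def reading_order(epub):
    """Return (opf_path, [hrefs in spine order]) for an epub path or file object."""
    with zipfile.ZipFile(epub) as z:
        # B) META-INF/container.xml points to the OPF file
        container = ET.fromstring(z.read("META-INF/container.xml"))
        opf_path = container.find(".//c:rootfile", CONTAINER_NS).get("full-path")
        opf = ET.fromstring(z.read(opf_path))
        # A.2.2) manifest: id -> href for every declared file
        hrefs = {item.get("id"): item.get("href")
                 for item in opf.findall("opf:manifest/opf:item", OPF_NS)}
        # A.2.3) spine: the itemrefs give the linear reading order
        order = [hrefs[ref.get("idref")]
                 for ref in opf.findall("opf:spine/opf:itemref", OPF_NS)]
    return opf_path, order
```

Calling <code>reading_order("my-book.epub")</code> should give back the path of the .opf and the content files in the order a reader would show them, which is a quick way to check a hand-written spine.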

===packaging the epub into an epub+zip file===

* create the new ZIP archive and add the mimetype file (no compression)
 $ zip -0Xq my-book.epub mimetype
* add the remaining items
 $ zip -Xr9Dq my-book.epub *
-X and -D minimize extraneous information in the .zip file (no extra file attributes, no directory entries); -r adds files from dirs recursively; -9 selects the best compression; -q keeps zip quiet.
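The same two-step packaging can be sketched in Python with the standard <code>zipfile</code> module. This is an approximation of the zip commands above, not a replacement for them; the function name <code>package_epub</code> is hypothetical, and it assumes the output file is written outside the source directory.

```python
import os
import zipfile


def package_epub(src_dir, epub_path):
    """Zip src_dir into epub_path, with the mimetype file first and uncompressed."""
    with zipfile.ZipFile(epub_path, "w") as z:
        # like zip -0: mimetype is stored (no compression) as the first entry
        z.write(os.path.join(src_dir, "mimetype"), "mimetype",
                compress_type=zipfile.ZIP_STORED)
        # like zip -r -D: add the remaining files, compressed, without directory entries
        for root, dirs, files in os.walk(src_dir):
            dirs.sort()
            for name in sorted(files):
                full = os.path.join(root, name)
                arcname = os.path.relpath(full, src_dir).replace(os.sep, "/")
                if arcname != "mimetype":
                    z.write(full, arcname, compress_type=zipfile.ZIP_DEFLATED)
```

Usage would be something like <code>package_epub("book-dir", "my-book.epub")</code>, where book-dir holds mimetype, META-INF/ and the content dir.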

===conclusion===

* in the epub the lines of text overlap heavily, maybe because I didn't add a font size to the css; (text-height:200%; solved it)
* however, on a Kindle it looks fine. Why? Because the Kindle imposes its own font on the text - 'If you are publishing to Kindle it forces your font into it's own custom font so it doesn't matter'. This shows how closed the device is.
* even though the process of creating an epub and its structure are straightforward, writing the .opf and .ncx without any automated process is a pain (but important for understanding the epub's structure) and may easily lead to errors.
* it also does not seem the easiest format to experiment with. The need to declare all the files used and the metadata, as well as the packaging, makes one think twice before trying something out.

[[Image:My_Lord_Bag_of_Rice.png|400px]]

===resources===

http://www.ibm.com/developerworks/xml/tutorials/x-epubtut/index.html

</div>

<div style="background-color:#FF3366;">

==epubs - Sigil==

I made a few attempts to work with Sigil to help me with the Spam book project; however, it is far from ideal. It adds excessive markup by itself and makes the whole business quite messy, especially when working with large amounts of text, where automated processes are very handy.

What I will try to do today is divide the creation of an epub into the following stages.

# Have all the content in plain text with mediawiki notation - mostly headings, bold, and italics
# Use a parser to generate html from the plain text file
# Use calibre to convert the html to epub.

* Question: What is the fundamental difference between an ebook and a website? Can't a website, mostly made of text and re-flowable, be an ebook? With perhaps even more potential, and easier to experiment with?
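The parser stage of the plan above (plain text in mediawiki notation to html) could start as small as this. It is a rough sketch of my own, not an existing mediawiki parser: it only knows headings, bold and italics, and it assumes that headings and paragraphs are separated by blank lines.

```python
import html
import re


def wiki_to_html(text):
    """Convert headings, bold and italics in mediawiki notation to html."""
    out = []
    # blocks (headings or paragraphs) are separated by blank lines
    for block in re.split(r"\n\s*\n", text.strip()):
        block = html.escape(block.strip(), quote=False)
        # '''bold''' must be handled before ''italics''
        block = re.sub(r"'''(.+?)'''", r"<b>\1</b>", block)
        block = re.sub(r"''(.+?)''", r"<i>\1</i>", block)
        # ==Title== -> <h2>Title</h2>; the number of = signs gives the level
        heading = re.fullmatch(r"(={1,6})\s*(.+?)\s*\1", block)
        if heading:
            level = len(heading.group(1))
            out.append("<h%d>%s</h%d>" % (level, heading.group(2), level))
        else:
            out.append("<p>%s</p>" % block)
    return "\n".join(out)
```

The resulting html file can then be handed to calibre's ebook-convert for the last stage.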

</div>

<div style="background-color:#FF3366;">

==Character Encoding of an image-pdf==

I wanted to get hold of Licklider's ''Libraries of the Future''. The only online copy I found was a [http://openlibrary.org/books/OL5942946M/Libraries_of_the_future pdf] made up of scanned images. I thought this could be a good opportunity to get some hands-on experience with the character recognition process.

NOTE: PDF MUST BE CONVERTED TO IMAGE AT 300DPI!!!

====Steps====

* extract separate images out of a pdf
* convert the output image files to TIFF (single-bit, uncompressed) so that tesseract (ocr software) can read them.
** using imagemagick
 convert -monochrome -density 600 source.pdf page.tif
-density refers to the resolution of the scanned image (the higher the better), here 600dpi
** using gimp (as documented in http://alexsleat.co.uk/2010/04/12/howto-simple-tesseract-usage-guide-ocr/ )
* converted the 2 images into text files using tesseract
 tesseract image.tif text
Outputs the result in .txt format

===Results=== | |||

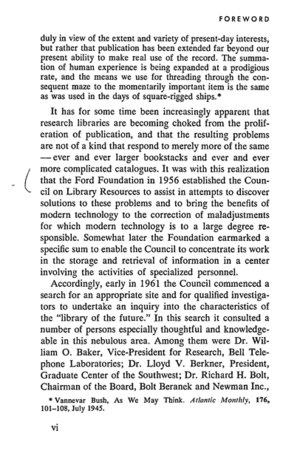

** gimp tiff: resulting text and image | |||

[[File:Page10-g-1.png|thumb|center]] | |||

<pre> | |||

but rather that publication has been extended far beyond our | |||

present ability to make real use of the record. The summa- | |||

tion of human experience is being expanded at a prodigious | |||

rate, and the means we use for threading through the con- | |||

sequent maze to the momentarily important item is the same | |||

as was used in the days of square-rigged ships.* | |||

</pre> | |||

** imagemagick tiff: resulting text and image | |||

[[File:Page-imgck.png|thumb|center]] | |||

<pre> | |||

§;;iuEy ix’: af ihszfé aiaximiai gzimi *»;<~°2:::ii?s,:s§;§-°‘ iéf §§§‘:‘T.°:f:3é‘;‘;i%E3§-°-£§3:3;}I imafiriégis, | |||

bait m1:E:::::* that Apwiiaaiim has bi?-iii} §:E;}i§:€i3fl§€3-ii fa: b€::«,.«~’§;3m?§ mu: | |||

p=:°‘@$e:m sgibifiiiy Ea mam ma} we Sf that rzaaazaig ’E’ha samzmaw | |||

timz Qf Emmazfa m:§§:r§V€.%:1a$ £3 baffsizzzg $.I><Z§&§‘fid€€§ at :5: pradigiaaza | |||

mm, and tha;: X?JE€;?3£:iE.§S ass: far fifamadizfag ifiarmzgfiz ‘mg: mam | |||

wqumii mam‘: ix} th.s::‘r 3:m‘m'2e:,*:12.€z21°§§:; impamgazzt itazm is that: Sams | |||

:13 wzag Lzsmi in am: days mi Szzgzarawrigggad giaipgfi‘ | |||

</pre> | |||

===Conclusion===

In order to have the whole book translated into text I will have to fine-tune the imagemagick conversion to TIFF, so that tesseract does a better job at character recognition.

Just to see how tesseract would react to a scanned TIFF, I scanned a printed text on a flatbed scanner at 600dpi directly to a TIFF file. The result was very good.

Here are both the scan and the text:

[[File:Teste-page001.png|thumb|center]]

<pre>
Introduction
In the preface to A Contribution to the Critique of Political Economy, Marx argues that, ‘at a
certain stage of development, the material productive forces of society come in conflict with
the existing relations of production’? What is possible in the information age is in direct con-
flict with what is permissible. Publishers, film producers and the telecommunication indus-
try conspire with lawmakers to bottle up and sabotage free networks, to forbid information
from circulating outside of their control. The corporations in the recording industry attempt
to forcibly maintain their position as mediators between artists and fans, as fans and artists
merge closer together and explore new ways of interacting.
</pre>

</div>

<div style="background-color:#FF3366;">

==Streamline - character Encoding of an image pdf==

Yesterday I was making a crucial mistake, and that is why I was getting so many encoding errors. I was converting my pdf to tifs at 100dpi, creating a very low-res document, which made it hard for the ocr software to recognize the characters.

So now I have to:

* separate the entire pdf into separate pdf files
 pdftk Licklider.pdf burst
* remove irrelevant pages (from beginning and end)
* convert each individual pdf to gray(? does it need the gray) 300dpi tifs
 convert -units pixelsperinch -density 300x300 -colorspace Gray -depth 8 Licklider_10.pdf Licklider_10-gray-8.tif
 # this will take a while
 for i in pg*.pdf; do convert -units pixelsperinch -density 300x300 -colorspace Gray -depth 8 "$i" "`basename "$i" .pdf`.tif"; done
* convert tif to monochrome
 convert Licklider_10-gray-8.tif +dither -monochrome -normalize Licklider_10-gray-8-MONO.tif
 for i in pg*.tif; do convert "$i" +dither -monochrome -normalize "`basename "$i" .tif`-m.tif"; done
* ocr the image using tesseract
 tesseract Licklider_10-gray-8-MONO.tif Licklider_10-gray-MONO
 for i in *-m.tif; do tesseract "$i" "`basename "$i" .tif`"; done
* cat all txts into one
 cat *.txt > full_text
* Result: [[File:Licklider-LIBRARIES_OF_THE_FUTURE.txt]]

<pre>
Foreword
THIS REPORT of research on concepts and problems of
“Libraries of the Future” records the result of a two-year
inquiry into the applicability of some of the newer tech-
niques for handling information to what goes at present
by the name of library work — i.e., the operations con-
nected with assembling information in recorded form and
of organizing and making it available for use.
Mankind has been complaining about the quantity of
reading matter and the scarcity of time for reading it at
least since the days of Leviticus, and in our own day
these complaints have become increasingly numerous and
shrill. But as Vannevar Bush pointed out in the article
that may be said to have opened the current campaign
on the “information problem,”
The diﬁiculty seems to be, not so much that we publish un-
V
</pre>
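The per-page commands above (gray tif, monochrome tif, tesseract) could also be driven from one Python script instead of three shell loops. This is only a sketch: the helper names are mine, it assumes the burst pages are named like pg_0010.pdf, and <code>ocr_page</code> needs ImageMagick and tesseract installed.

```python
import subprocess
from pathlib import Path


def ocr_commands(page_pdf, density=300):
    """Return the three command lines used above for one burst page."""
    page = Path(page_pdf)
    gray = page.with_suffix(".tif")                # pg_0010.tif
    mono = page.with_name(page.stem + "-m.tif")    # pg_0010-m.tif
    text_base = page.with_name(page.stem + "-m")   # tesseract appends .txt itself
    return [
        ["convert", "-units", "pixelsperinch",
         "-density", "%dx%d" % (density, density),
         "-colorspace", "Gray", "-depth", "8", str(page), str(gray)],
        ["convert", str(gray), "+dither", "-monochrome", "-normalize", str(mono)],
        ["tesseract", str(mono), str(text_base)],
    ]


def ocr_page(page_pdf):
    """Run the commands for one page, stopping at the first one that fails."""
    for cmd in ocr_commands(page_pdf):
        subprocess.run(cmd, check=True)
```

Looping <code>ocr_page</code> over <code>sorted(Path(".").glob("pg*.pdf"))</code> would process the whole book and stop at the first page where a tool reports an error.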

===Conclusion===

Although it took a bit of tweaking to get the page images ready for recognition, the ocr worked well, with only a few glitches in the text that need to be emended. A nice way to do this proofreading would be to edit the text while reading it on an ereader.

Besides correcting the scanning errors, there are a few things that need to be done to the .txt before translating it into other formats.

</div>

<div style="background-color:#FF3366;">

==Preparing the text resulting from ocr==

This process depends on the book we are dealing with, so it will be hard to generalize.

* <strike>line breaks: [ebook-convert can handle \n ]
They are present throughout the book (including hyphenated line breaks) and need to be removed.
<source lang="python">
import os
import re

# process every OCR page file in the current directory
for name in os.listdir("."):
    if name.endswith("-m.txt"):
        print(name)
        with open(name, "r") as text_file:
            text = text_file.read()
        # -\n : hyphen followed by new line (a word split across two lines)
        text = re.sub(r"-\n", "", text)
        # just line breaks
        text = re.sub(r"\n", " ", text)
        # pg_0010-m.txt -> pg_0010-m_sub.txt
        sub_name = name.split(".")[0] + "_sub.txt"
        with open(sub_name, "w") as sub_file:
            sub_file.write(text)
</source>
</strike>

* page numbers - remove '''[Must be done]'''
* Chapter name at the start of every page - remove '''[Must be done]'''
* images:
In this book the pictures are followed by "Fig". This allows us to locate the files and the places where the pictures were.

After identifying them, I will remove the gibberish the ocr has put in their place.

Later on I will add them to the epub in their correct location.
<source lang="bash">
#!/
# file to store the location of the images
touch fig-list.txt
echo "" > fig-list.txt

# search and add the locations
for i in txt-pgs/*-m.txt # uses the old files with line breaks, the output is more convenient
do
    if grep --quiet 'Fig' "$i"; then
        echo "$i" >> fig-list.txt
        grep 'Fig' "$i" >> fig-list.txt
        echo '-------******------******-------' >> fig-list.txt
    fi
done
</source>

* cat all the files
Damn! Just realized that I should have kept the line breaks in the Bibliography and Index.
* Quick typo search: I think a word processor is probably the best choice for finding scannos
* Convert the txt into an epub
 ebook-convert cat-book.txt output.epub
For some reason ebook-convert is struggling with this task. If I ask the same for the text with the line breaks, it does it without a problem. So there seems to be no need to remove the line breaks. It would be great not to have the chapter heading on every page!

[[File:Licklider-15Oct.pdf]]
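The two steps marked '''[Must be done]''' above (page numbers and the chapter name on every page) could be handled page by page, while the files still have their line breaks. This is a crude sketch with a hypothetical helper <code>clean_page</code>: it treats any line that holds only digits or a short roman numeral as a page number, and the running headers have to be passed in by hand, so the result still needs a proofreading pass.

```python
import re


def clean_page(text, running_headers=()):
    """Drop page-number lines and running headers from one OCR'd page."""
    headers = {h.strip().lower() for h in running_headers}
    kept = []
    for line in text.split("\n"):
        stripped = line.strip()
        # a line holding only digits or a roman numeral is taken for a page number
        if re.fullmatch(r"\d{1,4}|[ivxl]+", stripped, re.IGNORECASE):
            continue
        # a line equal to a known chapter/book title is taken for a running header
        if stripped.lower() in headers:
            continue
        kept.append(line)
    return "\n".join(kept)
```

For example, <code>clean_page(text, ["Libraries of the Future"])</code> would drop a page's heading line and its page number and leave the rest of the lines as they are.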

</div>

<div style="background-color:#FF3366;">

==Reflecting on the work of audiences==

The previous experiments turned out to be way more time-consuming than I had foreseen. It makes me think about our work as audience, our voluntarism, our engagement with unpaid work. Why do we do it? In order to be recognized within the community? Do we see the benefits of others' voluntary work and want to give something in return?

How could research on this topic relate to what is currently happening with several book digitization initiatives, where a great number of individuals scan, encode, proof-read, design and distribute e-books?

Are these reactions to Google Books? Do they emerge from a will to share and disseminate knowledge? Or are they ways to become part of a community?

Reading List:<br/>
Hardt and Negri ''Commonwealth''<br />
Andrejevic ''The Work of Being Watched''<br />

</div>

<div style="background-color:#FF3366;">

==Spam emails==

As it is hard to experiment with books when one doesn't have the main ingredient, texts, I decided to look for them:

<source lang="email">
-------- Original Message --------
Subject: Contribute with spam emails
Date: Fri, 05 Oct 2012 18:36:49 +0200
From: andre castro <andrecastro83@gmail.com>
To: undisclosed-recipients:;

Hia,

I am emailing to ask you for a favor.

Do you happen to have received sometime recently spam email, in a quite
personal tone and addressing you? Do think they might be somewhere in
you inbox or spam?

If you do so, or receive one in the next few day, I'd like to ask you,
you to forward them to me as I am putting together a compilation of
those particular spam emails. They can be in any language

Thank you
Best
a
</source>

The replies didn't take long to arrive.

</div>

<div style="background-color:#FF3366;"> | |||

==Reading spam== | |||

The email asking for spam email and its replies got me thinking of what happens to spam email when put under a different context than its original one, perhaps a more bookish form. Do we read them with different eye and pay attention to details that we have previously despised and begin to find some value to them? | |||

I at least do, and find quite compelling to look as these considered minor for of (written) expression, but where also a lot of creativity, humor and invention appears to be put in place, often not for the most correct reasons. | |||

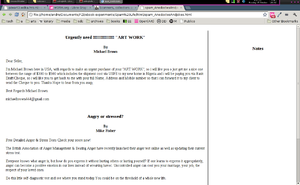

[[File:Spam_Anedoctes.png|thumb]] | |||

View List: | |||

Alessandro Ludovico talk on spam http://www.youtube.com/watch?v=BUNXRWXF-NA | |||

</div> | |||

<div style="background-color:#FF3366;">

==An ongoing book==

Let's say I want to go forward with the idea, mentioned in the previous post, of recontextualizing spam in a book format. It could be quite some fun to place a book consisting only of spam on the Kindle shop or Lulu, and see if anyone actually buys it. Although I would learn from this process, it seems rather premature and would not exploit much of the potential this material might have.

This can be good source material for working on a web-book hybrid. The project could take the form of a website where the book-website is in constant transformation, but where 'snapshots' of it can also be taken at any moment.

===ideas for the project===

====contributions====

* content contributions from anyone: new spam emails could be submitted and included in the project's contents
* notes on the text: anyone could make notes on specific parts of the text, which would become part of the project's notes. Eg: a user can realize that part of a spam email quotes the Bible and note that down
* search on selected portions of the text: using google search, wikipedia, wiktionary.

====snapshots====

* at any moment the project content could be exported into an epub or pdf.
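To make the contributions and snapshots ideas above a bit more concrete, here is a sketch of a possible data model. Nothing of this exists yet: all class and field names are hypothetical, notes are anchored to the text by character offsets, and the snapshot is plain text in mediawiki notation that could later be converted to epub or pdf.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    start: int    # character offsets into the email body
    end: int
    comment: str


@dataclass
class SpamEmail:
    subject: str
    body: str
    notes: list = field(default_factory=list)

    def annotate(self, start, end, comment):
        """Attach a reader's note to body[start:end]."""
        self.notes.append(Note(start, end, comment))


@dataclass
class Book:
    emails: list = field(default_factory=list)

    def snapshot(self):
        """Freeze the current contents as plain text in mediawiki notation."""
        parts = []
        for mail in self.emails:
            parts.append("==%s==" % mail.subject)
            parts.append(mail.body)
            for n in mail.notes:
                quoted = mail.body[n.start:n.end]
                parts.append('* note on "%s": %s' % (quoted, n.comment))
        return "\n\n".join(parts)
```

The book itself stays open to new emails and notes, while each call to <code>snapshot()</code> gives a fixed text that can be archived or exported.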

</div>

<div style="background-color:#FF3366;">

==An ongoing book: questions==

How is it different from a project like Booki?

It seems to me that Booki's technical structure works well; however, both its contents and its forms seem not that interesting. Perhaps the process through which one contributes still carries too much weight and too many obstacles, and therefore there isn't much circulation of ideas or a feeling of community around it.

Wikipedia seems to take that weight away from contributors. They are not forced to write entire articles, but only little pieces of information, like pieces of a puzzle. Perhaps that's why it is so successful in attracting contributors.

Wikipedia articles are also a space for frequent debate.

Wikipedia allows articles to be exported as pdf or assembled into a paper book which one can buy.

The difference between the project I am suggesting and these two examples obviously doesn't lie in technical innovations, but it could lie in the content, the form, and the processes involved in these aspects.

It does not try to be a functional service for writing books or constructing encyclopedic knowledge. Rather, it will try to look at the potential and specificities of '''marginal material'''. Its form will try to call renewed attention to this material, but also to reflect the activity around each text, such as notes and discussions.

Read: discussion of M.Fuller w/ Sean Dockray (aaaaaaarg); they refer to the importance of community in wikipedia at some point.

</div>

<div style="background-color:#FF3366;">

==a prototype of the spam book==

Here is a quick and dirty prototype of some of the ideas described in the 2 previous posts:

http://pzwart3.wdka.hro.nl/~acastro/spamlife/spam_page.html

</div>

Latest revision as of 09:22, 16 October 2012

Experiment's Log

Sharing my digital library

Steps

- put my calibre ebook library on machine online for 24h

- start calibre content server

$ calibre-server

which point to my Calibre-library, and uses port machine_ip:8080

- check library remotely

Problem!! Calibre library is only accessible on the local LAN where I am at. http://www.mobileread.com/forums/showthread.php?t=160387

- in order to access the library remotely I have to have calibre installed on a server.

- calibre server on a LAN becomes a bit redundant, perhaps is handy for grabbing the books to ereaders and exchange them with people that are near one (on the same LAN), but one cannot say is yet a strong strategy for sharing books with someone in another part of the world.

Opening and modifying and epub

Too much talk about ebooks, witout actually looking at the insides of one. That's what I will do, will and create a new one with a section of the original.

- choose a book: Japanese Fairy Tales by Yei Theodora Ozaki

dissecting the epub

unzip the epub: there are 2 directories;

A) 4018/ - content dir

A.1) html ,css - html content and style

A.2) content.opf - Open Packaging Format metadata file (can be called anything, but content.opf is the convention) - specifies the location of all the contents in the book + the metadata (in xml)< br/>

A.2.1) metadata: required terms:title and identifier(the identifier must be a unique value, although it's up to the digital book creator to define that unique value)

A.2.2) manifest: all the content files par of the book

A.2.3) spine: indicates the order files they appear in the ebook - but not extraneous (like begging and end)

A.2.4) guide: (not required) explains what each section means

A.3) toc.ncx - The NCX defines the table of contents, but also metadata (overlaps w/ content.opf)

A.3.1) metadata- requires:

- uid: unique ID for the digital book. Should match the dc:identifier in the OPF file.

- depth: the level of the hierarchy in the table of contents

- totalPageCount and maxPageNumber: only to paper books and can be left at 0.

A.3.2) navMap: contains the navPoints

A.3.2.1) navPoint:

- playOrder - reading order. (same as itemref elements in the OPF spine).

- navLabel/text describes the title of this book section, a chapter title or number

- content src attribute points to content file. (a file declared in the OPF manifest). (can also point to anchors within XHTML eg: content.html#footnote1.)

A.4)and cover;

B) META-INF/container.xml (pointing to content.opf) - EPUB reading systems will look for this file first, as it points to the location of the metadata for the digital book.

B.2) META-INF can contain file such as digital signatures, encryption, and DRM

C) ./mimetype - file containing 'application/epub+zip'

packaging the epub into an epub+zip file

- create the new ZIP archive and add the mimetype file (no compression)

$ zip -0Xq my-book.epub mimetype

- add the remaining items

$ zip -Xr9Dq my-book.epub *

-X and -D minimize extraneous information in the .zip file; -r adds files from dirs 9? q?

conclusion

- in the epub the text overlaps heavily, maybe because I didn't add a font size to the css; (text-height:200%; solved it)

- however in a kindle looks fine. Why?because kindle is imposing its font on the text - 'If you are publishing to Kindle it forces your font into it's own custom font so it doesn't matter'. This show what close the device is.

- even tough the process of creating an epub and its structure are straight-forward, writing the .opf and .ncx without any automated process its a pain (but important to understand the epub's structure) and may easily lead to errors.

- also it seems not the easiest format to experiment with. The need to declare all the files used and the metadata as well as packaging, will make think twice before trying something out.

resources

http://www.ibm.com/developerworks/xml/tutorials/x-epubtut/index.html

epubs - Sigil

I made a few attempts to work with Sigil to help me work in Spam book project, however its far from ideal. I adds excessive markup content by itself, and makes the whole business quite messy, specially when working with large amount of text, when automated processes are very handy.

What I will try to do today is divide the creation of an epub in 4 stages.

- Having all the content in plain text with mediawiki notation - mostly headings, bold, and italics

- use a parse to generate an html from the plain text file

- Use calibre to convert the html to epub.

- Question: What is the fundamental difference between and ebook and a website? Can't a website, mostly made of text and re-flowable , be a ebook? With perhaps even more potential and easier to experiment with?

Character Encoding of a image-pdf

I wanted to get hold of Licklider Libraries of Future. The only online copy I found was a pdf constituted of scanned images. Thought this could be a good opportunity to get some hands on character recognition process.

NOTE: PDF MUST BE CONVERTED TO IMAGE AT 300PDI!!!

Steps

- extract separate images out of a pdf

- convert output images files to a TIFF (single-bit uncompressed) so the tesseract (ocr software) can read them.

- using imagemaick

convert -monochrome -density 600 source.pdf page.tif

-density refers to resolution of the scanned image (the higher the better) 600pdi

- using gimp (as documented in http://alexsleat.co.uk/2010/04/12/howto-simple-tesseract-usage-guide-ocr/ )

- converted the 2 images into a text file using tesseract

tesseract image.tif text

Outputs result in .txt format

Results

- gimp tiff: resulting text and image

but rather that publication has been extended far beyond our present ability to make real use of the record. The summa- tion of human experience is being expanded at a prodigious rate, and the means we use for threading through the con- sequent maze to the momentarily important item is the same as was used in the days of square-rigged ships.*

- imagemagick tiff: resulting text and image

§;;iuEy ix’: af ihszfé aiaximiai gzimi *»;<~°2:::ii?s,:s§;§-°‘ iéf §§§‘:‘T.°:f:3é‘;‘;i%E3§-°-£§3:3;}I imafiriégis, bait m1:E:::::* that Apwiiaaiim has bi?-iii} §:E;}i§:€i3fl§€3-ii fa: b€::«,.«~’§;3m?§ mu: p=:°‘@$e:m sgibifiiiy Ea mam ma} we Sf that rzaaazaig ’E’ha samzmaw timz Qf Emmazfa m:§§:r§V€.%:1a$ £3 baffsizzzg $.I><Z§&§‘fid€€§ at :5: pradigiaaza mm, and tha;: X?JE€;?3£:iE.§S ass: far fifamadizfag ifiarmzgfiz ‘mg: mam wqumii mam‘: ix} th.s::‘r 3:m‘m'2e:,*:12.€z21°§§:; impamgazzt itazm is that: Sams :13 wzag Lzsmi in am: days mi Szzgzarawrigggad giaipgfi‘

Conclusion

In order to be able to have the whole book translated into text I will have to fine-tune imagemagick conversion to TIFF, so that tesseract does a better job, in character recognition.

Just as to see how would tesseract would react to a scanned TIFF, I scanned a printed text on a table scanner at 600pdi directly to a TIFF file. The result was very good

Here is the both scan and text

Introduction In the preface to A Contribution to the Critique of Political Economy, Marx argues that, ‘at a certain stage of development, the material productive forces of society come in conflict with the existing relations of production’? What is possible in the information age is in direct con- flict with what is permissible. Publishers, film producers and the telecommunication indus- try conspire with lawmakers to bottle up and sabotage free networks, to forbid information from circulating outside of their control. The corporations in the recording industry attempt to forcibly maintain their position as mediators between artists and fans, as fans and artists merge closer together and explore new ways of interacting.

Streamline - character Encoding of a image pdf

Yesterday I was making a crucial mistake, and that's why I have been getting so many encoding errors. I was importing my pdf to tifs at 100dpi, creating a very low-res document, therefore hard for the ocr software to recognize the characters.

So now I have to:

- seperate the entire pdf into seperate pdf files

pdftk Licklider.pdf burst

- remove irrelevant pages (from beginning and end)

- convert each individual pdf to gray(? does it need the gray) 300dpi tifs

convert -units pixelsperinch -density 300x300 -colorspace Gray -depth 8 Licklider_10.pdf Licklider_10-gray-8.tif

for i in pg*.pdf;do convert -units pixelsperinch -density 300x300 -colorspace Gray -depth 8 $i "`basename $i .pdf`.tif";done // this will take a while now

- convert tif to monochrome

convert Licklider_10-gray-8.tif +dither -monochrome -normalize Licklider_10-gray-8-MONO.tif

for i in pg*.tif;do convert $i +dither -monochrome -normalize "`basename $i .tif`-m.tif";done

- ocr the image using tessaract

tesseract Licklider_10-gray-8-MONO.tif Licklider_10-gray-MONO

for i in *-m.tif;do tesseract $i "`basename $i .tif`"; done

- cat all txts into one

touch full_text; cat *.txt > full_text

Foreword THIS REPORT of research on concepts and problems of “Libraries of the Future†records the result of a two-year inquiry into the applicability of some of the newer tech- niques for handling information to what goes at present by the name of library work — i.e., the operations con- nected with assembling information in recorded form and of organizing and making it available for use. Mankind has been complaining about the quantity of reading matter and the scarcity of time for reading it at least since the days of Leviticus, and in our own day these complaints have become increasingly numerous and shrill. But as Vannevar Bush pointed out in the article that may be said to have opened the current campaign on the “information problem,†The diï¬iculty seems to be, not so much that we publish un- V

Conclusion

Although, after a bit of tweaking to get the book pages images processed for scaning, the ocr worked well, with only a few glitchs in the text, that need to be emended. A nice way to do this proofreading would be to edit the text while reading it on an ereader.

Besides scanning errors the correction there a few things that need to be done to the .txt before translating it into other formats

Preparing the text resulting from ocr

This process will dependent on the book we are dealing with, it will be hard to generalize.

line breaks: [ebook-convert can handle \n ]

They are present throughout the book (including hypenated line breakes) and need to be remove.

import os, re

# process every per-page OCR file in the current directory
for filename in os.listdir("."):
    if filename.endswith("-m.txt"):
        print(filename)
        with open(filename, 'r') as text_file:
            text = text_file.read()
        # -\n : hyphen followed by new line
        text = re.sub(r'-\n', '', text)
        # just line breaks
        text = re.sub(r'\n', ' ', text)
        # e.g. page-m.txt becomes page-m_sub.txt
        sub_name = filename.split('.')[0] + '_sub.txt'
        with open(sub_name, 'w') as sub_file:
            sub_file.write(text)
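
A variant of the script above, as a minimal sketch only. It wraps the two substitutions in a function and, as an assumption that is not part of the original script, treats blank lines as paragraph breaks and keeps them. The name `unwrap_text` is hypothetical.

```python
import re

def unwrap_text(text):
    """Join OCR lines into paragraphs (blank lines are assumed to separate paragraphs)."""
    # hyphen followed by a new line: glue the two halves of the word together
    text = re.sub(r'-\n', '', text)
    # a single line break inside a paragraph becomes a space;
    # runs of two or more line breaks are left untouched
    text = re.sub(r'(?<!\n)\n(?!\n)', ' ', text)
    return text

sample = "newer tech-\nniques for handling\ninformation\n\nSecond paragraph"
print(unwrap_text(sample))
```

Like the original, this also removes the hyphen of a genuine compound word that happens to fall at a line end, so the result still needs proofreading.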

- page numbers - remove [Must be done]

- Chapter name at the start of every page - remove [Must be done]

- images:

In this book the pictures are followed by "Fig". This allows us to locate the files and the places where the pictures were. After identifying them, I will remove the gibberish the OCR has put in their place. Later on I will add them to the epub in their correct location.

#!/

# file to store the location of the images
echo "" > fig-list.txt

# search and add the locations
# (will use the old files w/ line breaks, the output is more convenient)
for i in txt-pgs/*-m.txt
do
    if grep --quiet 'Fig' "$i"; then
        echo "$i" >> fig-list.txt
        grep 'Fig' "$i" >> fig-list.txt
        echo '-------******------******-------' >> fig-list.txt
    fi
done

- cat all the files

Damn! Just realized that I should have kept the line breaks in the Bibliography and Index.

- Quick typo search: I think a word processor is probably the best choice for finding scannos

- Convert the txt into an epub

ebook-convert cat-book.txt output.epub

For some reason ebook-convert struggles with this task. If I give it the text with the line breaks, it converts it without a problem. So there seems to be no need to remove the line breaks. It would be great not to have the chapter heading on every page!
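
The two steps still marked [Must be done], page numbers and the chapter name at the top of every page, could be sketched roughly as follows. This is only a sketch under assumptions: that the pages are still separate texts, that a page number sits alone on its own line as digits, and that the running header is the first non-empty line of a page. The function name and the `running_heads` set are hypothetical.

```python
import re

# Assumption: a page number sits alone on its own line (digits only).
PAGE_NUMBER = re.compile(r'^\s*\d+\s*$')

def strip_page_furniture(page_text, running_heads):
    """Drop page-number lines and a running header from one OCR'd page.

    running_heads: hypothetical set of upper-cased chapter titles that the
    book repeats at the top of its pages.
    """
    kept = []
    header_checked = False
    for line in page_text.split('\n'):
        if PAGE_NUMBER.match(line):
            continue  # page number: skip it
        if not header_checked and line.strip():
            header_checked = True  # first non-empty line of the page
            if line.strip().upper() in running_heads:
                continue  # running chapter header: skip it
        kept.append(line)
    return '\n'.join(kept)

page = "12\nFOREWORD\nthe operations con-\nnected with assembling\n"
print(strip_page_furniture(page, {"FOREWORD"}))
```

It would have to run on the per-page files before they are concatenated. Note that it also drops any body line consisting only of digits, so the output should be checked.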

Reflecting on the work of audiences

The previous experiments turned out to be far more time-consuming than I had foreseen. It makes me think about our work as an audience, our voluntarism, our engagement with unpaid work. Why do we do it? In order to be recognized within the community? Do we see the benefits of others' voluntary work and want to give something in return?

How could research on this topic relate to what is currently happening with several book digitization initiatives, where a great number of individuals scan, encode, proofread, design and distribute e-books?

Are these reactions to Google Books? Do they emerge from a will to share and disseminate knowledge? Or are they ways to become part of a community?

Reading List:

Hardt and Negri, Commonwealth

Andrejevic, The Work of Being Watched

Spam emails

As it is hard to experiment with books when one doesn't have the main ingredient, texts, I decided to look for them:

-------- Original Message --------

Subject: Contribute with spam emails

Date: Fri, 05 Oct 2012 18:36:49 +0200

From: andre castro <andrecastro83@gmail.com>

To: undisclosed-recipients:;

Hia,

I am emailing to ask you for a favor.

Do you happen to have received sometime recently spam email, in a quite

personal tone and addressing you? Do think they might be somewhere in

you inbox or spam?

If you do so, or receive one in the next few day, I'd like to ask you,

you to forward them to me as I am putting together a compilation of

those particular spam emails. They can be in any language

Thank you

Best

a

The replies didn't take long to arrive

Reading spam

The email asking for spam email, and its replies, got me thinking about what happens to spam when it is put in a context different from its original one, perhaps in a more bookish form. Do we read it with a different eye, pay attention to details that we previously despised, and begin to find some value in it?

I at least do, and find it quite compelling to look at these forms of (written) expression, which are considered minor but in which a lot of creativity, humor and invention appears to be invested, often not for the most correct reasons.

View List: Alessandro Ludovico talk on spam http://www.youtube.com/watch?v=BUNXRWXF-NA

An ongoing book

Let's say I want to go forward with the idea, mentioned in the previous post, of recontextualizing spam in a book format. It could be quite fun to place a book consisting only of spam on the Kindle store or Lulu, and see if anyone actually buys it. Although I would learn from this process, it seems rather premature, and it would not exploit much of the potential this material might have.

This can be good source material for a web-book hybrid. The project could take the form of a website where the book-website is in constant transformation, but where 'snapshots' of it can also be taken at any moment.

ideas for the project

contributions

- content contributions from anyone: new spam emails could be submitted and included into the project's contents

- notes on the text: anyone could make notes on specific parts of the text, which would become part of the project's notes. E.g., a user might notice that part of a spam email quotes the Bible and note that down

- search on selected portions of the text: using Google search, Wikipedia, Wiktionary.

snapshots

- at any moment the project content could be exported into an epub or pdf.
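
A rough sketch of what taking such a snapshot could look like, assuming the project's text is kept in one plain-text file and that calibre's `ebook-convert` (used earlier in this log) does the export. The function names and the `snapshot-YYYYMMDD-HHMMSS` naming scheme are hypothetical.

```python
import subprocess
from datetime import datetime

def snapshot_command(source_txt, when, fmt="epub"):
    """Build the ebook-convert call for one snapshot (nothing is executed here)."""
    # hypothetical naming scheme: snapshot-YYYYMMDD-HHMMSS.<format>
    output = "snapshot-%s.%s" % (when.strftime("%Y%m%d-%H%M%S"), fmt)
    return ["ebook-convert", source_txt, output]

def take_snapshot(source_txt, fmt="epub"):
    """Run the conversion; requires calibre's ebook-convert on the PATH."""
    cmd = snapshot_command(source_txt, datetime.now(), fmt)
    subprocess.check_call(cmd)
    return cmd[2]  # name of the file that was written

print(snapshot_command("cat-book.txt", datetime(2012, 10, 5, 18, 36, 49)))
```

Passing `fmt="pdf"` would ask for a PDF instead, since `ebook-convert` picks the output format from the file extension.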

An ongoing book: questions

How is it different from a project like Booki? It seems to me that Booki's technical structure works well, but both its contents and its forms seem not that interesting. Perhaps the process through which one contributes is still too heavy and has too many obstacles, and therefore there isn't much circulation of ideas or a feeling of a community around it.

Wikipedia seems to take that weight away from contributors. They are not forced to write entire articles, but only little pieces of information, like pieces of a puzzle. Perhaps that's why it is so successful in attracting contributors.

Wikipedia articles are also a space for frequent debate.

Wikipedia allows articles to be exported as PDF or assembled into a paper book which one can buy.

The difference between the project I am suggesting and these two examples obviously doesn't lie in technical innovations, but it could lie in the content, the form, and the processes involved in these aspects.

It tries not to be a functional service for writing books or constructing encyclopedic knowledge. Rather, it will try to look at the potential and specificities of marginal material. Its form will try to call for renewed attention, but also to reflect the activity around each text, such as notes and discussions.

Read: discussion of M. Fuller w/ Sean Dockray (aaaaaaarg); at some point they refer to the importance of community in Wikipedia.

a prototype of the spam book

Here is a quick and dirty prototype of some of the ideas described in the two previous posts