User:Rita Graca/gradproject/project proposal 6

Rita Graca (talk | contribs) |

Rita Graca (talk | contribs) No edit summary |

||

| (48 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

'''<big> | '''<big><big>Project Proposal</big></big>''' <br> | ||

29 January 2020 | |||

| Line 6: | Line 6: | ||

===What do you want to make?=== | ===What do you want to make?=== | ||

In this project, I want to | Is it possible to fight hate within the platforms battlefield? In this project, I want to give attention to community movements that seek to regulate hate on social media. I aim to build a platform to share a series of conversations turned into a podcast. In each episode, I will invite a different person to discuss ways of reducing online hate related to their practice or daily life. I will upload the podcast to a platform where I can explore and prototype different forms of listening. | ||

Online spaces are full of shaming, harassment, hate speech, racism. My interest focuses on the collective consciousness that is urgent to reduce this hate. A proactive approach comes from community strategies that seek to regulate deviant behaviour. User movements follow informal sets of rules which are clear for a specific community but often scatter through different groups and platforms. It is also true that online traces are often lost, movements morphed into others. In an attempt to find evidence of group actions that mitigate hate, I was screenshotting the web. Right now, I feel there is a need for more robust documentation. | |||

My interest | |||

<gallery widths=350px heights=350px> | <gallery widths=350px heights=350px> | ||

File: | File:twittercancel.jpg | Users encouraging the boycott of the musician R. Kelly. Digital vigilantism allows users to denounce hateful content within the platform's structure. | ||

File: | File:witchesmastodon.png | Community rules for ''witches.live''. Another bottom-up strategy that has been receiving a lot of attention is the development of rigorous codes of conduct. | ||

</gallery> | </gallery> | ||

===How do you plan to make it?=== | ===How do you plan to make it?=== | ||

I aim to create a digital audio file series. In each episode, I will be having a conversation with a guest actively involved in | I aim to create a digital audio file series. In each episode, I will be having a conversation with a guest actively involved in moderating social media. I'm touching on topics of digital vigilantism, codes of conduct, personal design tools. Alongside, I will explore ''strategies of care'' – I will prototype different features for the platform where the podcast is shared. These features are my experiences with different ways of listening. | ||

Listening doesn't mean just hearing. The platform can incorporate paraphrasing, reinterpreting, annotating, drawing, uploading, commenting, remixing, transcribing and all different strategies I can explore for active listening. While I celebrate the actions of speaking up, I feel the need to provide the balance of receiving the information. I'm following closely Kate Crawford interest in assuming listening as a metaphor to capture forms of online participation. (Crawford, 2011) I hope this podcast, and the subsequent prototypes of listening, builds a platform for understanding and reflection of our social networks. | |||

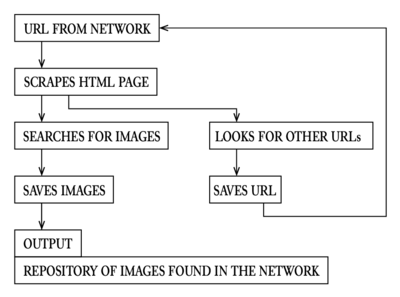

Since the beginning of the project, I've prototyped Twitter bots that help me collect online evidence. I attended a meeting from a research group on digital vigilantism. I reached out to different people and recorded the first audio episodes. I'm in touch with CODARTS students that are helping me produce the musical cues I want to integrate into the platform. I'm learning ''Flask'' and ''Jinja'' to create and maintain a flexible collection of materials online. I've been prototyping ways of active listening. I talked with moderators of different communities. Going forward, I aim to connect and record with more people to have a diverse range of experiences. I will consolidate my strategies of care by understanding what makes more sense to incorporate in the project. I will experience the best way to organise all the information I'm collecting and creating. | |||

<gallery widths=350px heights=350px> | |||

< | File:Bot timeline.png | Bot collecting traces of hashtag activism on Twitter. | ||

</gallery> | |||

===What is your timetable?=== | ===What is your timetable?=== | ||

| Line 54: | Line 43: | ||

===Why do you want to make it?=== | ===Why do you want to make it?=== | ||

'' | ''It's urgent.'' When diving into the subject of social media, the amount of hate is overwhelming. The lack of credibility of the media casts a shadow on genuine social movements and mobilisations online. There's urgency in amplifying authentic conversations. Conversations about fighting hateful behaviour, the changes we aim to see, possible solutions from the platforms, the limitations of user actions. There is so much noise on social media – it becomes urgent to listen. | ||

'' | ''It's contemporary.'' The users have been demanding more reliable platforms. The bottom-up approaches are telling of the conscious need to reduce hate towards others. Social media companies picked out the trend and are providing some changes. In April, TikTok added two new features to promote a safer app experience. In June, Youtube promised to release new features in an attempt to be more transparent with their algorithms, which included reducing video suggestions of supremacists. In July, Twitter collected user feedback and expanded their rules for hateful language against religious groups. | ||

'' | ''It's exciting.'' The real effects of the platforms attempts to reduce hate are debatable and also part of well-thought marketing strategies. And honestly, they are not enough. More than ever, there's a need to reformulate our social platforms and attempt to provide healthier, safer online spaces. As a designer, a media student and a social media user, I feel compelled to join the struggle. | ||

===Who can help you and how?=== | ===Who can help you and how?=== | ||

Past Xpub students like Roel Roscam and Lídia Pereira. They both research decentralised networks known for encouraging different codes of conduct. | |||

Femke Snelting, with the connection with Collective Conditions. This is a worksession from Constant about codes of conduct, bug reports, licenses and complaints. | |||

Clara Balaguer, for her experience on responding to hate on social media, especially online trolling. | |||

Research groups such as the Surveillance, visibility and reputation Master. I will attend at least one of their meetings about digital vigilantism. | |||

Marloes de Valk, especially with the connection with Impakt festival. The programme for this year is called Speculative Interfaces, and it will investigate the interaction and relationship between technology and humans, and how this relationship can alter behaviours. I will attend two days of the festival. | |||

Xpub staff and students, for the variety of useful input. | Xpub staff and students, for the variety of useful input. | ||

| Line 76: | Line 67: | ||

===Relation to previous practice=== | ===Relation to previous practice=== | ||

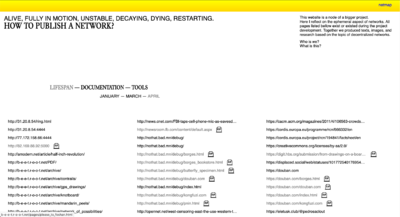

I | In the first year of the programme, XPUB discussed decentralised networks on ''Special Issue 8: The Network We (de)Served''. I understood how creating new platforms and looking for alternatives reveals the desire for bottom-up changes and more active end-users. It became clear that some models of social media propagate limited ideas, and those ideas shape our society. As a project for that same Special Issue, I prototyped some tools that helped me visualise the ideas I was discussing. To facilitate the research with tools is something that I intend to continue for this final project. | ||

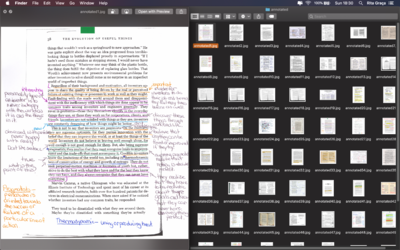

Equally important, throughout the Reading and Writing & Research Methodologies classes, there was big enthusiasm to explore different ways of collective reading. Together we built readers and our annotation systems. In the second trimester, I attempted to train a computer to recognise hand-written notes. My previous work on annotating methods boosted my interest to apply similar features in my final project. | |||

<gallery widths = | <gallery widths=400px heights=300px> | ||

File:Nothatbad1.png| Last year's work on how to publish networks | File:Nothatbad1.png| Last year's work on how to publish networks | ||

File:Tool 1.png| Development of a tool to archive images in networks | File:Tool 1.png| Development of a tool to archive images in networks | ||

File:annotated_eg.png | At the time of the Special Issue 9, a point of interest for everyone was annotations. I was curious if we could train a computer to see all of these traces, so I started prototyping some examples. Annotated example from data set. | |||

</gallery> | </gallery> | ||

===Relation to a larger context=== | ===Relation to a larger context=== | ||

What can we do against violent social platforms filled with hate speech that harms users? A fair answer is to insist on responsibility either from the government, tech companies or international organisations. Laws, such as the NetzDG Law in Germany, are admirable initiatives. The NetzDG law (or the ''Netzwerkdurchsetzungsgesetz'') is controversial, but it aimed to give legal importance to flagging, complaining and reporting inside platforms. Not every country can rely on a democratic government. However, these laws can set an example for so many social media companies that are US based, as well as European data centres. These legal discussions deserve more encouragement. | |||

Users have been putting more pressure on social platforms to act politically towards users, something that these businesses have been avoiding. In the US, publishers such as traditional newspapers curate content, so they have responsibility for what is published. US laws declare that an ''interactive computer service'' is not a publisher. (Communications Decency Act, 1996) This means computer services can't be held accountable for what their users publish. Facebook is a computer service. However, when it starts banning content and deciding what is appropriate content, it's making editorial decisions. There's still some confusion where lies the responsability to moderate hate content. | |||

In 2016, four researchers analysed the policies of fifteen social media platforms. (Pater et al., 2016) At the time, all but Vine and VK, mention the term harassment in their policies. This means that it was already considered problematic behaviour. However, not one of the fifteen platforms was defining what constituted harassment. Is it publishing a personal address online, or is it a hurtful comment? Are there any repercussions for such behaviour? The urge to take control of our online spaces reveals the present concern over growing hateful platforms, the absence of safe places, and an overall lack of accountability. For these reasons, it's stimulating to understand how users are using their informal techniques to regulate hate on their social networks. | |||

| Line 108: | Line 92: | ||

Ananny, M. and Crawford, K. (2018) Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. ''New Media & Society'', 20 (3): 973–989. | Ananny, M. and Crawford, K. (2018) Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. ''New Media & Society'', 20 (3): 973–989. | ||

'' | Constant (2019) ''Colective Conditions.'' | ||

Crawford, K. (2011) Listening, not lurking: The neglected form of participation. ''Cultures of participation'', 63 – 74. | |||

''DNL# 13: HATE NEWS. Keynote with Andrea Noel and Renata Avila'' (2018) Film.<br> | ''DNL# 13: HATE NEWS. Keynote with Andrea Noel and Renata Avila'' (2018) Film.<br> | ||

Available at: https://www.youtube.com/watch?v=l2z6jP0Ynwg&list=PLmm_HP_Sb_cTFwQrgkRvP8yqJqerkttpm&index=3 (Accessed: 12 November 2019). | Available at: https://www.youtube.com/watch?v=l2z6jP0Ynwg&list=PLmm_HP_Sb_cTFwQrgkRvP8yqJqerkttpm&index=3 (Accessed: 12 November 2019). | ||

''DNL# 13: HATE NEWS. Panel with Caroline Sinders, Øyvind Strømmen, Cathleen Berger, Margarita Tsomou'' (2018) Film. <br> | |||

Available at: https://www.youtube.com/watch?v=9vTo_4kKqYM&list=PLmm_HP_Sb_cTFwQrgkRvP8yqJqerkttpm&index=6&t=0s (Accessed: 12 November 2019). | |||

Dubrofsky, R.E. and Magnet, S. (2015) ''Feminist surveillance studies.'' Durham: Duke University Press, 221–228. | Dubrofsky, R.E. and Magnet, S. (2015) ''Feminist surveillance studies.'' Durham: Duke University Press, 221–228. | ||

Freeman, J. (2013) The Tyranny of Structurelessness. WSQ: Women’s Studies Quarterly, 41 (3–4): 231–246. | |||

Holmes, K. and Maeda, J. (2018) ''Mismatch: how inclusion shapes design. Simplicity : design, technology, business, life.'' Cambridge, Massachusetts ; London, England: The MIT Press. | Holmes, K. and Maeda, J. (2018) ''Mismatch: how inclusion shapes design. Simplicity : design, technology, business, life.'' Cambridge, Massachusetts ; London, England: The MIT Press. | ||

| Line 121: | Line 110: | ||

Ingraham, C. and Reeves, J. (2016) New media, new panics. ''Critical Studies in Media Communication'', 33 (5): 455–467. | Ingraham, C. and Reeves, J. (2016) New media, new panics. ''Critical Studies in Media Communication'', 33 (5): 455–467. | ||

Pater, J., Kim, M., Mynatt, E., et al. (2016) ''Characterizations of Online Harassment: Comparing Policies Across Social Media Platforms'', 369-374. | |||

Trottier, D. (2019) Denunciation and doxing: towards a conceptual model of digital vigilantism. ''Global Crime'', pp. 1–17. | |||

Winner, L. (1980) Do Artifacts Have Politics? ''Daedalus'', 109(1), 121–136 | Winner, L. (1980) Do Artifacts Have Politics? ''Daedalus'', 109(1), 121–136 | ||

</div> | |||

Latest revision as of 16:51, 10 February 2020

Project Proposal

29 January 2020

What do you want to make?

Is it possible to fight hate on the platforms' own battlefield? In this project, I want to draw attention to community movements that seek to regulate hate on social media. I aim to build a platform for sharing a series of conversations turned into a podcast. In each episode, I will invite a different person to discuss ways of reducing online hate related to their practice or daily life. I will upload the podcast to a platform where I can explore and prototype different forms of listening.

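Online spaces are full of shaming, harassment, hate speech and racism. My interest lies in the collective awareness that this hate urgently needs to be reduced. A proactive approach comes from community strategies that seek to regulate deviant behaviour. User movements follow informal sets of rules that are clear within a specific community but are often scattered across different groups and platforms. Online traces are also often lost, and movements morph into others. In an attempt to find evidence of group actions that mitigate hate, I have been taking screenshots of the web. Right now, I feel there is a need for more robust documentation.

Image: Users encouraging the boycott of the musician R. Kelly. Digital vigilantism allows users to denounce hateful content within the platform's structure.

Image: Community rules for witches.live. Another bottom-up strategy that has been receiving a lot of attention is the development of rigorous codes of conduct.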

How do you plan to make it?

I aim to create a series of digital audio files. In each episode, I will have a conversation with a guest actively involved in moderating social media. The conversations touch on digital vigilantism, codes of conduct and personal design tools. Alongside this, I will explore strategies of care: I will prototype different features for the platform where the podcast is shared. These features are my experiments with different ways of listening.

Listening doesn't just mean hearing. The platform can incorporate paraphrasing, reinterpreting, annotating, drawing, uploading, commenting, remixing, transcribing and any other strategy for active listening that I can explore. While I celebrate the act of speaking up, I feel the need to balance it with the act of receiving information. I am closely following Kate Crawford's interest in taking listening as a metaphor for forms of online participation (Crawford, 2011). I hope this podcast, and the prototypes of listening that follow from it, will build a platform for understanding and reflecting on our social networks.
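
Several of the listening features above (annotating, commenting, paraphrasing, transcribing) share one idea: a listener's contribution is tied to a moment in an episode. The following is a minimal Python sketch of that idea, assuming every note is anchored to a time range in seconds. The `Episode` and `Annotation` classes, their fields and the `kind` labels are hypothetical illustrations, not a description of how the project's platform is built.

```python
from dataclasses import dataclass, field


@dataclass
class Annotation:
    """A listener's note anchored to a time range of an episode (in seconds)."""
    start: float
    end: float
    author: str
    kind: str  # hypothetical labels such as "comment", "paraphrase", "transcript"
    text: str


@dataclass
class Episode:
    """One podcast episode plus the notes listeners have attached to it."""
    title: str
    duration: float
    annotations: list[Annotation] = field(default_factory=list)

    def annotate(self, start: float, end: float, author: str,
                 kind: str, text: str) -> Annotation:
        # Reject ranges that run backwards or fall outside the recording.
        if not 0 <= start < end <= self.duration:
            raise ValueError("annotation must lie within the episode")
        note = Annotation(start, end, author, kind, text)
        self.annotations.append(note)
        return note

    def at(self, t: float) -> list[Annotation]:
        """Return the notes covering playback position t, earliest first."""
        hits = [a for a in self.annotations if a.start <= t < a.end]
        return sorted(hits, key=lambda a: a.start)


if __name__ == "__main__":
    episode = Episode("Episode 1", duration=1800.0)
    episode.annotate(60, 120, "listener-a", "paraphrase", "Moderation as care work")
    episode.annotate(90, 95, "listener-b", "transcript", "Codes of conduct as shared rules")
    print([note.author for note in episode.at(92)])  # ['listener-a', 'listener-b']
```

In this sketch a player would call `at()` as playback advances to surface the notes for the current moment. Keeping each contribution as a plain record, rather than baking it into the audio, would also leave it open to later remixing or export.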

Since the beginning of the project, I've prototyped Twitter bots that help me collect online evidence. I attended a meeting from a research group on digital vigilantism. I reached out to different people and recorded the first audio episodes. I'm in touch with CODARTS students that are helping me produce the musical cues I want to integrate into the platform. I'm learning Flask and Jinja to create and maintain a flexible collection of materials online. I've been prototyping ways of active listening. I talked with moderators of different communities. Going forward, I aim to connect and record with more people to have a diverse range of experiences. I will consolidate my strategies of care by understanding what makes more sense to incorporate in the project. I will experience the best way to organise all the information I'm collecting and creating.
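
The bots themselves are not documented here, but the collecting step they perform can be sketched. Below is a minimal Python sketch, assuming the posts have already been fetched by some other means: no Twitter API calls are shown, and the `id`/`text` field names, the function names and the JSON layout are all hypothetical. It keeps only posts that use a tracked hashtag, skips posts already stored, and timestamps each record so the traces can outlive the original posts. A simple regular expression stands in for proper hashtag parsing.

```python
import json
import re
from datetime import datetime, timezone

# Simplification: a hashtag is '#' followed by word characters.
HASHTAG = re.compile(r"#(\w+)")


def extract_hashtags(text: str) -> set[str]:
    """Lower-cased hashtags in a post, without the leading '#'."""
    return {tag.lower() for tag in HASHTAG.findall(text)}


def collect_traces(posts, tracked, archive):
    """Archive posts that use any tracked hashtag, skipping ids already stored.

    posts:   iterable of dicts with (assumed) keys "id" and "text"
    tracked: hashtags to follow, with or without the leading '#'
    archive: dict of post id -> record, updated in place
    Returns the records added on this run.
    """
    wanted = {t.lower().lstrip("#") for t in tracked}
    added = []
    for post in posts:
        if post["id"] in archive:
            continue  # already saved on an earlier run
        matched = extract_hashtags(post["text"]) & wanted
        if matched:
            record = {
                "id": post["id"],
                "text": post["text"],
                "hashtags": sorted(matched),
                "collected_at": datetime.now(timezone.utc).isoformat(),
            }
            archive[post["id"]] = record
            added.append(record)
    return added


def save_archive(archive, path):
    """Write the archived records to disk as UTF-8 JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(list(archive.values()), f, ensure_ascii=False, indent=2)
```

Because already-archived ids are skipped, running `collect_traces` repeatedly on fresh batches only adds new matches, which would suit a bot that polls on a schedule. A tag such as `#examplecampaign` would be a placeholder for whatever movement is being followed.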

What is your timetable?

September, October: Ground my interests and clarify what I want to work on by researching and finding projects. Fast prototyping.

November, December: The project proposal is written, so my scope is set. Have a more specific direction for the prototypes. Who is my audience? Engage with users.

January: Let the feedback from the assessment, and the break, feed new input into the project. Organise a workshop (Py.rate.chnic sessions) that lets other people experiment with and talk about the project.

February, March: Put my prototypes together to create a bigger platform. The project will expand from small experiments into a combined project. How will people engage with my project? Think about distribution, amplification and contributions from others!

April, May: The written thesis is delivered. Focus on the project. Test my prototypes, perform them or put them online. Is it useful to organise more workshops or conversations around the subject?

June, July: Finish everything and conclude the final project. Prepare the presentation.

Why do you want to make it?

It's urgent. When you dive into the subject of social media, the amount of hate is overwhelming. The media's lack of credibility casts a shadow on genuine social movements and mobilisations online. There's urgency in amplifying authentic conversations: conversations about fighting hateful behaviour, the changes we want to see, possible solutions from the platforms and the limits of user action. There is so much noise on social media that it becomes urgent to listen.

It's contemporary. Users have been demanding more reliable platforms, and bottom-up approaches reveal a conscious need to reduce hate towards others. Social media companies have picked up on the trend and are making some changes. In April 2019, TikTok added two new features to promote a safer app experience. In June, YouTube promised to release new features in an attempt to be more transparent about its algorithms, including reducing recommendations of supremacist videos. In July, Twitter collected user feedback and expanded its rules against hateful language targeting religious groups.

It's exciting. The real effects of the platforms' attempts to reduce hate are debatable, and those attempts are also part of well-thought-out marketing strategies. And honestly, they are not enough. More than ever, there's a need to reformulate our social platforms and try to provide healthier, safer online spaces. As a designer, a media student and a social media user, I feel compelled to join the struggle.

Who can help you and how?

Past XPUB students such as Roel Roscam and Lídia Pereira, who both research decentralised networks known for encouraging different codes of conduct.

Femke Snelting, through the connection with Collective Conditions, a worksession by Constant about codes of conduct, bug reports, licenses and complaints.

Clara Balaguer, for her experience in responding to hate on social media, especially online trolling.

Research groups such as the Surveillance, visibility and reputation Master. I will attend at least one of their meetings about digital vigilantism.

Marloes de Valk, especially through the connection with the Impakt festival. This year's programme, Speculative Interfaces, will investigate the interaction and relationship between technology and humans, and how this relationship can alter behaviour. I will attend two days of the festival.

XPUB staff and students, for their wide range of useful input.

Relation to previous practice

In the first year of the programme, XPUB discussed decentralised networks on Special Issue 8: The Network We (de)Served. I understood how creating new platforms and looking for alternatives reveals the desire for bottom-up changes and more active end-users. It became clear that some models of social media propagate limited ideas, and those ideas shape our society. As a project for that same Special Issue, I prototyped some tools that helped me visualise the ideas I was discussing. To facilitate the research with tools is something that I intend to continue for this final project.

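Equally important, throughout the Reading and Writing & Research Methodologies classes, there was great enthusiasm for exploring different ways of reading collectively. Together we built readers and our own annotation systems. In the second trimester, I attempted to train a computer to recognise handwritten notes. My previous work on annotation methods increased my interest in applying similar features to my final project.

Image: Last year's work on how to publish networks.

Image: Development of a tool to archive images in networks.

Image: During Special Issue 9, annotation was a point of interest for everyone. I was curious whether we could train a computer to see all of these traces, so I started prototyping some examples. Annotated example from the data set.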

Relation to a larger context

What can we do against violent social platforms filled with hate speech that harms users? A fair answer is to insist on accountability from governments, tech companies or international organisations. Laws such as the NetzDG in Germany are admirable initiatives. The NetzDG (Netzwerkdurchsetzungsgesetz, or Network Enforcement Act) is controversial, but it aims to give legal weight to flagging, complaining and reporting inside platforms. Not every country can rely on a democratic government, but laws like this can set an example for the many US-based social media companies, as well as for European data centres. These legal discussions deserve more encouragement.

Users have been putting more pressure on social platforms to take political responsibility towards their users, something these businesses have been avoiding. In the US, publishers such as traditional newspapers curate content, so they are responsible for what they publish. US law states that the provider of an interactive computer service shall not be treated as the publisher of information provided by its users (Communications Decency Act, 1996). This means such services generally cannot be held liable for what their users publish. Facebook is such a service. However, when it bans content and decides what is appropriate, it is making editorial decisions. It therefore remains unclear where the responsibility to moderate hateful content lies.

In 2016, four researchers analysed the policies of fifteen social media platforms (Pater et al., 2016). At the time, all of them except Vine and VK mentioned the term harassment in their policies, which shows that it was already considered problematic behaviour. However, none of the fifteen platforms defined what constituted harassment. Is it publishing someone's personal address online, or is it a hurtful comment? Are there any repercussions for such behaviour? The urge to take control of our online spaces reveals ongoing concern over increasingly hateful platforms, the absence of safe spaces and an overall lack of accountability. For these reasons, it is compelling to understand how users apply their own informal techniques to regulate hate on their social networks.

References

Ananny, M. and Crawford, K. (2018) Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. New Media & Society, 20 (3): 973–989.

Constant (2019) Collective Conditions.

Crawford, K. (2011) Listening, not lurking: The neglected form of participation. Cultures of participation, 63–74.

DNL# 13: HATE NEWS. Keynote with Andrea Noel and Renata Avila (2018) Film. Available at: https://www.youtube.com/watch?v=l2z6jP0Ynwg&list=PLmm_HP_Sb_cTFwQrgkRvP8yqJqerkttpm&index=3 (Accessed: 12 November 2019).

DNL# 13: HATE NEWS. Panel with Caroline Sinders, Øyvind Strømmen, Cathleen Berger, Margarita Tsomou (2018) Film. Available at: https://www.youtube.com/watch?v=9vTo_4kKqYM&list=PLmm_HP_Sb_cTFwQrgkRvP8yqJqerkttpm&index=6&t=0s (Accessed: 12 November 2019).

Dubrofsky, R.E. and Magnet, S. (2015) Feminist surveillance studies. Durham: Duke University Press, 221–228.

Freeman, J. (2013) The Tyranny of Structurelessness. WSQ: Women’s Studies Quarterly, 41 (3–4): 231–246.

Holmes, K. and Maeda, J. (2018) Mismatch: how inclusion shapes design. Simplicity: design, technology, business, life. Cambridge, Massachusetts; London, England: The MIT Press.

Ingraham, C. and Reeves, J. (2016) New media, new panics. Critical Studies in Media Communication, 33 (5): 455–467.

Pater, J., Kim, M., Mynatt, E., et al. (2016) Characterizations of Online Harassment: Comparing Policies Across Social Media Platforms, 369–374.

Trottier, D. (2019) Denunciation and doxing: towards a conceptual model of digital vigilantism. Global Crime, 1–17.

Winner, L. (1980) Do Artifacts Have Politics? Daedalus, 109 (1): 121–136.