User:Pedro Sá Couto/Prototyping 3rd: Difference between revisions

| (17 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=Folder to Workshop= | |||

https://git.xpub.nl/pedrosaclout/Workshop_Folder | |||

==Makefile== | |||

<source lang="bash"> | |||

src=$(shell ls *.jpeg) | |||

pdf=$(src:%.jpeg=%.pdf) | |||

pdf: $(pdf) | |||

zapspaces: | |||

rename "s/ /_/" * | |||

rename "s/\.jpg/.jpeg/" * | |||

# Scan.pdf: Scan.jpeg | |||

# tesseract Scan.jpeg Scan -l eng pdf | |||

%.pdf: %.jpeg | |||

tesseract $*.jpeg $* -l eng pdf | |||

# %.ppm: %.jpeg | |||

# convert $*.jpeg $*.ppm | |||

# | |||

# %.un.jpeg: %.un.ppm | |||

# convert $*.un.ppm $*.un.jpeg | |||

# | |||

# %.un.jpeg: %.jpeg | |||

# convert $*.jpeg tmp.ppm | |||

# unpaper tmp.ppm tmp2.ppm | |||

# convert tmp2.ppm $*.un.jpeg | |||

# rm tmp.ppm | |||

# rm tmp2.ppm | |||

# | |||

# #debug vars | |||

# print-%: | |||

# @echo '$*=$($*)' | |||

</source> | |||

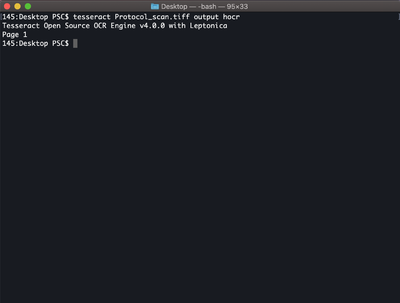

==OCR all jpegs== | |||

<source lang="bash"> | |||

#!/bin/bash | |||

cd "$(dirname "$0")" | |||

make zapspaces | |||

make | |||

</source> | |||

==Merge all the pdfs together== | |||

<source lang="bash"> | |||

#!/bin/bash | |||

cd "$(dirname "$0")" | |||

pdftk *.pdf cat output newfile.pdf | |||

</source> | |||

=Understanding how to use Json+Libgen API + Monoskop= | |||

<source lang="bash"> | |||

#"using an api" in python | |||

#Prototyping 29 MAY 2019 | |||

#Piet Zwart Institute | |||

import sys | |||

import json | |||

from json import loads | |||

from urllib.request import urlopen | |||

from pprint import pprint | |||

def get_json (url): | |||

#save the json to a variable | |||

file = urlopen(url) | |||

#prints only an object "<http.client.HTTPResponse object at 0x103e1c860>" | |||

print (file) | |||

#you need to read the object | |||

#b means bites that have to be decoded into a string | |||

text = file.read().decode("utf-8") | |||

#check the type of the object | |||

pprint(type(text)) | |||

#see the string | |||

pprint(text) | |||

#turn the string into a more structured object type like a dictionary | |||

#json loads asks for a string input | |||

#save it to a variable | |||

data = json.loads(text) | |||

pprint(len(data)) | |||

pprint(data) | |||

return data | |||

libgendata = get_json("http://gen.lib.rus.ec/json.php?ids=1&fields=*") | |||

monoskopdata = get_json("https://monoskop.org/api.php?action=query&list=allcategories&format=json&apiversion=2") | |||

#use the item in a for loop | |||

for item in libgendata: | |||

#print the item type | |||

pprint(type(item)) | |||

#look at the keys | |||

pprint(item.keys()) | |||

#print only the title | |||

pprint(item["title"]) | |||

# #use this if we are not sure because it wont break the code | |||

# print(item.get("title")) | |||

#its already a dictionary | |||

cats = monoskopdata["query"]["allcategories"] | |||

#use the item in a for loop | |||

for item in cats: | |||

#print the item type | |||

pprint(type(item)) | |||

print(item["*"]) | |||

</source> | |||

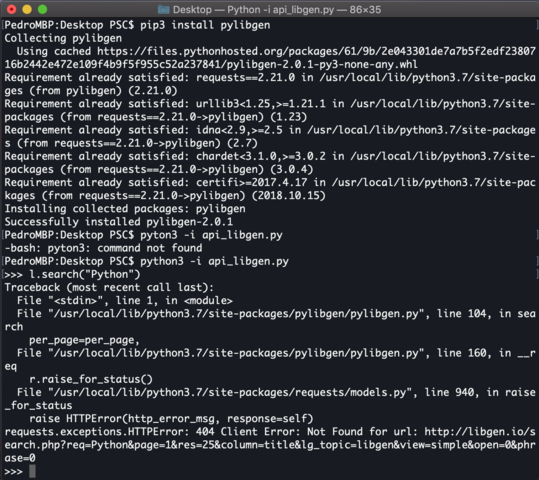

=pylibgen Libgen API for python library= | |||

==A fork from "https://github.com/joshuarli/pylibgen" —> libgen.io URL was outdated.== | |||

<gallery mode="packed" heights="320px"> | |||

File:404notworking.png | |||

File:404notworking2.png | |||

</gallery> | |||

<br> | |||

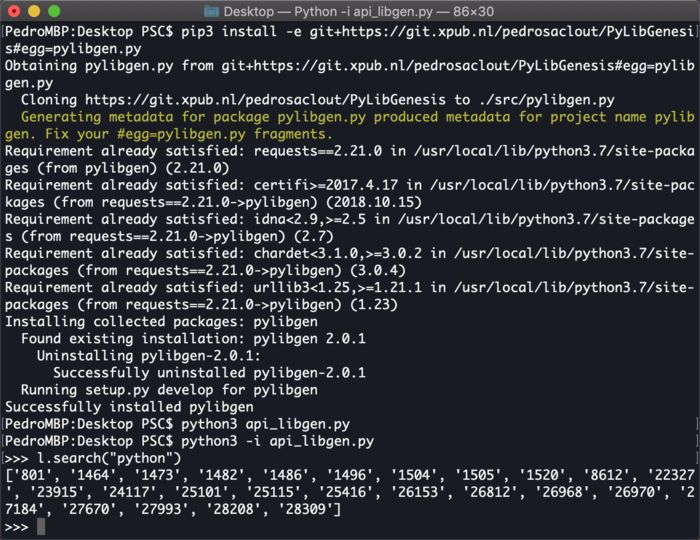

==Now links to —> http://gen.lib.rus.ec/== | |||

pylibgen test on Python 3.7, and can be installed via : | |||

*pip3 install -e git+https://git.xpub.nl/pedrosaclout/PyLibGenesis#egg=pylibgen.py | |||

<source lang="python"> | |||

import pprint | |||

import pylibgen | |||

from pylibgen import Library | |||

import requests | |||

bookname = input("Book name to search: ") | |||

l = Library() | |||

ids = l.search(bookname) | |||

# print(ids) | |||

idstourl = ",".join(ids ) | |||

print(idstourl) | |||

</source> | |||

[[File:pylibworking.png||700px|frameless|center]] | |||

=Watermarks, downloading, deleting= | =Watermarks, downloading, deleting= | ||

https://pad.xpub.nl/p/IFL_2018-05-13 | https://pad.xpub.nl/p/IFL_2018-05-13 | ||

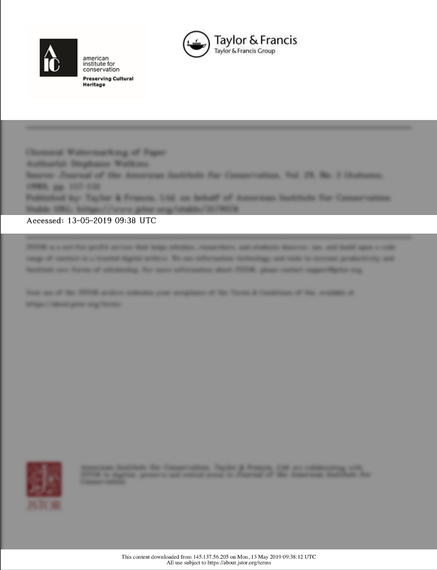

===JSOR (Ithaka Harbors, Inc) publishers=== | ===JSOR (Ithaka Harbors, Inc) publishers=== | ||

* download an article from http://jstor.org/ from within | * download an article from http://jstor.org/ from within the school´s internet | ||

* search for watermarks on the PDF | * search for watermarks on the PDF | ||

* look for some leads in https://sourceforge.net/p/pdfedit/mailman/message/27874955/ — https://github.com/kanzure/pdfparanoia/issues?utf8=%E2%9C%93&q=jstor | * look for some leads in https://sourceforge.net/p/pdfedit/mailman/message/27874955/ — https://github.com/kanzure/pdfparanoia/issues?utf8=%E2%9C%93&q=jstor | ||

<gallery mode="packed" heights=" | ===JSTOR Downloader=== | ||

#While working around the watermarks that JSTOR leaves in their PDFs, we understood that in their website, one can have a preview through the whole document without any of these watermarks. The idea began to download it directly from these preview images rather than actually downloading it from a JSTOR account. | |||

#https://www.jstor.org/stable/23267102?Search=yes&resultItemClick=true&searchText=tea&searchUri=%2Faction%2FdoBasicSearch%3FQuery%3Dtea%26amp%3Bacc%3Don%26amp%3Bfc%3Doff%26amp%3Bwc%3Don%26amp%3Bgroup%3Dnone&ab_segments=0%2Fl2b-basic-1%2Frelevance_config_with_tbsub_l2b&refreqid=search%3A9fd0deff8d3258de87d3b54d6dfad664&seq=1#metadata_info_tab_contents the idea is to create a script that iterates through the url sequence number("seq=i") | |||

#https://git.xpub.nl/pedrosaclout/jsort_scrape | |||

<source lang="python"> | |||

# import libraries | |||

from selenium import webdriver | |||

from selenium.webdriver.common.keys import Keys | |||

import os | |||

import time | |||

import datetime | |||

from pprint import pprint | |||

import requests | |||

import multiprocessing | |||

import base64 | |||

i = 1 | |||

while True: | |||

try: | |||

# URL iterates through the sequence number | |||

url = ("https://www.jstor.org/stable/23267102?Search=yes&resultItemClick=true&searchText=tea&searchUri=%2Faction%2FdoBasicSearch%3FQuery%3Dtea%26amp%3Bacc%3Don%26amp%3Bfc%3Doff%26amp%3Bwc%3Don%26amp%3Bgroup%3Dnone&ab_segments=0%2Fl2b-basic-1%2Frelevance_config_with_tbsub_l2b&refreqid=search%3A9fd0deff8d3258de87d3b54d6dfad664&" + "seq=%i#metadata_info_tab_contents"%i) | |||

# Tell Selenium to open a new Firefox session | |||

# and specify the path to the driver | |||

driver = webdriver.Firefox(executable_path=os.path.dirname(os.path.realpath(__file__)) + '/geckodriver') | |||

# Implicit wait tells Selenium how long it should wait before it throws an exception | |||

driver.implicitly_wait(10) | |||

driver.get(url) | |||

time.sleep(3) | |||

# get the image bay64 code | |||

img = driver.find_element_by_css_selector('#page-scan-container.page-scan-container') | |||

src = img.get_attribute('src') | |||

# check if source is correct | |||

# pprint(src) | |||

# strip type from Javascript to base64 string only | |||

base64String = src.split(',').pop(); | |||

pprint(base64String) | |||

# decode base64 string | |||

imgdata = base64.b64decode(base64String) | |||

# save the image | |||

filename = ('page%i.gif'%i) | |||

with open(filename, 'wb') as f: | |||

f.write(imgdata) | |||

driver.close() | |||

i+=1 | |||

print("DONE! Closing Window") | |||

except: | |||

print("Impossible to print image") | |||

driver.close() | |||

break | |||

time.sleep(1) | |||

</source> | |||

<gallery mode="packed" heights="380px"> | |||

File:blur01.png | File:blur01.png | ||

File:blur02.png | File:blur02.png | ||

File:indexing.gif | |||

</gallery> | </gallery> | ||

===Verso Books=== | ===Verso Books=== | ||

Latest revision as of 18:43, 15 June 2019

Folder to Workshop

https://git.xpub.nl/pedrosaclout/Workshop_Folder

Makefile

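# Every JPEG in the folder, and the PDF that each one produces
src = $(wildcard *.jpeg)
pdf = $(src:%.jpeg=%.pdf)

.PHONY: pdf zapspaces

# Default target: OCR every JPEG into a searchable PDF
pdf: $(pdf)

# Replace spaces in file names and rename .jpg to .jpeg
# (Perl rename; recipe lines must start with a tab)
zapspaces:
	rename 's/ /_/g' *
	rename 's/\.jpg$$/.jpeg/' *

# Scan.pdf: Scan.jpeg
# 	tesseract Scan.jpeg Scan -l eng pdf

%.pdf: %.jpeg
	tesseract $*.jpeg $* -l eng pdf

# %.ppm: %.jpeg
# 	convert $*.jpeg $*.ppm
#
# %.un.jpeg: %.un.ppm
# 	convert $*.un.ppm $*.un.jpeg
#
# %.un.jpeg: %.jpeg
# 	convert $*.jpeg tmp.ppm
# 	unpaper tmp.ppm tmp2.ppm
# 	convert tmp2.ppm $*.un.jpeg
# 	rm tmp.ppm
# 	rm tmp2.ppm
#
# #debug vars
# print-%:
# 	@echo '$*=$($*)'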

OCR all jpegs

#!/bin/bash
# Run from the folder that contains this script
cd "$(dirname "$0")" || exit 1
make zapspaces
make
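The same two steps (tidying the file names, then running Tesseract once per image) can also be sketched in Python. This is only a minimal sketch and not part of the Workshop_Folder repository: the helper names `zap_name`, `ocr_command` and `ocr_folder` are hypothetical, the Tesseract arguments simply mirror the Makefile rule, and unlike `make zapspaces` it only renames image files.

```python
import subprocess
from pathlib import Path


def zap_name(name: str) -> str:
    """Mirror `make zapspaces`: replace spaces and rename .jpg to .jpeg."""
    name = name.replace(" ", "_")
    if name.endswith(".jpg"):
        name = name[: -len(".jpg")] + ".jpeg"
    return name


def ocr_command(jpeg: Path) -> list:
    """Build the same command as the Makefile rule `%.pdf: %.jpeg`."""
    return ["tesseract", str(jpeg), str(jpeg.with_suffix("")), "-l", "eng", "pdf"]


def ocr_folder(folder: Path) -> None:
    """Rename the scans, then OCR every JPEG that has no PDF yet."""
    for path in sorted(folder.iterdir()):
        new_name = zap_name(path.name)
        if path.suffix in (".jpg", ".jpeg") and new_name != path.name:
            path.rename(path.with_name(new_name))
    for jpeg in sorted(folder.glob("*.jpeg")):
        if not jpeg.with_suffix(".pdf").exists():
            subprocess.run(ocr_command(jpeg), check=True)
```

Calling `ocr_folder(Path("."))` would need Tesseract installed; the two pure helpers can be tried without it.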

Merge all the pdfs together

#!/bin/bash
cd "$(dirname "$0")" || exit 1
# newfile.pdf matches *.pdf too, so remove any previous merge first
rm -f newfile.pdf
pdftk *.pdf cat output newfile.pdf
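As a rough Python equivalent of the merge step, the sketch below builds the `pdftk` call from the PDFs found in a folder. The function names are hypothetical, and it assumes the merged file should keep the name `newfile.pdf` and should never be fed back in as an input.

```python
import subprocess
from pathlib import Path


def merge_command(folder: Path, output: str = "newfile.pdf") -> list:
    """Build the pdftk call, leaving out a merged file from an earlier run."""
    pages = sorted(p.name for p in folder.glob("*.pdf") if p.name != output)
    return ["pdftk", *pages, "cat", "output", output]


def merge(folder: Path) -> None:
    """Run pdftk inside the folder so the relative file names resolve."""
    subprocess.run(merge_command(folder), cwd=folder, check=True)
```

The pages are merged in alphabetical order, like the shell glob, so `page10.pdf` sorts before `page2.pdf` unless the numbers are zero-padded.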

Understanding how to use JSON with the Libgen and Monoskop APIs

#"using an api" in python

# Prototyping, 29 May 2019
# Piet Zwart Institute

import json
from urllib.request import urlopen
from pprint import pprint


def get_json(url):
    # open the URL; this returns a response object, not the JSON itself
    file = urlopen(url)
    # prints only an object such as "<http.client.HTTPResponse object at 0x103e1c860>"
    print(file)
    # you need to read the object: read() returns bytes (shown with a b prefix)
    # that have to be decoded into a string
    text = file.read().decode("utf-8")
    # check the type of the object
    pprint(type(text))
    # see the string
    pprint(text)
    # turn the string into a more structured object type like a dictionary;
    # json.loads expects a string as input
    data = json.loads(text)
    pprint(len(data))
    pprint(data)
    return data


libgendata = get_json("http://gen.lib.rus.ec/json.php?ids=1&fields=*")
monoskopdata = get_json("https://monoskop.org/api.php?action=query&list=allcategories&format=json&apiversion=2")

# loop over the items of the Libgen response
for item in libgendata:
    # print the item type
    pprint(type(item))
    # look at the keys
    pprint(item.keys())
    # print only the title
    pprint(item["title"])
    # use .get() when unsure the key exists: it returns None instead of raising KeyError
    # print(item.get("title"))

# the Monoskop response is already a dictionary
cats = monoskopdata["query"]["allcategories"]

# loop over the categories
for item in cats:
    # print the item type
    pprint(type(item))
    print(item["*"])
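The parsing step can be tried without a network connection. The sketch below is an illustration only: the sample string is made up and merely follows the shape the script reads from Monoskop (`query`, then `allcategories`, then `*`), and `category_names` is a hypothetical helper. It also shows the `.get()` approach mentioned in the comments, which avoids a `KeyError` when a key is missing.

```python
import json

# Made-up sample in the shape the script above reads from Monoskop.
SAMPLE = '{"query": {"allcategories": [{"*": "Art"}, {"*": "Sound"}, {}]}}'


def category_names(text: str) -> list:
    """Return every category name, skipping entries without a "*" key."""
    data = json.loads(text)
    # .get() with a default keeps going when a level is missing
    cats = data.get("query", {}).get("allcategories", [])
    return [c["*"] for c in cats if "*" in c]


print(category_names(SAMPLE))
```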

pylibgen: a Libgen API library for Python

A fork of https://github.com/joshuarli/pylibgen, made because the libgen.io URL it used was outdated.

The fork now links to http://gen.lib.rus.ec/

pylibgen was tested on Python 3.7 and can be installed via:

- pip3 install -e git+https://git.xpub.nl/pedrosaclout/PyLibGenesis#egg=pylibgen.py

from pylibgen import Library

bookname = input("Book name to search: ")

library = Library()
# search for the book name and keep the ids that come back
ids = library.search(bookname)
# print(ids)

# join the ids into one comma-separated string that can go into a URL
idstourl = ",".join(ids)
print(idstourl)

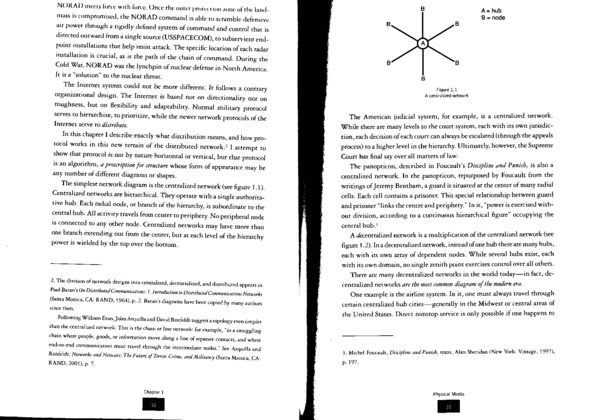
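One possible next step is to put the joined ids into the `json.php` URL used earlier on this page. The sketch below is an assumption rather than something taken from pylibgen: `lookup_url` is a hypothetical helper, and it assumes the endpoint accepts a comma-separated `ids` value, which is what the variable name `idstourl` suggests.

```python
from urllib.parse import urlencode

BASE = "http://gen.lib.rus.ec/json.php"  # endpoint used earlier on this page


def lookup_url(ids, fields="*"):
    """Join the ids with commas and build the json.php query string."""
    params = {"ids": ",".join(str(i) for i in ids), "fields": fields}
    # keep "," and "*" readable instead of percent-encoding them
    return BASE + "?" + urlencode(params, safe=",*")


print(lookup_url(["1", "2", "3"]))
```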

Watermarks, downloading, deleting

https://pad.xpub.nl/p/IFL_2018-05-13

JSTOR (Ithaka Harbors, Inc.) publishers

- Download an article from http://jstor.org/ from within the school's network

- Search for watermarks on the PDF

- Look for some leads in https://sourceforge.net/p/pdfedit/mailman/message/27874955/ and https://github.com/kanzure/pdfparanoia/issues?utf8=%E2%9C%93&q=jstor

JSTOR Downloader

- While working around the watermarks that JSTOR leaves in its PDFs, we noticed that the website offers a preview of the whole document without any of these watermarks. The idea became to download the document directly from these preview images rather than from a JSTOR account.

- Example URL: https://www.jstor.org/stable/23267102?Search=yes&resultItemClick=true&searchText=tea&searchUri=%2Faction%2FdoBasicSearch%3FQuery%3Dtea%26amp%3Bacc%3Don%26amp%3Bfc%3Doff%26amp%3Bwc%3Don%26amp%3Bgroup%3Dnone&ab_segments=0%2Fl2b-basic-1%2Frelevance_config_with_tbsub_l2b&refreqid=search%3A9fd0deff8d3258de87d3b54d6dfad664&seq=1#metadata_info_tab_contents The idea is to create a script that iterates through the URL sequence number ("seq=i").

- https://git.xpub.nl/pedrosaclout/jsort_scrape

# import libraries
# (written against Selenium 3; Selenium 4 replaces find_element_by_css_selector
# with find_element(By.CSS_SELECTOR, ...))
from selenium import webdriver
import os
import time
from pprint import pprint
import base64

# everything before "seq=" stays the same for every page; it is kept apart
# from the format string because it contains literal % characters
base_url = "https://www.jstor.org/stable/23267102?Search=yes&resultItemClick=true&searchText=tea&searchUri=%2Faction%2FdoBasicSearch%3FQuery%3Dtea%26amp%3Bacc%3Don%26amp%3Bfc%3Doff%26amp%3Bwc%3Don%26amp%3Bgroup%3Dnone&ab_segments=0%2Fl2b-basic-1%2Frelevance_config_with_tbsub_l2b&refreqid=search%3A9fd0deff8d3258de87d3b54d6dfad664&"

# geckodriver is expected next to this script
driver_path = os.path.dirname(os.path.realpath(__file__)) + '/geckodriver'

i = 1
while True:
    # URL iterates through the sequence number
    url = base_url + "seq=%i#metadata_info_tab_contents" % i
    # Tell Selenium to open a new Firefox session
    # and specify the path to the driver
    driver = webdriver.Firefox(executable_path=driver_path)
    try:
        # Implicit wait tells Selenium how long it should wait before it throws an exception
        driver.implicitly_wait(10)
        driver.get(url)
        time.sleep(3)
        # get the image base64 code
        img = driver.find_element_by_css_selector('#page-scan-container.page-scan-container')
        src = img.get_attribute('src')
        # check if source is correct
        # pprint(src)
        # strip the "data:...;base64," prefix, keeping the base64 string only
        base64String = src.split(',').pop()
        pprint(base64String)
        # decode base64 string
        imgdata = base64.b64decode(base64String)
        # save the image
        filename = 'page%i.gif' % i
        with open(filename, 'wb') as f:
            f.write(imgdata)
        i += 1
        print("DONE! Closing Window")
    except Exception:
        # no page scan could be read, e.g. when the sequence number runs past the last page
        print("Impossible to print image")
        break
    finally:
        # close the browser whether or not the page was saved
        driver.close()
    time.sleep(1)
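The base64 step can be checked on its own, without a browser. The sketch below is an illustration under assumptions: `decode_data_uri` is a hypothetical helper, and the sample string is made up to stand in for the `src` attribute, which the script above treats as a `data:` URI with the payload after the first comma.

```python
import base64


def decode_data_uri(src: str) -> bytes:
    """Drop the "data:<type>;base64," prefix and decode the payload."""
    header, _, payload = src.partition(",")
    if not header.startswith("data:") or not header.endswith(";base64"):
        raise ValueError("not a base64 data URI")
    return base64.b64decode(payload)


# Made-up sample standing in for the `src` attribute of a page scan.
sample = "data:image/gif;base64," + base64.b64encode(b"GIF89a").decode("ascii")
print(decode_data_uri(sample))
```

Checking the prefix first means an ordinary image URL raises a clear error instead of being written to disk as a broken file.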

Verso Books

- Create an account at https://www.versobooks.com/

- Download a book as an EPUB; a free one will do, for instance https://www.versobooks.com/books/2772-verso-2017-mixtape

- Search for watermarks in the EPUB

- Look at the Institute for Biblio-Immunology's First Communique (https://pastebin.com/raw/E1xgCUmb) for more leads, and at https://www.booxtream.com/
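Since an EPUB is a ZIP archive, the search step can be sketched as a scan over the archive members. This is only a sketch under assumptions: `find_marker` is a hypothetical helper, and what to look for (here a made-up e-mail address) is a guess, because the page does not say what the watermark looks like.

```python
import io
import zipfile


def find_marker(epub, marker: bytes) -> list:
    """Return the names of archive members whose bytes contain `marker`."""
    with zipfile.ZipFile(epub) as archive:
        return [name for name in archive.namelist() if marker in archive.read(name)]


# Build a tiny in-memory archive with made-up content to try the function.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as z:
    z.writestr("chapter1.xhtml", "<p>Licensed to reader@example.org</p>")
    z.writestr("chapter2.xhtml", "<p>plain text</p>")
buf.seek(0)
print(find_marker(buf, b"reader@example.org"))
```

With a real file, the first argument would be the path to the downloaded EPUB.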

Tesseract, OCR, Book scan

<script type="text/javascript">

//store all elements with class 'ocr_line' in 'lines'
var lines = document.querySelectorAll(".ocr_line");

//loop through each element in 'lines'
for (var i = 0; i < lines.length; i++){
  var line = lines[i];
  console.log(line.title);

  //split the content of 'title' at every space and store the list in 'parts'
  var parts = line.title.split(" ");
  console.log(parts);

  // parts[1] to parts[4] are the left, top, right and bottom edges,
  // so width and height are differences between them
  // ('lineTop' rather than 'top': at the top level of a browser script
  // 'top' is the read-only window.top, so the assignment would be ignored)
  var lineLeft = parseInt(parts[1], 10);
  var lineTop = parseInt(parts[2], 10);
  var width = (parseInt(parts[3], 10) - lineLeft);
  var height = (parseInt(parts[4], 10) - lineTop);

  // position the line absolutely using the values selected from 'parts'
  line.style = "position: absolute; left: " + lineLeft + "px; top: " + lineTop + "px; width: " + width + "px; height: " + height + "px; border: 5px solid lightblue";

  // do the same for every word, relative to the line that contains it
  var words = line.querySelectorAll(".ocrx_word");
  for (var e = 0; e < words.length; e++){
    var span = words[e];
    console.log(span.title);
    var wordParts = span.title.split(" ");
    console.log(wordParts);
    var wleft = parseInt(wordParts[1], 10);
    var wtop = parseInt(wordParts[2], 10);
    var wwidth = (parseInt(wordParts[3], 10) - wleft);
    var wheight = (parseInt(wordParts[4], 10) - wtop);
    span.style = "position: absolute; left: " + (wleft - lineLeft) + "px; top: " + (wtop - lineTop) + "px; width: " + wwidth + "px; height: " + wheight + "px; border: 2px solid purple";
  }
}