User:Alexander Roidl/master notes

No edit summary |

(→Model) |

||

| (11 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

==References == | ==References == | ||

====Berger, J., 2008. Ways of Seeing, 01 edition. ed. Penguin Classics, London.==== | ====Berger, J., 2008. Ways of Seeing, 01 edition. ed. Penguin Classics, London.==== | ||

what we see is different from our words (we see before we speak) | |||

seeing is dependent on knowledge | |||

The camera changed how we see (also the old paintings) | |||

They are in more than one place now, on your screen. | |||

Image once belonged to a fixed place (like church) | |||

* surrounding is part of the image | |||

* now the image is coming to you | |||

* the image travels | |||

What is the original painting? | |||

* The price (which depends upon it being genuine) makes it impressive / mysterious again | |||

The uninterrupted silence / stillness of the image | |||

How music / sound changes the meaning of images | |||

* as soon as an image is transmittable its meaning is likely to be manipulated or transformed | |||

Ideas very much related to Walter Benjamins concept of aura and the Art in the Age of mechanical reproduction. | |||

>> The way we see is influenced by images and vice versa. As the image changes, as already explored by Walter Benjamin, also its cultural implication changes. So we can see from this that changes in image production can have a major impact on culture. I see the same change happening to cultural production. | |||

====Bridle, J., 2018. New Dark Age: Technology and the End of the Future. Verso, London ; Brooklyn, NY.==== | ====Bridle, J., 2018. New Dark Age: Technology and the End of the Future. Verso, London ; Brooklyn, NY.==== | ||

| Line 23: | Line 48: | ||

Also, Bridle is describing the complexity of the technology that surrounds us. Unlike before the computers we naturally understood how certain things worked, we understand not even parts of the simplest things that we use daily. The postal system is quite graspable for all of us, while only few might understand what happens when we send an E–Mail. So Bridle asks: If we don’t know how they interconnect, work and who has the power over them how should we effect those systems. | Also, Bridle is describing the complexity of the technology that surrounds us. Unlike before the computers we naturally understood how certain things worked, we understand not even parts of the simplest things that we use daily. The postal system is quite graspable for all of us, while only few might understand what happens when we send an E–Mail. So Bridle asks: If we don’t know how they interconnect, work and who has the power over them how should we effect those systems. | ||

In my opinion Bridle gives a great insight in how technology interconnects with our life and he asks the pressing questions of our more and more digitised world. While Bridle says that »learning to code might not be enough«, I think that this sounds like an excuse of lurking deeper in questions regarding the structure of algorithms like machine learning. I think it is still important to trace back the fundamental structures of code, that is also connected to questions of language and politics itself. Bridle is not connecting on this very zoomed-in level of code, but so he does socially and politically. When Bridle is talking about the physicality I see a lot of connections to »A Prehistory of the Cloud« Tung-Hui Hu, which seems a very complimentary reading | In my opinion Bridle gives a great insight in how technology interconnects with our life and he asks the pressing questions of our more and more digitised world. While Bridle says that »learning to code might not be enough«, I think that this sounds like an excuse of lurking deeper in questions regarding the structure of algorithms like machine learning. I think it is still important to trace back the fundamental structures of code, that is also connected to questions of language and politics itself. Bridle is not connecting on this very zoomed-in level of code, but so he does socially and politically. When Bridle is talking about the physicality I see a lot of connections to »A Prehistory of the Cloud« Tung-Hui Hu, which seems a very complimentary reading in these terms. | ||

>> In his recent work Bridle shows the impact of technology and its political side. He formulates how algorithms influence our life and how we are not able to understand these models that we build of the world. I find this lack of understanding an important startingpoint for my work. | |||

| Line 36: | Line 63: | ||

> takes you from surprise egg video to masturbating micky mouse > tags/titles/algorithms take you to strange place (endless loop) | > takes you from surprise egg video to masturbating micky mouse > tags/titles/algorithms take you to strange place (endless loop) | ||

>> This shows how algorithms can create their own weird system (Reminds me also of a work by Sebastien Schmieg, where he looped through amazon recommondations). | |||

====Gerstner, K., 1964. Designing Programmes.==== | ====Gerstner, K., 1964. Designing Programmes.==== | ||

| Line 54: | Line 83: | ||

I think that what Gerstner describes is a very interesting approach in seeing the world as a program – but one might also wonder: is the world always reducable to a simple program? This is also something that James Bridle criticises in his recent book new dark age. We only try to describe reality with algorithms, we are creating models of the world, while we forget looking at the real world. Despite this the programmatic approach like we see it in Gerstners work or also in the OuLiPo group is a way to find new interpretations or representations of traditional matter, like literature, music or art. | I think that what Gerstner describes is a very interesting approach in seeing the world as a program – but one might also wonder: is the world always reducable to a simple program? This is also something that James Bridle criticises in his recent book new dark age. We only try to describe reality with algorithms, we are creating models of the world, while we forget looking at the real world. Despite this the programmatic approach like we see it in Gerstners work or also in the OuLiPo group is a way to find new interpretations or representations of traditional matter, like literature, music or art. | ||

>> Creating form through algorithm is an important aspect of Gerstners work as well as I plan it for my thesis. It is an early approach of understanding algorithms as a tool to describe visual forms. | |||

====Flusser, V., 2011. Into the Universe of Technical Images, 1 edition. ed. Univ Of Minnesota Press, Minneapolis.==== | ====Flusser, V., 2011. Into the Universe of Technical Images, 1 edition. ed. Univ Of Minnesota Press, Minneapolis.==== | ||

| Line 84: | Line 115: | ||

Next he is talking about keys (the instruments that influence the particles, that make them graspable again) | Next he is talking about keys (the instruments that influence the particles, that make them graspable again) | ||

>> This text is useful for a basic understanding of the technical images that surround us. Flusser examines carefully what it means that images change their function and representation and even understanding. | |||

====Gere, C., 2008. Digital Culture, 2nd Revised edition edition. ed. Reaktion Books, London.==== | ====Gere, C., 2008. Digital Culture, 2nd Revised edition edition. ed. Reaktion Books, London.==== | ||

Digital Culture by Chalie Gere investigates on how culture of digitisation turned into digital culture. | |||

She describes the history if computation and how | |||

Turing was inventing the generic computing. | |||

War and post war development pushed the computer further starting of from cryptographic needs for the war. Therefore it was possible for new technology to arise rapidly. | |||

| Line 119: | Line 157: | ||

Calculating Border between points | Calculating Border between points | ||

>> This is, i guess, a very unconventional academic reading, as it is more a tutorial-book for machine learning algorithms. But still it is very helpful in understanding what is going on under the hood of these new algorithms. Secondly it also serves with some interesting terminologies that would be interesting to relate how we talk about images and machine learning. Features in images, models, hidden layers, neural network, deep dream, deep learning, generalization, underfitting, overfitting… | |||

====Lev Manovich: Database as symbolic Form==== | ====Lev Manovich: Database as symbolic Form==== | ||

| Line 131: | Line 171: | ||

In conclusion Manovich discusses two very significant points of how the word is being represented in new media: one, the interface that lays on top and second the database that is behind it. His comparison with the narrative is crucial in order to understand how the new media changes our perception of information, which eventually creates the world as we know it. | In conclusion Manovich discusses two very significant points of how the word is being represented in new media: one, the interface that lays on top and second the database that is behind it. His comparison with the narrative is crucial in order to understand how the new media changes our perception of information, which eventually creates the world as we know it. | ||

>> This was a very important reading for me to understand how databases and narratives connect, and how forms of representation / storage change the way we perceive the world. | |||

====Hu, T.-H., 2016. A Prehistory of the Cloud, Reprint edition. ed. The MIT Press, Cambridge, Massachusetts.==== | ====Hu, T.-H., 2016. A Prehistory of the Cloud, Reprint edition. ed. The MIT Press, Cambridge, Massachusetts.==== | ||

| Line 146: | Line 190: | ||

more fundamental economic shift away from waged labor and toward what | more fundamental economic shift away from waged labor and toward what | ||

Maurizio Lazzarato terms the economy of “ immaterial labor ”« (p.39) | Maurizio Lazzarato terms the economy of “ immaterial labor ”« (p.39) | ||

====https://www.nytimes.com/2018/03/06/technology/google-artificial-intelligence.html==== | |||

How researchers try to make decisions of AI algorithms possible | |||

Decisions made by AI are complex to understand | |||

Researchers at Google try to reveal the layers by visualising them | |||

They don’t include anyone outside > only trying to make better algorithms for more complex problems | |||

>> Interesting example of trying to build another algorithm to fix the old one … funny reading … but also ……… google | |||

==Model== | |||

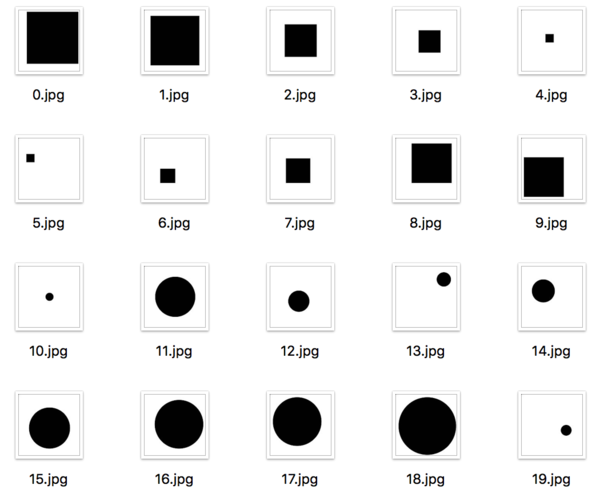

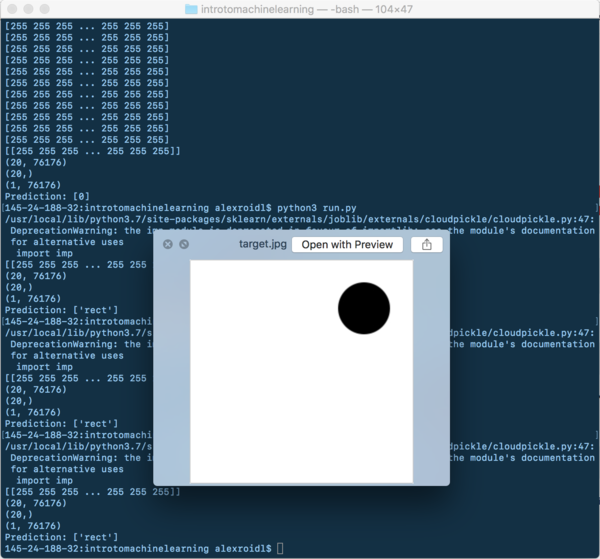

===Learning Machine Learning=== | |||

[[File:Screen Shot 2018-10-04 at 00.28.51.png|600px|unframed]] | |||

[[File:Screen Shot 2018-10-02 at 11.49.24.png|600px|unframed]] | |||

<pre> | |||

#from preamble import * | |||

from sklearn.datasets import load_iris | |||

from sklearn.model_selection import train_test_split | |||

import pandas as pd | |||

#import matplotlib.pyplot as plt | |||

import numpy as np | |||

import PIL | |||

from os import listdir | |||

def list_files(directory, extension): | |||

return (f for f in listdir(directory) if f.endswith('.' + extension)) | |||

directory = '/Users/alexroidl/xpub/introtomachinelearning/images' | |||

files = list_files(directory, "jpg") | |||

arr_data = [] | |||

for f in files: | |||

# Convert Image to array | |||

img = PIL.Image.open("images/"+f).convert("L") | |||

arr = np.array(img) | |||

arr = arr.reshape(-1) | |||

arr_data.append(arr) | |||

arr_data=np.array(arr_data) | |||

arr_target = np.array(["rect","rect","rect","rect","rect","rect","rect","rect","rect","rect","circle","circle","circle", | |||

"circle","circle","circle","circle","circle","circle","circle"]) | |||

img = PIL.Image.open("target.jpg").convert("L") | |||

arr_new = np.array(img) | |||

arr_new = arr_new.reshape(-1) | |||

arr_new= arr_new.reshape(1, -1) | |||

x_train, x_test, y_train, y_test = train_test_split( | |||

arr_data,arr_target , random_state=0) | |||

from sklearn.neighbors import KNeighborsClassifier | |||

# number of points it relates to in the graph | |||

knn = KNeighborsClassifier(n_neighbors=2) | |||

knn.fit(arr_data, arr_target) | |||

prediction = knn.predict(arr_new) | |||

print("Prediction: {}".format(prediction)) | |||

</pre> | |||

===Image Generation=== | |||

[[File:Myimage.gif|thumbnail]] | |||

jpeg.alexroidl.de | |||

Latest revision as of 23:07, 17 October 2018

References

Berger, J., 2008. Ways of Seeing, 01 edition. ed. Penguin Classics, London.

what we see is different from our words (we see before we speak)

seeing is dependent on knowledge

The camera changed how we see (also the old paintings)

They are in more than one place now, on your screen.

Image once belonged to a fixed place (like church)

- surrounding is part of the image

- now the image is coming to you

- the image travels

What is the original painting?

- The price (which depends upon it being genuine) makes it impressive / mysterious again

The uninterrupted silence / stillness of the image

How music / sound changes the meaning of images

- as soon as an image is transmittable its meaning is likely to be manipulated or transformed

These ideas are closely related to Walter Benjamin's concept of aura and his essay »The Work of Art in the Age of Mechanical Reproduction«.

>> The way we see is influenced by images and vice versa. As Walter Benjamin already explored, when the image changes, its cultural implications change too. Changes in image production can therefore have a major impact on culture. I see the same change happening to cultural production.

Bridle, J., 2018. New Dark Age: Technology and the End of the Future. Verso, London ; Brooklyn, NY.

In the book »New Dark Age« from 2018, James Bridle explains how technology has influenced our lives so far and how it will change our future.

Bridle explains how technology has transformed while our understanding of it has not. He states that we are so deeply enmeshed in our technological systems that it is not possible for us to think outside of them. This puts us in a rather complicated and weird position.

Using the example of weather forecasting, he investigates how technology tries to represent reality and how it fails: since weather forecasting began, we have gathered more and more data, built ever-growing models of our world and invented better algorithms. But these models are starting to fail. This becomes obvious when looking at weather predictions, which are becoming ever worse due to climate change. From big data, humans try to create the perfect model of the world. This makes us believe that we know ever more about our world, but the opposite is the case. We look only at the models and forget to look at the real world. Furthermore, Bridle states that these models are overwhelming and demoralising due to their complexity.

This is how, according to Bridle, we might enter a new dark age, where we start to know less and less about the world, which is not necessarily bad. Next Bridle talks about biased algorithms, with the example of software that is used in American courts to calculate sentences. We believe that software is neutral and that it leads us to better decisions about the world. But that isn’t the case: these systems are biased. The court software, for instance, was based on historical data, which is itself biased, and so is the software. People found that this algorithm favoured white people and gave them shorter sentences, while people of colour received much worse predictions.

What we can learn from this, according to Bridle, is that a democratisation of these technologies is necessary, so that not only technicians with a very specific skill set are involved in building software. Bridle also talks about AI and how these advanced computational methods lead to new questions in the digital realm. He brings up another example that explains how computers work in the field of machine learning. Computing power has long been compared to that of the human mind. A well-known example is humans playing chess against computers. The best-known match might be Deep Blue versus Garry Kasparov: in 1997 Deep Blue became the first computer to defeat a world champion. The algorithm behind this game was quite understandable. The computer simply calculated many steps ahead and thereby »out-thought« Kasparov. In 2016 another match took place, »AlphaGo versus Lee Sedol«, this time in Go instead of chess, as Go was considered a barrier of intelligence that computers could not yet reach. The result: AlphaGo won decisively. The interesting difference between the matches of 1997 and 2016 is that the way these algorithms work has changed. While Deep Blue's decisions were easy to follow, AlphaGo is based on machine learning, learning from real-life examples and later playing repeatedly against itself. AlphaGo created a model, and we cannot understand how it made its decisions. For instance, it made a few moves that were stunning and new to the game of Go but turned out to be among the best moves ever played. So Bridle points out that computers are no longer only thinking ahead of us; they are starting to think completely differently from us.
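To make the idea of »calculating many steps ahead« concrete for myself, here is a minimal sketch of exhaustive game-tree lookahead. It is not Deep Blue's actual algorithm, which was far more elaborate; the toy game (take 1, 2 or 3 stones, whoever takes the last stone wins) and the function names are my own assumptions, chosen only because the whole tree can be searched.

```python
from functools import lru_cache

# Toy game (an assumption for illustration): players alternately take
# 1, 2 or 3 stones from a pile; whoever takes the last stone wins.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def can_win(stones):
    """Return True if the player to move can force a win."""
    # Look ahead: a position is winning if some legal move leaves
    # the opponent in a losing position. With 0 stones there is no
    # legal move, so the player to move has already lost.
    return any(not can_win(stones - m) for m in MOVES if m <= stones)


def best_move(stones):
    """Return a move that leaves the opponent losing, or None if there is none."""
    for m in MOVES:
        if m <= stones and not can_win(stones - m):
            return m
    return None


print(best_move(5))  # a winning move exists from 5 stones
print(best_move(4))  # no winning move exists from 4 stones
```

Every decision here can be traced by hand through the tree, which is the kind of legibility that a learned model like AlphaGo's no longer offers.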

Bridle underlines that it is not only a question for technicians but rather a political question of how we can use these machines in our service rather than against us. Bridle also describes the complexity of the technology that surrounds us. Before computers, we naturally understood how certain things worked; now we do not understand even parts of the simplest things that we use daily. The postal system is quite graspable for all of us, while only few might understand what happens when we send an e-mail. So Bridle asks: if we don’t know how these systems interconnect, how they work and who has power over them, how should we affect them?

In my opinion Bridle gives great insight into how technology interconnects with our lives, and he asks the pressing questions of our increasingly digitised world. While Bridle says that »learning to code might not be enough«, I think this sounds like an excuse for not looking deeper into questions regarding the structure of algorithms like machine learning. I think it is still important to trace back the fundamental structures of code, which are also connected to questions of language and politics. Bridle does not engage at this very zoomed-in level of code, but he does so socially and politically. When Bridle talks about the physicality of technology, I see a lot of connections to »A Prehistory of the Cloud« by Tung-Hui Hu, which seems a very complementary reading in these terms.

>> In his recent work Bridle shows the impact of technology and its political side. He formulates how algorithms influence our lives and how we are not able to understand the models that we build of the world. I find this lack of understanding an important starting point for my work.

The nightmare videos of childrens' YouTube — and what's wrong with the internet today | James Bridle (talk)

- What are the possibilities of these new weird technologies?

- Deep strangeness / lack of understanding

- Algorithmically driven culture

- Even if you are human you have to behave like a machine just to survive

- Autoplay is automatically on

> takes you from surprise egg video to masturbating Mickey Mouse > tags/titles/algorithms take you to strange places (endless loop)

>> This shows how algorithms can create their own weird system (it also reminds me of a work by Sebastian Schmieg, where he looped through Amazon recommendations).

Gerstner, K., 1964. Designing Programmes.

The book »Designing Programmes« by Karl Gerstner was published in 1964. Gerstner proposes a different framework for problem solving.

Instead of solving a problem for a single case, he thinks of programs that solve a problem for a variable number of cases. He gives multiple definitions of the »program« and describes how it generates various outcomes depending on its algorithm.

»To describe the problem is part of the solution« (p. 20). In what follows he describes a series of works (specifically logos) that follow a very simple system. He traces the idea of algorithmic design back to old cathedrals, where the decoration is based on »an exact program of constants and variants«. All the windows are therefore different and full of variation while maintaining the original style. Next to the image of the windows, Gerstner tries to describe this program in his own words (in written language). He does so with all the following examples. In the chapter »Integral Typography« Gerstner analyses type as a mathematical system that can be described by programs.

He explains the possibilities of creating a very complex system based on simple rules, using the example of the simple division of a square. With a form as simple as this, it is possible to use the intersections, lines, surfaces, diagonals etc. to build generative patterns.

He takes existing design principles, like the grid, and describes them as a program in itself, generating a very complex, transformable grid which he calls the »mobile grid«. Next Gerstner brings up the concept of programs as literature, where he generates poems based on very simple rules. The technique he uses is not based on a real algorithm as we know it. It is not executed by a computer but by a human, who selects from a list of »commands« (in a very OuLiPo-ian way). The same thing can be done with music or photo collages, as Gerstner describes. Furthermore, he dives into 3D applications, also for typography, and last but not least he relates his programmatic idea to the selection of colours, creating colour systems.
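The idea of a program of »constants and variants« can be sketched in a few lines. This is only my own illustration, not Gerstner's method: the sentence template and the word lists are hypothetical, and unlike Gerstner's human-executed programs this one is run by the computer and simply enumerates every combination.

```python
from itertools import product

# Hypothetical material: one constant (the sentence frame) and three
# lists of variants. None of this is taken from Gerstner's book.
CONSTANT = "the {adjective} {noun} {verb}"
VARIANTS = {
    "adjective": ["silent", "mobile"],
    "noun": ["grid", "square", "letter"],
    "verb": ["turns", "repeats"],
}


def run_programme(template, variants):
    """Return every line the rule set can produce, in a fixed order."""
    keys = list(variants)
    lines = []
    # product() walks through all combinations of one word per list.
    for combo in product(*(variants[k] for k in keys)):
        lines.append(template.format(**dict(zip(keys, combo))))
    return lines


poems = run_programme(CONSTANT, VARIANTS)
print(len(poems), "lines, for example:", poems[0])
```

Three short lists already yield twelve distinct lines, which shows how quickly simple rules multiply into variation.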

I think that what Gerstner describes is a very interesting approach to seeing the world as a program, but one might also wonder: is the world always reducible to a simple program? This is also something that James Bridle criticises in his recent book »New Dark Age«. We only try to describe reality with algorithms; we create models of the world while forgetting to look at the real world. Despite this, the programmatic approach that we see in Gerstner's work or in the OuLiPo group is a way to find new interpretations or representations of traditional matter, like literature, music or art.

>> Creating form through algorithms is an important aspect of Gerstner's work, and it is what I plan for my thesis. His is an early approach to understanding algorithms as a tool to describe visual forms.

Flusser, V., 2011. Into the Universe of Technical Images, 1 edition. ed. Univ Of Minnesota Press, Minneapolis.

The technical image is very different from the traditional image. The image is embedded in a cultural context, especially what Flusser considers the traditional image, which is mostly composed of symbols. These symbols need to be deciphered in order to understand the meaning of the image. Flusser claims that humans abstract more and more. He lists five steps of cultural history that lead to the technical image in the end. This also explains the difference between what Flusser calls the traditional and the technical image. First: animals and primitive people embedded in a four-dimensional time-space continuum. »It is the level of concrete experience.« (p. 6) Second: the humans that preceded us. The human interacts with objects and shapes them.

Third: the human who is able to imagine and to translate this into an »imaginary, two-dimensional mediation zone« (p. 6): the traditional image (cave paintings). Fourth: the stage of »understanding and explanation, the historical level«, based on the invention of linear text. Fifth: »This is the level of calculation and computation, the level of technical images«. Compared to the traditional image, the technical image is no longer based on imagination but on the automation of an apparatus. Flusser explains this cultural history as an ongoing abstraction, an alienation of the human from the concrete.

Going through the steps above, Flusser underlines a loss of dimensions. While the first step was still placed in a four-dimensional space, the second already reduces that reality to a three-dimensional one, that of the object. The third takes place in a two-dimensional image and the fourth is reduced to a one-dimensional line of text. Now, in the last step, we find ourselves in a zero-dimensional representation of reality that is based on points (the pixel): particles that form swarms.

Flusser disagrees with the idea that a photograph represents its photographed object in an unchanged way. The world is becoming too abstract and thus unlivable. »To live, one must try to make the universe and consciousness concrete.« (p. 15)

»a technical image is: a blindly realized possibility, something invisible that has blindly become visible.« (p. 16)

»So the basis for the emerging universe and emerging consciousness is the calculation of probability.« (p.17)

He formulates the idea of the apparatus and the human as part of its functions »That is to say, then, that not only the gesture but also the intention of the photographer is a function of the apparatus.« (p. 20)

The technical image is a collaboration between apparatus and human being. The technical image needs to be programmed and then deprogrammed. Its task is to turn particles into a surface (although it is not a real surface, only a raster / simulation of one). So the meaning of the technical image is radically different from that of the traditional image.

Next he is talking about keys (the instruments that influence the particles, that make them graspable again)

>> This text is useful for a basic understanding of the technical images that surround us. Flusser examines carefully what it means that images change their function and representation and even understanding.

Gere, C., 2008. Digital Culture, 2nd revised edition. Reaktion Books, London.

»Digital Culture« by Charlie Gere investigates how the culture of digitisation turned into digital culture. He describes the history of computation and how Turing conceived general-purpose computing. War and post-war development pushed the computer further, starting from the cryptographic needs of the war. This made it possible for new technology to arise rapidly.

Müller, A.C., Guido, S., 2016. Introduction to Machine Learning with Python: A Guide for Data Scientists, 1 edition. ed. O’Reilly Media, Sebastopol, CA.

A technical / practical guide to using data and machine learning algorithms

- Supervised vs. unsupervised learning

- Supervised = input and output are given

- Unsupervised = only input is given

Features

- The describing values of training data

Overfitting / Underfitting

- Overfitting = model too complex for the data (e.g. too many features)

- Underfitting = model too simple for the data (e.g. too few features)

- always in relation to the size of the training set

Generalisation

- How good is the model dealing with data outside of the training set?

Evaluation of the model

- How do you know if the model works? The data is split into training and test data; the accuracy on the test data is used as an indicator of the performance of the model.
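The split into training and test data can be sketched without any library. This is a rough illustration under my own assumptions, not code from the book: the data is made up, the »model« is just a single threshold, and all function names are hypothetical.

```python
import random


def split_train_test(samples, test_fraction=0.25, seed=0):
    """Shuffle the samples and split them into training and test lists."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_threshold(train):
    """'Train' a one-feature model: the midpoint between the two class means."""
    small = [x for x, label in train if label == "small"]
    large = [x for x, label in train if label == "large"]
    return (sum(small) / len(small) + sum(large) / len(large)) / 2


def accuracy(threshold, data):
    """Fraction of samples whose predicted label matches the true label."""
    hits = sum(("large" if x > threshold else "small") == label
               for x, label in data)
    return hits / len(data)


# Made-up data: values below 10 are "small", values from 10 up are "large".
samples = [(x, "small" if x < 10 else "large") for x in range(20)]
train, test = split_train_test(samples)
threshold = fit_threshold(train)
print("test accuracy:", accuracy(threshold, test))
```

The model never sees the test samples during fitting, so the printed accuracy says something about generalisation rather than memorisation.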

K Nearest Neighbour

K = number of neighbours (e.g. 3 points)
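As a note to myself, k-nearest-neighbour classification boils down to a distance sort and a majority vote. The sketch below uses made-up 2D points and a hypothetical function name; it needs Python 3.8 or newer for math.dist and is not how scikit-learn implements it internally.

```python
from collections import Counter
import math


def knn_predict(train_points, train_labels, query, k=3):
    """Label a query point by majority vote among its k nearest training points."""
    # Pair every training point's distance to the query with its label,
    # then sort so the closest points come first.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Made-up 2D points: one cluster near the origin, one near (5, 5).
points = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["rect", "rect", "rect", "circle", "circle", "circle"]

print(knn_predict(points, labels, (0.5, 0.5)))
print(knn_predict(points, labels, (5.5, 5.5)))
```

An odd k avoids tied votes when there are only two classes.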

K Means

Calculating border between points

>> This is, I guess, a very unconventional academic reading, as it is more of a tutorial book for machine learning algorithms. But it is still very helpful for understanding what is going on under the hood of these new algorithms. It also offers some interesting terminology that would be worth relating to how we talk about images and machine learning: features in images, models, hidden layers, neural network, deep dream, deep learning, generalization, underfitting, overfitting…

Lev Manovich: Database as symbolic Form

In the article »Database as symbolic Form« Lev Manovich describes the attributes of databases as a new way to perceive and structure the world and he explains its relation to the narrative.

He begins his article with the definition of what a database is. According to him it is a structured collection of data. There are many different types, for instance hierarchical, network, relational and object-oriented databases, and they vary in how data is stored in them. To illustrate the current state of databases he brings forward the example of a virtual museum that has a database as a backend. You would be able to view, search or sort this data, for example by date, by artist, by country or by any other conceivable metadata. This leads him to the conclusion that a database can be read in multiple ways. Manovich elaborates further on this by comparing the database with the narrative as we know it from books or the cinema: »Many new media objects do not tell stories; they don’t have a beginning or an end; in fact, they don’t have any development, thematically, formally or otherwise, which would organize their elements into a sequence. Instead, they are collections of individual items, where every item has the same significance as any other.« A database is a new way to structure the world and our experience of it. He also names webpages, which are hierarchically structured via tags, as a form of database containing links, images, text or video. With this example the dynamic aspect of a database becomes visible, meaning that you can add, edit or delete any element at any time. This also means that a website is never complete.
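The virtual museum example can be sketched as a list of records that is read in several ways. The records, field names and function names below are hypothetical placeholders of mine, not taken from Manovich's article.

```python
# Hypothetical catalogue records for a virtual museum.
collection = [
    {"title": "Work A", "artist": "Artist X", "country": "NL", "year": 1964},
    {"title": "Work B", "artist": "Artist Y", "country": "DE", "year": 1936},
    {"title": "Work C", "artist": "Artist X", "country": "NL", "year": 2001},
]


def read_by(records, key):
    """Return the titles in the order given by one metadata field."""
    return [r["title"] for r in sorted(records, key=lambda r: r[key])]


def search(records, **criteria):
    """Return the titles of records matching all given field values."""
    return [r["title"] for r in records
            if all(r[field] == value for field, value in criteria.items())]


by_year = read_by(collection, "year")      # one possible reading
by_artist = read_by(collection, "artist")  # another reading of the same items
dutch = search(collection, country="NL")   # a filtered view
print(by_year, by_artist, dutch)
```

The same three items produce three different sequences, none of which is privileged. Each sequence is imposed by the query and not stored in the data.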

Furthermore, Manovich examines the differences between narrative and database. The database »represents the world as a list of items«, while the narrative »creates a cause-and-effect trajectory of seemingly unordered items«. He sees the database as the enemy of the narrative. As both claim to be the exclusive way of explaining the world, they are not able to co-exist. But he also sees some similarities and intersections between the two. In computer games, for instance, you follow a certain narrative, although it is based on a database. This is what he later calls the interactive narrative. He also shows examples of databases from before the time of new media, for example in books like photo albums or encyclopedias.

Manovich also takes into account the interface through which we perceive the data that lies beneath. This interface can reveal the database in different forms, creating unique narratives for each user. In the last part of the text Manovich makes connections between art, the cinema and database logic. A movie editor, for instance, selects material like a computer user would from a database and creates one fixed narrative with it.

In conclusion, Manovich discusses two very significant aspects of how the world is represented in new media: first, the interface that lies on top, and second, the database that is behind it. His comparison with the narrative is crucial for understanding how new media change our perception of information, which eventually creates the world as we know it.

>> This was a very important reading for me to understand how databases and narratives connect, and how forms of representation / storage change the way we perceive the world.

Hu, T.-H., 2016. A Prehistory of the Cloud, Reprint edition. ed. The MIT Press, Cambridge, Massachusetts.

(Chapter 1 – 3)

In »A Prehistory of the Cloud« Tung-Hui Hu investigates network structures and how they influence our lives. The book reveals several layers of cloud computing: the infrastructure of the network, the visualisation, the data storage and big data / data mining.

Hu traces the physicality of the web by pointing at the cables that connect the internet, running along old train tracks in America, and at data centres that used to be military buildings. He deciphers »the Cloud« not only as a metaphor but also as a representation of the madness to collect data and to connect: the Cloud as a symptom of paranoia. Hu dates the start of this excess of connection back to the Cold War. People started to think about decentralised ways of interconnectedness, as they noticed that the net tends to be monopolised. Artists such as the Ant Farm collective also worked on this issue.

Furthermore, Hu thinks about the concept of time-sharing. Before 1960 it was impossible to own a »personal« computer. The idea was first implemented as a simulation, in which you shared one machine (and therefore also time and processing power) with multiple others, while the computer reacted in real time by switching between processes. Hu points out how this concept translated »virtually« into cloud computing. The idea of individual users who don’t cross each other's paths is part of the cloud concept. Furthermore, Hu tries to understand the immediate need to outsource data and the associated risk of losing data and control.
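The switching between processes that creates the illusion of a personal machine can be sketched as a round-robin loop. This is a simplified illustration of my own, not a description of any historical system; the job names and function names are hypothetical.

```python
from collections import deque


def job(name, steps):
    """A user's job: yields after each unit of work so that others can run."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def time_share(jobs):
    """Run the jobs round-robin, one step per turn, and return the log."""
    queue = deque(jobs)
    log = []
    while queue:
        current = queue.popleft()
        try:
            log.append(next(current))
            queue.append(current)  # not finished: back of the queue
        except StopIteration:
            pass  # finished: drop the job
    return log


log = time_share([job("alice", 2), job("bob", 3)])
print(log)
```

Each user sees only their own steps advancing, although the single machine is interleaving everyone's work.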

I think it is quite interesting how Hu brings together the vague imagination of the cloud and its physical manifestation. It reveals the threat of getting caught in a system where we are programmed to become »the user« and have lost every sense of our data, while trying to find better algorithms to resolve problems that may no longer be resolvable programmatically. Solutions may rather have to be sought in the real world.

»time-sharing was part of a larger and more fundamental economic shift away from waged labor and toward what Maurizio Lazzarato terms the economy of “immaterial labor”« (p. 39)

https://www.nytimes.com/2018/03/06/technology/google-artificial-intelligence.html

How researchers try to make the decisions of AI algorithms understandable

Decisions made by AI are complex to understand

Researchers at Google try to reveal the layers by visualising them

They don’t include anyone outside > only trying to make better algorithms for more complex problems

>> Interesting example of trying to build another algorithm to fix the old one … funny reading … but also ……… google

Model

Learning Machine Learning

# Classify images of simple shapes (rectangles vs. circles) with k-nearest neighbours.
import os

import numpy as np
from PIL import Image
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier


def list_files(directory, extension):
    # Sorted, so that the order of the files is reproducible.
    return sorted(f for f in os.listdir(directory) if f.endswith('.' + extension))


def image_to_array(path):
    # Convert the image to greyscale ("L") and flatten it to one row of pixel values.
    return np.array(Image.open(path).convert("L")).reshape(-1)


directory = '/Users/alexroidl/xpub/introtomachinelearning/images'
files = list_files(directory, "jpg")

# All images must have the same dimensions so that the rows have equal length.
arr_data = np.array([image_to_array(os.path.join(directory, f)) for f in files])

# Labels: assumes 20 images whose sorted order is ten rectangles, then ten circles.
arr_target = np.array(["rect"] * 10 + ["circle"] * 10)

# The new image to classify, as a single-row 2D array.
arr_new = image_to_array("target.jpg").reshape(1, -1)

x_train, x_test, y_train, y_test = train_test_split(
    arr_data, arr_target, random_state=0)

# n_neighbors: number of nearest training points consulted for each prediction
knn = KNeighborsClassifier(n_neighbors=2)
knn.fit(x_train, y_train)
print("Test accuracy: {:.2f}".format(knn.score(x_test, y_test)))

prediction = knn.predict(arr_new)
print("Prediction: {}".format(prediction))

Image Generation

jpeg.alexroidl.de