User:Manetta/media-objects/annotated-training-datasets

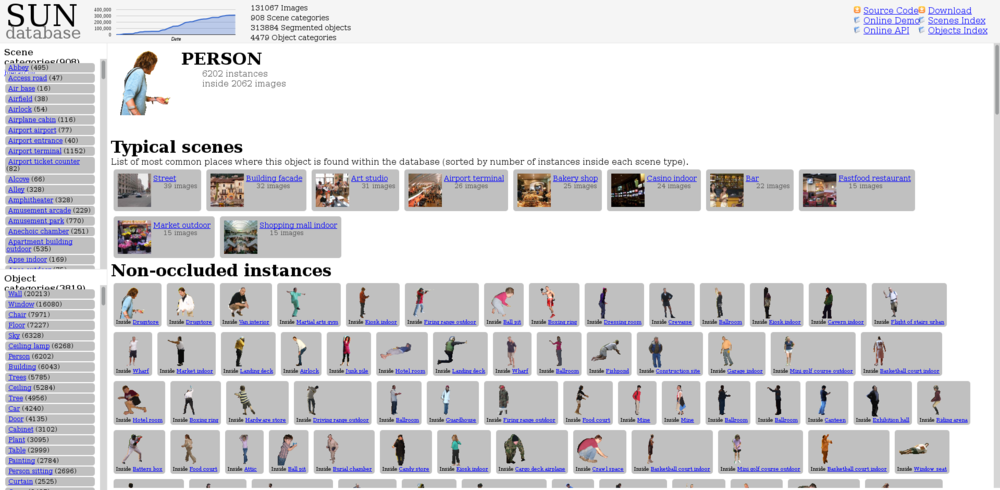

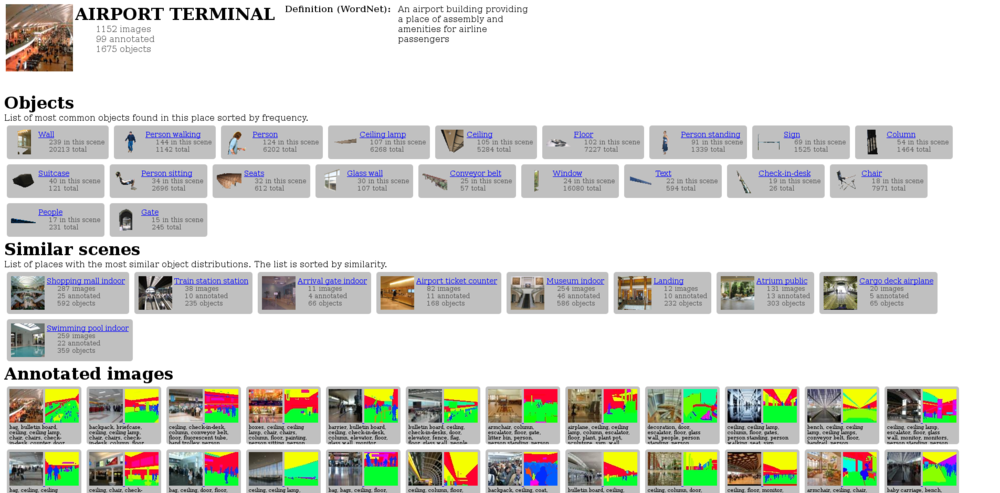

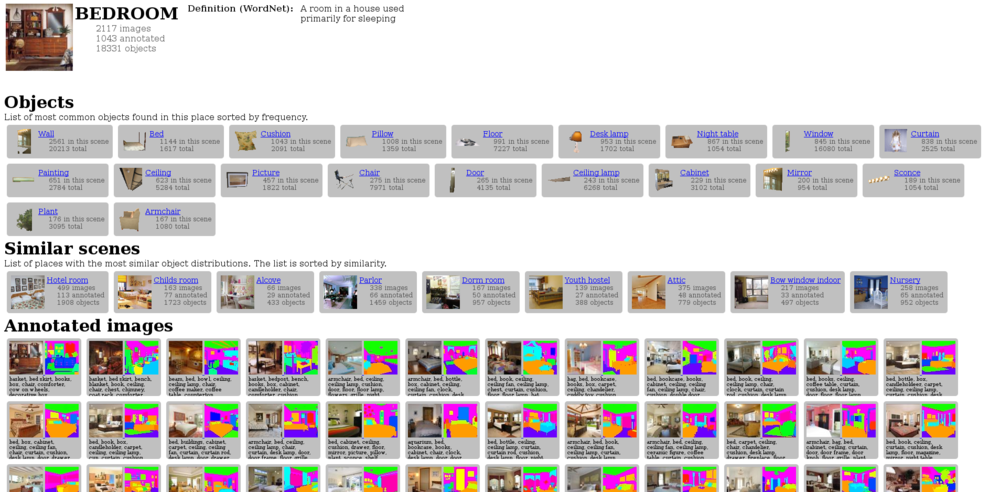

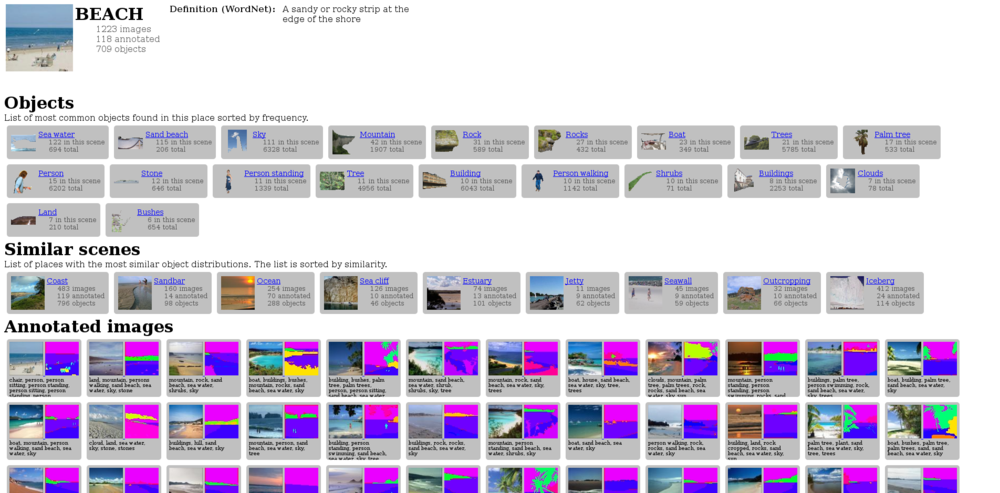

Latest revision as of 15:00, 31 May 2015

from algopop (http://algopop.tumblr.com/), a tumblr by Matthew Plummer-Fernandez

SUN database

link to the SUN database: http://groups.csail.mit.edu/vision/SUN/

"The goal of the SUN database project is to provide researchers in computer vision, human perception, cognition and neuroscience, machine learning and data mining, computer graphics and robotics, with a comprehensive collection of annotated images covering a large variety of environmental scenes, places and the objects within."

visual dataset

of annotated images

- scenes

- objects

that are used to train

an image recognition algorithm

to function as a universal (visual) language

problematic:

- universal (visual) language

--> problems are revealed in .dictionary file

- classification & taxonomies/ontologies

- moment between two truth-systems

--> while making these datasets, the question of classification & taxonomy arises again for researchers to reconsider (again)

--> reminds me of the speculative realism focus on a non-human-centred classification of 'things'

--> (but does that apply to this dataset? does it have another center, how to name that center?)

--> as machines get naturalized more and more, and these pieces of software are trying to come closer and closer to humans, the action of the software is performed within the computer... and so the world is perceived from out of that computer system... firstly applied onto digital images...

--> this dataset could be an alternative classification of 'things'...

--> possible to speculate about the consequences of such categorization?

--> as the software is only looking at existing examples, it would not be able to recognize any 'new' and 'unknown' objects

SUN database abstract:

"Scene categorization is a fundamental problem in computer vision. However, scene understanding research has been constrained by the limited scope of currently-used databases which do not capture the full variety of scene categories. Whereas standard databases for object categorization contain hundreds of different classes of objects, the largest available dataset of scene categories contains only 15 classes. In this paper we propose the extensive Scene UNderstanding (SUN) database that contains 899 categories and 130,519 images. We use 397 well-sampled categories to evaluate numerous state-of-the-art algorithms for scene recognition and establish new bounds of performance. We measure human scene classification performance on the SUN database and compare this with computational methods."

Images are annotated with words (using the WordNet lexicon), which creates a certain truth for the recognition algorithm. The images are the basis for a 'representational learning' system, which means that the system is trained by scanning annotated example images and comparing them with each other.
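A minimal sketch of what 'trained by comparing annotated examples' can mean, and of why such a system cannot name anything 'new'. It uses a nearest-neighbour lookup as a simple stand-in, not the algorithms the SUN project actually evaluates. The feature vectors, `TRAINING` and `classify` are made up for illustration.

```python
from math import dist

# Hypothetical training set: (feature vector, annotation) pairs.
# The numbers are invented stand-ins for features extracted from an image.
TRAINING = [
    ((0.9, 0.1), "beach"),
    ((0.8, 0.2), "beach"),
    ((0.1, 0.9), "bedroom"),
    ((0.2, 0.8), "bedroom"),
]


def classify(features):
    """Return the annotation of the closest annotated example."""
    _, label = min(TRAINING, key=lambda pair: dist(pair[0], features))
    return label


known_labels = {label for _, label in TRAINING}

# An input unlike any example still receives one of the known labels:
# the annotated collection defines everything the system is able to 'see'.
unseen = (5.0, 3.0)
print(classify(unseen) in known_labels)
```

Whatever is shown to `classify`, the answer is always a word that an annotator already chose.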

SUN database structure

The SUN database (of Princeton University) annotates two types of images: scenes and objects. Objects are always presented with the name of the scene they appeared in.

The objects that appear most often are:

- wall (20213)

- window (16080)

- chair (7971)

- floor (7227)

- sky (6328)

- ceiling lamp (6268)

- person (6202)

- building (6043)

- trees (5785)

The scenes that appear most often are:

- living room (2385)

- bedroom (2117)

- kitchen (1755)

- beach (1223)

- dining room (1187)

- airport terminal (1152)

- castle (1126)

- church outdoor (1058)

- house (972)

- bathroom (956)

- playground (909)

- conference room (872)
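Counts like the ones above could be produced by tallying the annotation labels. The sketch below assumes a hypothetical record layout (a scene name plus the list of objects labelled in that image). The records are invented and far smaller than the real dataset.

```python
from collections import Counter

# Hypothetical annotation records: (scene name, objects labelled in the image).
annotations = [
    ("living room", ["wall", "window", "chair", "floor"]),
    ("bedroom", ["wall", "window", "ceiling lamp"]),
    ("living room", ["wall", "chair", "person"]),
    ("beach", ["sky", "person"]),
]

# How often each scene name and each object label was used.
scene_counts = Counter(scene for scene, _ in annotations)
object_counts = Counter(obj for _, objects in annotations for obj in objects)

for name, count in object_counts.most_common(3):
    print(f"{name} ({count})")
```

The ranking that comes out is a property of what was collected and labelled, which is where the 'hierarchy of importance' of the dataset starts.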

The scenes are structured in a 3-level hierarchy tree. The two highest levels contain the following hierarchies:

- indoor
  * shopping and dining
  * workplace (office building, factory, lab, etc.)
  * home or hotel
  * transportation (vehicle interiors, stations, etc.)
  * sports and leisure
  * cultural (art, education, religion, military, law, politics, etc.)

- outdoor, natural
  * water, ice, snow
  * mountains, hills, desert, sky
  * forest, field, jungle
  * man-made elements

- outdoor, man-made
  * transportation (roads, parking, bridges, boats, airports, etc.)
  * cultural or historical building/place (military, religious)
  * sports fields, parks, leisure spaces
  * industrial and construction
  * houses, cabins, gardens, and farms
  * commercial buildings, shops, markets, cities, and towns
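The two levels listed above can be written down as a small data structure, which makes it easy to ask where a category sits in the tree. This is only a sketch: the parenthetical examples are dropped from the names, and `HIERARCHY` and `parents_of` are my own hypothetical names, not part of the SUN files.

```python
# The two highest levels of the scene hierarchy, as listed on this page.
HIERARCHY = {
    "indoor": [
        "shopping and dining",
        "workplace",
        "home or hotel",
        "transportation",
        "sports and leisure",
        "cultural",
    ],
    "outdoor, natural": [
        "water, ice, snow",
        "mountains, hills, desert, sky",
        "forest, field, jungle",
        "man-made elements",
    ],
    "outdoor, man-made": [
        "transportation",
        "cultural or historical building/place",
        "sports fields, parks, leisure spaces",
        "industrial and construction",
        "houses, cabins, gardens, and farms",
        "commercial buildings, shops, markets, cities, and towns",
    ],
}


def parents_of(second_level):
    """Return every top-level branch that contains the given category."""
    return [top for top, children in HIERARCHY.items() if second_level in children]


print(parents_of("transportation"))
```

A name like 'transportation' appears under more than one branch, so the same word means something different depending on where it sits in the tree.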

The SUN research project trains algorithms with the dataset, and their first results show the following accuracy per scene category (http://vision.princeton.edu/projects/2010/SUN/classification397.html):

- riding_arena → 94%

- sauna → 94%

- sky → 92%

- wave → 90%

- car_interior/frontseat → 88%

- pagoda → 88%

- volleyball_court/indoor → 86%

- tennis_court/indoor → 86%

- underwater/coral_reef → 84%

- bow_window/outdoor → 82%

- cockpit → 80%

- limousine_interior → 80%

- rock_arch → 80%

- squash_court → 80%

- florist_shop/indoor → 78%

- pantry → 78%

- ocean → 76%

- skatepark → 76%

- electrical_substation → 74%

- oast_house → 74%

- oilrig → 74%

- parking_garage/indoor → 74%

- podium/outdoor → 74%

- subway_interior → 74%
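A percentage per category is usually the share of test images in that category for which the predicted label matches the annotated one. The sketch below assumes that definition; I have not checked how the SUN project computes its numbers. The predictions and the function name `per_category_accuracy` are invented for illustration.

```python
from collections import defaultdict


def per_category_accuracy(results):
    """results: iterable of (annotated_label, predicted_label) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for annotated, predicted in results:
        total[annotated] += 1
        if annotated == predicted:
            correct[annotated] += 1
    # Accuracy is measured against the annotation, not against the world.
    return {label: correct[label] / total[label] for label in total}


# Made-up predictions for two categories.
results = [
    ("sauna", "sauna"),
    ("sauna", "sauna"),
    ("sauna", "bathroom"),
    ("sauna", "sauna"),
    ("ocean", "ocean"),
    ("ocean", "wave"),
]

accuracy = per_category_accuracy(results)
for label, value in accuracy.items():
    print(f"{label}: {value:.0%}")
```

'Correct' here only means 'agrees with the annotator', so the score inherits whatever the annotation treats as true.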

This organization and structuring of objects and scenes is an attempt to create a universal (visual) language that could enable algorithms to recognize any depicted scene. Algorithms that use this dataset will base their results on a 'truth' that is defined by this collection of images. The hierarchy tree and the most commonly appearing scenes and objects create a hierarchy of importance within the dataset, which influences the 'truth' of any outcome. As the outcome of a recognition algorithm would also be considered true, the act of annotating the image collection is a moment in between two 'truth systems' (as Femke Snelting phrased it during Cqrrelations 2015).

The scenes are accompanied by a dictionary file, in which annotators also comment on their classification process. For example:

a — abbey --> a Christian monastery or convent under the government of an Abbot or an Abbess

'We should think about combining nunnery, monastery, priory, and abbey (all abbeys and priories are either nunneries or monasteries, and I'm not sure that those latter two are visually distinct)'

These comments show the doubts and to-do lists that came up during the annotation process. This brought me to the idea of making an encyclopedia of consideration: listing and publishing the comments of the annotators.
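A first sketch of how such a listing could be extracted. The layout of the dictionary file is assumed here, not verified: one line per entry in the form 'name --> definition', optionally followed by a comment line wrapped in single quotes. `SAMPLE` reuses the abbey entry quoted above and adds one hypothetical entry without a comment. `collect_considerations` is my own name.

```python
# Assumed layout, modelled on the abbey example quoted on this page.
SAMPLE = """\
abbey --> a Christian monastery or convent under the government of an Abbot or an Abbess
'We should think about combining nunnery, monastery, priory, and abbey (all abbeys and priories are either nunneries or monasteries, and I'm not sure that those latter two are visually distinct)'
beach --> (hypothetical entry without an annotator comment)
"""


def collect_considerations(text):
    """Map each entry name to the annotator comments that follow it."""
    considerations = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if "-->" in line:
            # Entry line: remember which scene the next comments belong to.
            current = line.split("-->", 1)[0].strip()
        elif current and len(line) > 1 and line.startswith("'") and line.endswith("'"):
            # Comment line: strip the surrounding quotes and file it.
            considerations.setdefault(current, []).append(line[1:-1])
    return considerations


considerations = collect_considerations(SAMPLE)
for name, comments in considerations.items():
    for comment in comments:
        print(f"{name}: {comment}")
```

Only entries that carry a comment end up in the result, so the output is a list of the moments where the annotators hesitated.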