User:Ruben/Prototyping/Sound and Voice

A project using voice recognition ([http://cmusphinx.sourceforge.net/ Pocketsphinx]) with Python. <ref name='tutorial'>https://mattze96.safe-ws.de/blog/?p=640</ref>

This script has undergone many iterations.

[[File:VoiceDetection1.png|200px|thumbnail|right|Ugly graph of the second version]]

The first version merely extracted the spoken segments.
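
A minimal sketch of that extraction step, assuming the recognizer's output has already been turned into hypothetical <code>(word, start_s, end_s)</code> tuples in seconds. The filler test (tokens such as <code>&lt;sil&gt;</code> or <code>[NOISE]</code>), the <code>max_gap</code> threshold and all function names are assumptions for illustration. Cutting with ffmpeg is also an assumption, since the page does not say which tool the first version used; the commands are only built here, not run.

```python
# Sketch: merge word-level recognizer output into spans of continuous speech
# and build ffmpeg commands that would cut those spans out of the film.
# The input format and every name here are hypothetical.


def is_filler(word: str) -> bool:
    """Assumed silence/noise markers such as "<sil>" or "[NOISE]"."""
    return word.startswith(("<", "[", "+"))


def speech_intervals(segments, max_gap=0.5):
    """Merge (word, start_s, end_s) tuples into (start, end) speech spans.

    Words separated by at most `max_gap` seconds end up in the same span.
    """
    spans = []
    for word, start, end in sorted(segments, key=lambda seg: seg[1]):
        if is_filler(word):
            continue
        if spans and start - spans[-1][1] <= max_gap:
            spans[-1][1] = max(spans[-1][1], end)  # extend the current span
        else:
            spans.append([start, end])  # gap too long: start a new span
    return [tuple(span) for span in spans]


def clip_commands(spans, source):
    """One ffmpeg argument list per span; nothing is executed here."""
    return [
        ["ffmpeg", "-i", source, "-ss", f"{start:.3f}", "-to", f"{end:.3f}",
         f"clip_{i:03d}.mp4"]
        for i, (start, end) in enumerate(spans)
    ]


if __name__ == "__main__":
    words = [("hello", 1.0, 1.4), ("<sil>", 1.4, 2.0),
             ("world", 1.5, 1.9), ("again", 3.0, 3.5)]
    spans = speech_intervals(words)
    print(spans)  # [(1.0, 1.9), (3.0, 3.5)]
    for cmd in clip_commands(spans, "film.mp4"):
        print(" ".join(cmd))
```

Each argument list could be passed to <code>subprocess.run(cmd, check=True)</code> to actually produce the clips. Merging neighbouring words avoids cutting the film into one tiny clip per word.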

The second version created an ugly graph showing how much was spoken in each part of a film (according to the speech recognition, which often detects speech that is not there).
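
The data behind such a graph can be sketched as "seconds of speech per time bucket". This assumes speech has already been reduced to <code>(start, end)</code> intervals in seconds; the one-minute bucket size, the text bar chart and the function names are hypothetical stand-ins, since the page does not say how the original graph was drawn.

```python
import math


def speech_per_bucket(intervals, duration, bucket_s=60.0):
    """Seconds of detected speech in each consecutive `bucket_s` window."""
    n_buckets = math.ceil(duration / bucket_s)
    totals = [0.0] * n_buckets
    for start, end in intervals:
        first = int(start // bucket_s)
        last = min(int(end // bucket_s), n_buckets - 1)
        # An interval can straddle bucket borders, so split it up.
        for i in range(first, last + 1):
            overlap = min(end, (i + 1) * bucket_s) - max(start, i * bucket_s)
            if overlap > 0:
                totals[i] += overlap
    return totals


def ascii_graph(totals, bucket_s=60.0, width=40):
    """Text bar chart: a full-width bar means speech during the whole bucket."""
    rows = []
    for i, seconds in enumerate(totals):
        bar = "#" * round(width * seconds / bucket_s)
        rows.append(f"{i * bucket_s / 60:6.1f} min |{bar}")
    return "\n".join(rows)


if __name__ == "__main__":
    speech = [(30.0, 90.0), (100.0, 110.0)]
    totals = speech_per_bucket(speech, duration=180.0)
    print(totals)  # [30.0, 40.0, 0.0]
    print(ascii_graph(totals))
```

Because false detections simply add seconds to a bucket, the graph inherits the recognizer's tendency to "hear" speech that is not there.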

A third version used Pocketsphinx to detect speech. It then used ffmpeg and ImageMagick to extract frames from the film and append them into a single image. Wherever there is speech, this image is overlaid with a black gradient, so as to 'hide' the image.

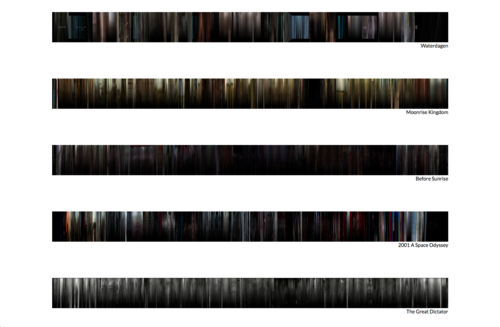

[[File:VoiceDetection2.png|500px|thumbnail|none|The third version]]
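
The overlay can be thought of as one opacity value per pixel column of the appended strip. The sketch below assumes one frame was extracted every <code>step_s</code> seconds and that each frame is <code>frame_w</code> pixels wide in the strip; all names and the box-blur fade are hypothetical, and the actual ffmpeg/ImageMagick calls are not reproduced. Columns whose timestamp falls inside a speech interval become fully black, and the blur turns the hard edges into a gradient.

```python
def overlay_alpha(intervals, n_frames, step_s, frame_w, fade_px=20):
    """Opacity (0..1) of the black overlay for every column of the strip.

    Assumes one frame was extracted every `step_s` seconds and that each
    frame occupies `frame_w` columns of the appended strip.
    """
    width = n_frames * frame_w
    px_per_s = frame_w / step_s
    # 1.0 where the column's timestamp falls inside a speech interval.
    hard = []
    for x in range(width):
        t = (x + 0.5) / px_per_s  # time at the centre of column x
        hard.append(1.0 if any(s <= t < e for s, e in intervals) else 0.0)
    # A moving average (box blur) turns the hard edges into a gradient.
    half = fade_px // 2
    alpha = []
    for x in range(width):
        lo, hi = max(0, x - half), min(width, x + half + 1)
        alpha.append(sum(hard[lo:hi]) / (hi - lo))
    return alpha


def darken(pixel, a):
    """Blend an (r, g, b) pixel toward black with opacity `a`."""
    return tuple(round(c * (1 - a)) for c in pixel)


if __name__ == "__main__":
    alpha = overlay_alpha([(2.0, 4.0)], n_frames=3, step_s=2.0,
                          frame_w=10, fade_px=4)
    print([round(a, 1) for a in alpha])
    print(darken((200, 100, 50), 0.5))  # (100, 50, 25)
```

Applying <code>darken</code> to every pixel in column <code>x</code> with <code>alpha[x]</code> hides the frames during dialogue while silent stretches stay visible.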

The final version again used Pocketsphinx to extract the dialogue and ffmpeg to export the frames. It then counted all word occurrences and ordered them by frequency. Next, it calculated the average color of the frame at the moment each word was (approximately) heard. The result was rendered as a nested treemap using HTML and JavaScript:

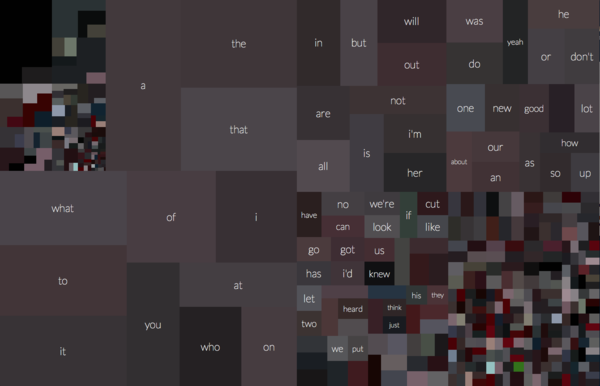

[[File:VoiceDetection3.png|600px|thumbnail|none|Nested treemap of word frequencies. The background color is the average color of the frame when the word occurred.]]
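
The data preparation for the treemap can be sketched as follows, again assuming hypothetical <code>(word, start_s, end_s)</code> tuples from the recognizer and frames exported at a known rate <code>fps</code>, each given as a list of <code>(r, g, b)</code> pixels. Using the midpoint of a word as "the moment it was heard" is an assumption, as are the filler rule and all function names. The HTML/JavaScript treemap rendering itself is not reproduced here.

```python
from collections import Counter


def is_filler(word):
    """Assumed silence/noise markers such as "<sil>" or "[NOISE]"."""
    return word.startswith(("<", "[", "+"))


def word_frequencies(segments):
    """(word, count) pairs, most frequent first; case-insensitive."""
    counts = Counter(w.lower() for w, _, _ in segments if not is_filler(w))
    return counts.most_common()


def average_color(pixels):
    """Mean (r, g, b) of a non-empty sequence of pixels."""
    n = len(pixels)
    return tuple(round(sum(p[i] for p in pixels) / n) for i in range(3))


def word_colors(segments, frames, fps):
    """Average color of the frame(s) shown while each word is heard.

    `frames[k]` is the pixel list of the k-th exported frame, assumed to be
    sampled at `fps` frames per second. The word's midpoint stands in for
    the (approximate) moment it is heard.
    """
    seen = {}
    for w, start, end in segments:
        if is_filler(w):
            continue
        k = min(round((start + end) / 2 * fps), len(frames) - 1)
        seen.setdefault(w.lower(), []).append(average_color(frames[k]))
    # A word spoken several times gets the mean of its per-frame colors.
    return {w: average_color(colors) for w, colors in seen.items()}
```

The frequency list supplies the rectangle sizes and <code>word_colors</code> the fill colors; both are plain data that a front end could load, for example after serializing them as JSON.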

<references></references>

Latest revision as of 00:12, 15 January 2015