Distortions on air


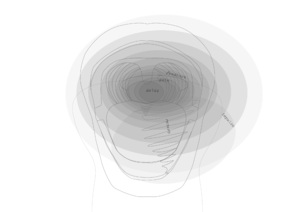

[[File:Imaginative diagram echo.png|thumb|An imaginative diagram explaining a web-audio distortion of vocal inputs online in the project Radio-active Monstrosities (https://radioactive.w-i-t-m.net/). The graphic visualizes an 'echo' audio mask. It was inspired by Lawrence Abu Hamdan's Code Switching drawing.]]

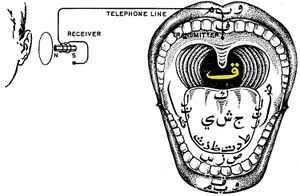

[[File:Codeswitching+image.jpg|thumb|Lawrence Abu Hamdan, Code Switching, 2014]]

Latest revision as of 11:54, 13 July 2021

The Distortions on Air will be an attempt to navigate through our current (past or future) thoughts, research threads and vocal memories via distorted voices and different languages. To do that we will explore the concepts of 'code-switching' and 'audio mask'.

'Code-switching' occurs when a speaker alternates between two or more languages in the context of a single conversation or situation. There are several reasons why somebody might use this technique. The 'audio mask' is a term borrowed from Laurie Anderson and refers to the electronic alteration of the voice. Similar to the theatrical mask, this audio mask served Anderson to transform her present voice into the recorded and polyphonic speech of a machine. She used it to transform into a man on stage.

We will visit different scripts of vocal distortions and reflect together on ideas that expand to the digital/online realm and datasets, through our collective or individual alter egos. We could alternate between the codes we use daily and coding languages or mother tongues, and move from less formal to more formal settings. The voice you choose may represent you or not, may be unknown to others, or may represent something you are against. It may be a voice that is rejected or misinterpreted but helps you speak to the public. You may also use a confusing and ambiguous language, or an accent that sounds annoying to others.

This vocal online dialogue will happen through the exchange of small voice samples that we will produce. Our voice data will be processed and reconsidered. What happens when voice samples separate from us and in what contexts do they travel? Are they accumulated together with others? Do they scatter or disappear?

https://radioactive.w-i-t-m.net/

notes of today: https://pad.xpub.nl/p/distortions_on_air

- ::::::..... ....... ....... ..D i s t o r t i o n s O n A i r. ... ..... .::::::::::::...... . >>> https://radioactive.w-i-t-m.net/

- <<< https://pzwiki.wdka.nl/mediadesign/Category:Implicancies .. .. ::::::....... ....Radio Implicancies - Special Issue #15. .... ... ... . ...... ..... ..::::......

Distortions as 'audio masks'

- Lowpass filter: gendered voice on air

- Lowpitch filter: from female to male

- Echo filter

- Human microphone

'damaged' voices | clips

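| https://eaiaiaiaoi.w-i-t-m.net/audio/667-clip-wartimeradio-male-distortion.mp3 | podcast (https://www.thisamericanlife.org/667): Ballout, D. (2019) ‘Good Morning, Kafranbel’, This American Life: Wartime Radio |

| https://eaiaiaiaoi.w-i-t-m.net/audio/podcast-shrill-clip-lowpass-voice.mp3 | podcast (https://www.wnycstudios.org/podcasts/otm/episodes/on-the-media-2019-11-22): Gladstone, B. and Garfield, B. (2019) ‘How Radio Makes Female Voices Sound “Shrill”’, The Disagreement Is The Point. On the Media |

| https://eaiaiaiaoi.w-i-t-m.net/audio/Vicki_Sparks_Commentator_BBC%20.mp3 | article (https://www.telegraph.co.uk/news/2018/06/25/jason-cundy-womens-voices-high-football-commentary/): Singh, A. (2018) ‘Jason Cundy: women’s voices are too high for football commentary’, The Telegraph, 25 June |

| https://eaiaiaiaoi.w-i-t-m.net/audio/Angela_Davis_Occupy_Wall%20.mp3 | article (https://thesocietypages.org/cyborgology/2011/10/06/mic-check-occupy-technology-the-amplified-voice): Moraine, S. (2011) '“Mic check!”: #occupy, technology & the amplified voice', The Society Pages, 6 October and video (https://www.youtube.com/watch?v=HlvfPizooII): Angela Davis Occupy Wall St @ Washington Sq Park Oct 30 2011 General Strike November 2 |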

online scripts (web-audio)

The scripts are made with the Web Audio API: https://developer.mozilla.org/en-US/docs/Web/API/Web_Audio_API

Lowpass

<!DOCTYPE html>

<html lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title></title>

</head>

<body>

<p><button id="btnStart">START RECORDING</button>

<button id="btnStop">STOP RECORDING</button>

</p>

<h2>Lowpass</h2>

<audio id="player" controls>

</audio><br>

<script>

let constraintObj = {

audio: true,

video: false

};

//handle older browsers that might implement getUserMedia in some way

if (navigator.mediaDevices === undefined) {

navigator.mediaDevices = {};

navigator.mediaDevices.getUserMedia = function(constraintObj) {

let getUserMedia = navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

if (!getUserMedia) {

return Promise.reject(new Error('getUserMedia is not implemented in this browser'));

}

return new Promise(function(resolve, reject) {

getUserMedia.call(navigator, constraintObj, resolve, reject);

});

}

} else {

navigator.mediaDevices.enumerateDevices()

.then(devices => {

devices.forEach(device => {

console.log(device.kind.toUpperCase(), device.label);

//, device.deviceId

})

})

.catch(err => {

console.log(err.name, err.message);

})

}

navigator.mediaDevices.getUserMedia(constraintObj)

.then(function(mediaStreamObj) {

let audio = document.querySelector('audio');

audio.onloadedmetadata = function(ev) {

audio.play();

};

//add listeners for saving audio

let start = document.getElementById('btnStart');

let stop = document.getElementById('btnStop');

let audSave = document.getElementById('player');

let mediaRecorder = new MediaRecorder(mediaStreamObj);

let chunks = [];

start.addEventListener('click', (ev) => {

mediaRecorder.start();

console.log(mediaRecorder.state);

})

stop.addEventListener('click', (ev) => {

mediaRecorder.stop();

console.log(mediaRecorder.state);

});

mediaRecorder.ondataavailable = function(ev) {

chunks.push(ev.data);

}

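// the audio graph is built once and reused for every recording
let context = null;
mediaRecorder.onstop = (ev) => {
// MediaRecorder usually records webm or ogg rather than mp3,
// so the blob is labelled with the recorder's own mime type
let blob = new Blob(chunks, {
'type': mediaRecorder.mimeType
});
chunks = [];
let audioURL = window.URL.createObjectURL(blob);
// lowpass
if (context === null) {
// a media element can only be attached to one source node,
// so the filter chain is created on the first recording only
context = new AudioContext();
let source = context.createMediaElementSource(audSave);
let biquadFilter = context.createBiquadFilter();
biquadFilter.type = "lowpass";
biquadFilter.frequency.value = 400;
// the gain parameter is not used by lowpass filters, so it is left unset
source.connect(biquadFilter);
biquadFilter.connect(context.destination);
}
audSave.src = audioURL;
console.log(audSave.src);
}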

})

.catch(function(err) {

console.log(err.name, err.message);

});

</script>

</body>

</html>
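To get a feeling for what a 400 Hz cutoff does to a voice, the gain of the filter can be estimated at a few frequencies. The sketch below is not part of the workshop scripts: it evaluates the lowpass coefficient formulas from the Web Audio API specification in plain JavaScript, and the sample rate of 44100 Hz and the Q of 1 dB (the node's default) are assumptions.

```javascript
// Approximate gain of a Web Audio style lowpass biquad at a given frequency.
// sampleRate and qDb are assumed values, not taken from the script above.
function lowpassGain(freqHz, cutoffHz = 400, sampleRate = 44100, qDb = 1) {
  const w0 = 2 * Math.PI * cutoffHz / sampleRate;
  const alpha = Math.sin(w0) / (2 * Math.pow(10, qDb / 20));
  const cosW0 = Math.cos(w0);
  // numerator (b) and denominator (a) coefficients of the filter
  const b = [(1 - cosW0) / 2, 1 - cosW0, (1 - cosW0) / 2];
  const a = [1 + alpha, -2 * cosW0, 1 - alpha];
  const w = 2 * Math.PI * freqHz / sampleRate;
  // magnitude of c0 + c1*z^-1 + c2*z^-2 evaluated at z = e^{jw}
  const magnitude = (c) => {
    const re = c[0] + c[1] * Math.cos(w) + c[2] * Math.cos(2 * w);
    const im = -(c[1] * Math.sin(w) + c[2] * Math.sin(2 * w));
    return Math.hypot(re, im);
  };
  return magnitude(b) / magnitude(a);
}

for (const f of [100, 400, 1000, 4000]) {
  console.log(f + " Hz -> gain " + lowpassGain(f).toFixed(3));
}
```

Frequencies well above the cutoff, where much of the clarity of speech sits, come out strongly attenuated, while the low end passes almost unchanged.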

Lowpitch

<!DOCTYPE html>

<html lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<title></title>

</head>

<body>

<p><button id="btnStart">START RECORDING</button>

<button id="btnStop">STOP RECORDING</button>

</p>

<h2>Lowpitch</h2>

<script>

let constraintObj = {

audio: true,

video: false

};

//handle older browsers that might implement getUserMedia in some way

if (navigator.mediaDevices === undefined) {

navigator.mediaDevices = {};

navigator.mediaDevices.getUserMedia = function(constraintObj) {

let getUserMedia = navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

if (!getUserMedia) {

return Promise.reject(new Error('getUserMedia is not implemented in this browser'));

}

return new Promise(function(resolve, reject) {

getUserMedia.call(navigator, constraintObj, resolve, reject);

});

}

} else {

navigator.mediaDevices.enumerateDevices()

.then(devices => {

devices.forEach(device => {

console.log(device.kind.toUpperCase(), device.label);

//, device.deviceId

})

})

.catch(err => {

console.log(err.name, err.message);

})

}

navigator.mediaDevices.getUserMedia(constraintObj)

.then(function(mediaStreamObj) {

//add listeners for saving audio

let start = document.getElementById('btnStart');

let stop = document.getElementById('btnStop');

let mediaRecorder = new MediaRecorder(mediaStreamObj);

let chunks = [];

start.addEventListener('click', (ev) => {

mediaRecorder.start();

console.log(mediaRecorder.state);

})

stop.addEventListener('click', (ev) => {

mediaRecorder.stop();

console.log(mediaRecorder.state);

});

mediaRecorder.ondataavailable = function(ev) {

chunks.push(ev.data);

}

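mediaRecorder.onstop = (ev) => {
// MediaRecorder usually records webm or ogg rather than mp3,
// so the blob is labelled with the recorder's own mime type
let blob = new Blob(chunks, {
'type': mediaRecorder.mimeType
});
chunks = [];
let context = new AudioContext();
let audioURL = window.URL.createObjectURL(blob);
window.fetch(audioURL)
.then(response => response.arrayBuffer())
.then(arrayBuffer => context.decodeAudioData(arrayBuffer))
.then(audioBuffer => {
let source = context.createBufferSource();
source.buffer = audioBuffer;
// a playback rate below 1 slows the sample down and lowers its pitch
source.playbackRate.value = 0.65;
source.connect(context.destination);
// release the object URL and the audio context once playback has ended
source.onended = () => {
window.URL.revokeObjectURL(audioURL);
context.close();
};
source.start();
})
.catch(err => {
console.log(err.name, err.message);
});
}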

})

.catch(function(err) {

console.log(err.name, err.message);

});

</script>

</body>

</html>
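Changing the playback rate lowers the pitch and stretches the sample at the same time. As a small worked example (the helper names are hypothetical, only the rate of 0.65 comes from the script above), the shift can be expressed in semitones and the new duration in seconds:

```javascript
// Pitch shift in semitones caused by a playback rate (12 semitones = one octave).
function semitoneShift(playbackRate) {
  return 12 * Math.log2(playbackRate);
}

// Duration of a sample after it has been slowed down or sped up.
function stretchedDuration(seconds, playbackRate) {
  return seconds / playbackRate;
}

console.log("shift in semitones:", semitoneShift(0.65).toFixed(2));
console.log("a 2 s sample lasts", stretchedDuration(2, 0.65).toFixed(2), "s");
```

A rate of 0.65 therefore lowers the voice by roughly seven and a half semitones and makes the recording about one and a half times longer.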

Echo

Download https://gitlab.com/nglk/radioactive/-/blob/master/js/reverb.js and put it in a js folder

<!DOCTYPE html>

<html lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

</head>

<body>

<p><button id="btnStart">START RECORDING</button>

<button id="btnStop">STOP RECORDING</button>

</p>

<h2>Echo</h2>

<audio id="player" controls>

</audio><br>

<script src="js/reverb.js"></script>

<script>

var masterNode;

var bypassNode;

var delayNode;

var feedbackNode;

let constraintObj = {

audio: true,

video: false

};

//handle older browsers that might implement getUserMedia in some way

if (navigator.mediaDevices === undefined) {

navigator.mediaDevices = {};

navigator.mediaDevices.getUserMedia = function(constraintObj) {

let getUserMedia = navigator.webkitGetUserMedia || navigator.mozGetUserMedia;

if (!getUserMedia) {

return Promise.reject(new Error('getUserMedia is not implemented in this browser'));

}

return new Promise(function(resolve, reject) {

getUserMedia.call(navigator, constraintObj, resolve, reject);

});

}

} else {

navigator.mediaDevices.enumerateDevices()

.then(devices => {

devices.forEach(device => {

console.log(device.kind.toUpperCase(), device.label);

//, device.deviceId

})

})

.catch(err => {

console.log(err.name, err.message);

})

}

navigator.mediaDevices.getUserMedia(constraintObj)

.then(function(mediaStreamObj) {

let audio = document.querySelector('audio');

audio.onloadedmetadata = function(ev) {

audio.play();

};

//add listeners for saving audio

let start = document.getElementById('btnStart');

let stop = document.getElementById('btnStop');

let audSave = document.getElementById('player');

let mediaRecorder = new MediaRecorder(mediaStreamObj);

let chunks = [];

start.addEventListener('click', (ev) => {

mediaRecorder.start();

console.log(mediaRecorder.state);

})

stop.addEventListener('click', (ev) => {

mediaRecorder.stop();

console.log(mediaRecorder.state);

});

mediaRecorder.ondataavailable = function(ev) {

chunks.push(ev.data);

}

// the audio graph is built once and reused for every recording
let context = null;
mediaRecorder.onstop = (ev) => {
// MediaRecorder usually records webm or ogg rather than mp3,
// so the blob is labelled with the recorder's own mime type
let blob = new Blob(chunks, {
'type': mediaRecorder.mimeType
});
chunks = [];
let audioURL = window.URL.createObjectURL(blob);
if (context === null) {
// a media element can only be attached to one source node,
// so the effect chain is created on the first recording only
context = new AudioContext();
let source = context.createMediaElementSource(audSave);
reverbjs.extend(context);
// Load the impulse response; upon load, connect it to the audio output.
// This http URL may be blocked when the page itself is served over https.
var reverbUrl = "http://reverbjs.org/Library/SportsCentreUniversityOfYork.m4a";
var reverbNode = context.createReverbFromUrl(reverbUrl, function() {
reverbNode.connect(context.destination);
});
//create the delay chain
delayNode = context.createDelay(100);
feedbackNode = context.createGain();
bypassNode = context.createGain();
masterNode = context.createGain();
//controls
delayNode.delayTime.value = 3.5;
feedbackNode.gain.value = 0.3;
bypassNode.gain.value = 1;
// reverb -> one delayed, quieter copy of the voice -> output
source.connect(reverbNode);
reverbNode.connect(delayNode);
delayNode.connect(feedbackNode);
feedbackNode.connect(bypassNode);
// delayNode.connect(bypassNode);
bypassNode.connect(masterNode);
masterNode.connect(context.destination);
}
audSave.src = audioURL;
console.log(audSave.src);
}

})

.catch(function(err) {

console.log(err.name, err.message);

});

</script>

</body>

</html>
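The script above sends the reverberated voice through the delay once, so the listener hears a single delayed copy. A repeating echo is usually modelled as a feedback delay line, where the delayed output is fed back into the delay. The following is a minimal sketch of that idea on a plain array of samples, not the code used in the project; the function name and the tiny delay of 4 samples are chosen for illustration only.

```javascript
// Feedback delay line: y[n] = x[n] + feedback * y[n - delaySamples]
function feedbackEcho(input, delaySamples, feedback, outputLength) {
  const out = new Float32Array(outputLength);
  for (let n = 0; n < outputLength; n++) {
    const dry = n < input.length ? input[n] : 0;
    const delayed = n >= delaySamples ? out[n - delaySamples] : 0;
    out[n] = dry + feedback * delayed;
  }
  return out;
}

// A single click followed by silence: each repetition is 0.3 times the previous one.
const click = [1];
const echoed = feedbackEcho(click, 4, 0.3, 13);
console.log(Array.from(echoed, v => v.toFixed(3)).join(" "));
```

With the Web Audio API the same loop could be built by connecting the gain node back into the delay node, at the cost of echoes that keep repeating until they fade out.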

Code-switching

Alternate between languages

When using speech recognition tools, the speaker's accent may not be recognised.

An example that has political consequences is: https://gizmodo.com/experts-worry-as-germany-tests-voice-recognition-softwa-1793424680

Exchange voice messages. A dialogue of alter egos

Use the audio output as microphone input to play the voice samples and stream them in Jitsi.

- Install JACK and Pavucontrol (Linux): https://jackaudio.org/downloads/

- Connect the sources in JACK: https://puredata.info/docs/PulseAudioSinkJack.jpeg and https://puredata.info/docs/JackRoutingMultichannelAndBrowserAudio/

- Check the input/output in the sound settings and select the source connected to JACK.

- Refresh Jitsi and choose the JACK-connected source (answer NO to the audio check and Jitsi will show you a list of sources).

- If it is not working, check Pavucontrol. You may need to change the sources for the browser app.

- To switch between the normal microphone and JACK, change the input in the sound settings. You need to do this if you want to use the web-audio scripts, record yourself and then play the distortion back in Jitsi (in Pavucontrol, under input devices, choose the internal microphone when you come back to JACK).
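On the browser side, the list of sources mentioned in the steps above is also available to the web-audio scripts through `enumerateDevices()`. The sketch below shows one possible way to pick an input by its label. The helper name and the device labels are hypothetical, since the actual label of the JACK source depends on the system, and labels are usually empty until microphone permission has been granted.

```javascript
// Return the first audio input whose label contains the given text, or null.
function findAudioInput(devices, labelPart) {
  const needle = labelPart.toLowerCase();
  return devices.find(d =>
    d.kind === "audioinput" && d.label.toLowerCase().includes(needle)
  ) || null;
}

// In a browser (not run here) the result could be used like this:
// const devices = await navigator.mediaDevices.enumerateDevices();
// const jack = findAudioInput(devices, "jack");
// if (jack) {
//   const stream = await navigator.mediaDevices.getUserMedia({
//     audio: { deviceId: { exact: jack.deviceId } }
//   });
// }

// Hypothetical device list, for illustration only.
const exampleDevices = [
  { kind: "audioinput", label: "Internal Microphone", deviceId: "a" },
  { kind: "audiooutput", label: "JACK sink", deviceId: "b" },
  { kind: "audioinput", label: "JACK source", deviceId: "c" }
];
console.log(findAudioInput(exampleDevices, "jack"));
```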

links of experiments | projects | research

- https://gitlab.com/nglk/radioactive

- https://pzwiki.wdka.nl/mediadesign/Angeliki/Grad_Scripts#podcast

- https://gitlab.com/nglk/radioactive_monstrosities

- https://gitlab.com/nglk/speaking_with_the_machine

- https://gitlab.com/nglk/gossip-gaps

- https://eaiaiaiaoi.w-i-t-m.net/player.php (player / sound samples)

- https://radioactive.w-i-t-m.net/ (Vocal distortions / voice samples)

- https://radioslumber.net/ (Gossip Booth)