User:Laurier Rochon/prototyping/slinkybookreader


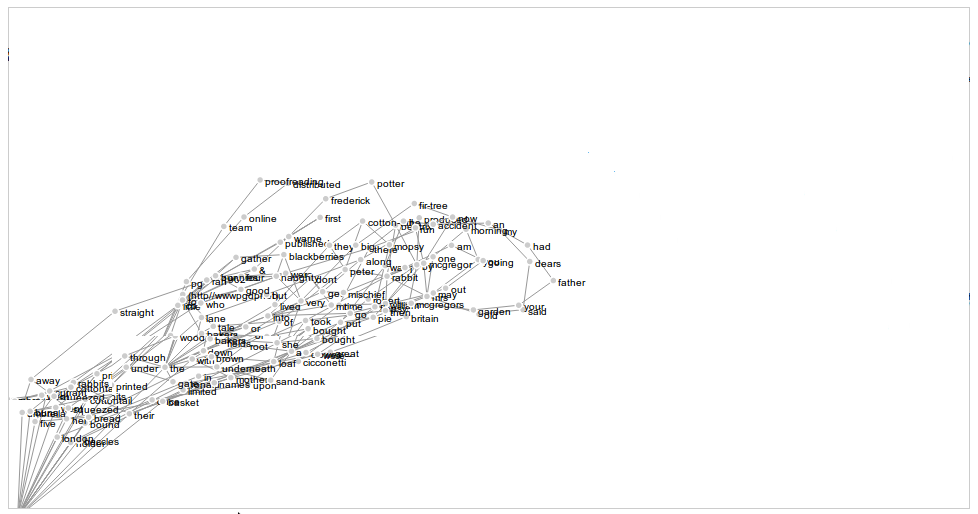

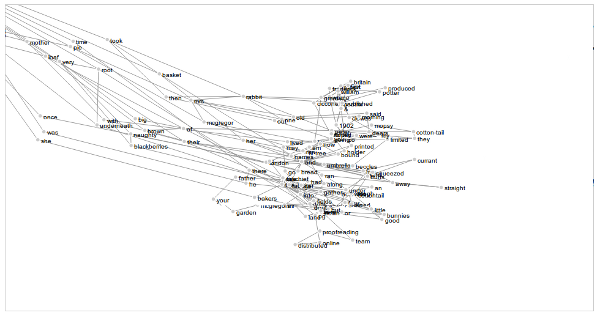


Latest revision as of 10:20, 27 October 2011

CGI/Python + D3 js library + markov chains = Slinky Text Reader

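- Check it out here: http://pzwart3.wdka.hro.nl/~lrochon/cgi-bin/slinkydink/work.class.cgi?url=http://www.gutenberg.org/cache/epub/14838/pg14838.txt&limit=20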

- You can change the number of nodes (words) displayed by changing the "limit" param

- You can change the text to open/parse by passing another page in the "url" param (preferably from the Gutenberg collection...there is some cleaning code specific to those files). If you don't specify one at all, it'll read out The Tale of Peter Rabbit, by Beatrix Potter.
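Since both params go into one query string, a link is easiest to build with proper escaping, so any "&" or "?" inside the nested Gutenberg URL can't be confused with the outer parameters. Here is a minimal Python 3 sketch. The helper `reader_url` is hypothetical (it is not part of the project), and the base address is simply copied from the link above.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Address of the CGI script, copied from the "Check it out" link.
BASE = "http://pzwart3.wdka.hro.nl/~lrochon/cgi-bin/slinkydink/work.class.cgi"


def reader_url(text_url=None, limit=20):
    """Build a link to the reader (hypothetical helper, not part of the project).

    text_url -- plain-text page to parse; left out when None, so the
                script falls back to The Tale of Peter Rabbit
    limit    -- number of words (nodes) to display
    """
    params = {"limit": limit}
    if text_url is not None:
        params["url"] = text_url
    # urlencode percent-escapes the nested URL so its own "&" or "?"
    # cannot be mistaken for parameters of the outer query string.
    return BASE + "?" + urlencode(params)


link = reader_url("http://www.gutenberg.org/cache/epub/14838/pg14838.txt", limit=50)
print(link)
# Decoding the query string gives the two parameters back.
print(parse_qs(urlparse(link).query))
```

The unescaped link above works too, because the nested URL happens to contain no "&" of its own. Escaping just makes that a non-issue.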

What it does

So I was wandering rather aimlessly through the Project Gutenberg database and couldn't find much that was compelling to me, so I decided to make a simple "work" parser that would pick apart the different pieces of a text and maybe rearrange them. I created a small class that makes a "work" object, on which you can call the markovize() method to turn the text into a Markov chain. Then I thought, for fun, that I could try to replicate the graphs I built last year, but animated. The idea was that the system would "read" a book out to you, and whenever a non-unique word occurred, the story would "split" into several paths, so that you could follow the one you preferred. Erm...I can't say the graph actually reads that way, but that was the objective. As new nodes are pumped out, the rest move away and create a kind of narrative that one can follow on the screen. D3.js seemed a good fit for this, as it already comes with a bunch of fun built-in physics (its force-directed layout).
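The "split" idea boils down to a word-transition table: for every word, the list of words that follow it. A word with more than one distinct follower is a point where the story could branch. Below is a minimal Python 3 sketch of that table. The function names are made up for illustration, and the sample sentence is taken from the Peter Rabbit text used by default.

```python
from collections import defaultdict


def markov_table(text):
    """Map each lowercase word to the list of words that follow it."""
    words = text.lower().split()
    table = defaultdict(list)
    # Pair every word with its successor; the last word has none.
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return dict(table)


def split_points(table):
    """Return the words that lead to more than one distinct next word."""
    return sorted(w for w, nxt in table.items() if len(set(nxt)) > 1)


sample = ("they lived with their mother in a sand-bank underneath "
          "the root of a very big fir-tree")
table = markov_table(sample)
print(table["a"])           # ['sand-bank', 'very']
print(split_points(table))  # ['a']
```

Here "a" is the only branching word, so a reader following the graph could go on to either "sand-bank" or "very".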

What happens, in order

- checks the url param and uses urllib2 to grab the page

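- parses the file (strips the Gutenberg header/footer, "[Illustration]" markers and punctuation) and builds a Markov chain: it gathers all the unique words of the text, then pairs every word with the word that follows it, each identified by its index in the unique-words list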

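- writes the whole thing to a JSON file using simplejson (very nice JSON lib...). Conceptually each entry looks like {"source":"1","target":"2","word":"bananas"}; in practice it is written as a three-element list [source, target, word], as in the example JSON further down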

- if all of the above occurs smoothly, use JS and jQuery to load up the JSON file

- starts drawing the SVG nodes (circles); whenever a word already has a node, it creates another link (SVG line) to that node instead of a new node

- and a few other things, too...
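The cleaning and pairing steps above can be sketched in a few lines of Python 3. This is a standalone approximation, not the CGI script itself. One deliberate change: the original builds its unique-word list with list(set(...)), whose order is arbitrary. This sketch numbers words in order of first appearance instead, so the output is reproducible. The function names are illustrative.

```python
import json
import re

# Same punctuation set the CGI script strips: , . ; : ' ! ?
PUNCTUATION = re.compile(r"[,.;:'!?]")


def clean(text):
    """Drop "[Illustration]" markers and punctuation."""
    text = text.replace("[Illustration]", "")
    return PUNCTUATION.sub("", text)


def markovize(text, limit=20):
    """Return [source, target, word] triples for the first `limit` words.

    source/target are positions in the list of unique words of the
    whole text, numbered here by first appearance.
    """
    all_words = clean(text).lower().split()
    index = {}
    for w in all_words:
        index.setdefault(w, len(index))
    words = all_words[:limit]
    # One triple per pair of consecutive words; the last word has no successor.
    return [[index[w], index[nxt], w] for w, nxt in zip(words, words[1:])]


triples = markovize("Once upon a time there were four little rabbits, "
                    "and their names were--", limit=6)
print(json.dumps(triples))
# [[0, 1, "once"], [1, 2, "upon"], [2, 3, "a"], [3, 4, "time"], [4, 5, "there"]]
```

Like the original, a limit of N words yields N - 1 entries, because each entry describes the step from one word to the next.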

A few issues encountered along the way

- D3 documentation sucks. I had worked with it before, though.

- nodes and links: I wanted to draw links dynamically as the nodes popped up, but a link needs two points to draw from and to. So how do you draw a line to a node that doesn't exist yet? I had to make a small change (which took me a while to figure out) to postpone drawing that link until after the "target" node is drawn.

- related to the previous problem: my data structure described links, but I was treating each entry as a "word" (a node) of the Markov chain. Things didn't add up logically until I wrapped my head around the fact that every JSON entry describes a link, not a node.
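The "do it later" trick can be simulated outside the browser. Below is a Python 3 sketch that mirrors the anew()/forlater logic of main.js. It turns [source, target, word] entries into a node list and a link list. When a word repeats, its node is remembered as pending and gets wired to the next new node and to the node before it. `build_graph` is a hypothetical name. It uses None as the "nothing pending" value, so node 0 is not mistaken for "not found".

```python
def build_graph(triples):
    """Turn [source, target, word] triples into nodes and links."""
    nodes = []      # words, in order of first appearance
    node_of = {}    # unique-word index -> position in `nodes`
    links = []      # (from_node, to_node) pairs of node positions
    pending = None  # node of a repeated word still waiting for its links

    for source, _target, word in triples:
        if source in node_of:
            # Existing word: the node it should link to doesn't exist yet,
            # so remember it and link it when the next new node shows up.
            pending = node_of[source]
            continue
        node_of[source] = len(nodes)
        nodes.append(word)
        new = len(nodes) - 1
        if new > 0:
            links.append((new, new - 1))
        if pending is not None:
            links.append((pending, new))
            links.append((pending, new - 1))
            pending = None
    return nodes, links


# A slice of the example JSON file: "... in a sand-bank ... of a very ..."
sample = [[292, 180, "in"], [180, 312, "a"], [312, 127, "sand-bank"],
          [127, 311, "underneath"], [311, 49, "the"], [49, 348, "root"],
          [348, 180, "of"], [180, 18, "a"], [18, 322, "very"]]
nodes, links = build_graph(sample)
print(nodes)
print(links)
```

The second "a" creates no node. Instead, the existing "a" node (position 1) gets linked to "very" (7) and "of" (6) as soon as "very" appears.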

Soft

Python shittez

```python
#!/usr/bin/python2.6
# -*- coding: utf-8 -*-

# command-line/query arg/param : http://www.gutenberg.org/cache/epub/14838/pg14838.txt

import cgi
import re
import sys
import urllib2

import simplejson

# CGI header, then a blank line before the body
print "Content-Type: text/html"
print

DEFAULT_URL = "http://www.gutenberg.org/cache/epub/14838/pg14838.txt"
DEFAULT_LIMIT = 20  # used when "limit" is missing or not a number

get = cgi.FieldStorage()
url = get.getlist("url")
limit = get.getlist("limit")


class Work:

    # instantiate: fetch the text and clean it up
    def __init__(self):
        try:
            u = url[0]
        except IndexError:
            u = DEFAULT_URL
        try:
            # open up the url given
            txt = urllib2.urlopen(u).read().strip()
            # cleaning: keep only what sits between the Gutenberg START/END markers
            r = re.compile(r'\*\*\* .* OF THIS PROJECT GUTENBERG EBOOK .* \*\*\*')
            story = r.split(txt)[1]
            story = re.sub(r"\[Illustration\]", "", story)
            story = re.sub(r",|\.|;|:|'|\!|\?", "", story)
            self.story = story
            self.unique_words = self.find_unique_words()
        except Exception:
            # error: download failed or the Gutenberg markers were not found
            print "could not load url"
            sys.exit()

    # print to stdout
    def readme(self):
        print self.story

    # return unique words
    def find_unique_words(self):
        return list(set(self.story.lower().split()))

    # write [source, target, word] triples, one per pair of consecutive words
    def markovize(self):
        words = self.story.lower().split()
        # limit...
        try:
            n = int(limit[0])
        except (IndexError, ValueError):
            n = DEFAULT_LIMIT
        words = words[0:n]
        # word -> position in the unique-word list (avoids list.index per word)
        position = dict((w, i) for i, w in enumerate(self.unique_words))
        pairs = []
        for a in range(len(words) - 1):
            pairs.append([position[words[a]], position[words[a + 1]], words[a]])

        # this does it a bit differently...unique words first
        # for w in self.unique_words:
        #     matches = [i for i, val in enumerate(words) if val.lower() == w.lower()]
        #     for match in matches:
        #         pairs.append([a, match, words[match]])
        #     a = a + 1

        with open("../../slinkfiles/words", "w") as jsonfile:
            simplejson.dump(pairs, jsonfile)


work = Work()
work.markovize()

print '''<!DOCTYPE html>
<html>
<head>
<title>Slink-A-Dink SVG</title>
<script type="text/javascript" src="../../slinkfiles/d3_all.js"></script>
<script type="text/javascript" src="../../slinkfiles/jquery-1.6.4min.js"></script>
<link rel="stylesheet" href="../../slinkfiles/main.css" media="screen" />
</head>
<body>
<form method="get">
<div id="txt">(you can click and drag stuff)
<select name="limit">
'''

for b in range(2, 201):
    print '<option value="%d">%d</option>' % (b, b)

print '''</select>
<input type="submit" value="go">
</div>
</form>
<div id="chart"></div>
<div id="low" class="low">Default text, if "url" GET param not specified : <a href="http://www.gutenberg.org/cache/epub/14838/pg14838.txt" target="_blank">http://www.gutenberg.org/cache/epub/14838/pg14838.txt</a></div>
<script type="text/javascript" src="../../slinkfiles/main.js"></script>
</body>
</html>
'''
```
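The cleaning step relies on `re.split` with the marker pattern. On a file that has both a START and an END line, that gives three pieces: header, story, footer. The script keeps piece [1]. Here is a small Python 3 sketch of just that step. `extract_story` is a hypothetical name, and it falls back to the whole text when the markers are missing instead of failing on `[1]`. Note that the pattern only matches markers worded exactly "*** ... OF THIS PROJECT GUTENBERG EBOOK ... ***".

```python
import re

# Same marker pattern as the CGI script.
MARKER = re.compile(r"\*\*\* .* OF THIS PROJECT GUTENBERG EBOOK .* \*\*\*")


def extract_story(txt):
    """Return the text between the START and END markers, if both are present."""
    parts = MARKER.split(txt)
    # header, story, footer -> keep the story; otherwise keep everything
    return parts[1] if len(parts) >= 3 else txt


SAMPLE = ("Header stuff\n"
          "*** START OF THIS PROJECT GUTENBERG EBOOK PETER RABBIT ***\n"
          "Once upon a time\n"
          "*** END OF THIS PROJECT GUTENBERG EBOOK PETER RABBIT ***\n"
          "License")
print(extract_story(SAMPLE).strip())  # Once upon a time
```

Since `.` does not match newlines, each `.*` stays within its own marker line, so the header and footer text never leaks into the story.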

Main js shittez

```javascript
var w = 960,
    h = 500,
    fill = d3.scale.category20(),
    nodes = [],
    links = [];

var vis = d3.select("#chart").append("svg:svg")
    .attr("width", w)
    .attr("height", h);

vis.append("svg:rect")
    .attr("width", w)
    .attr("height", h);

var force = d3.layout.force()
    .distance(30)
    .nodes(nodes)
    .links(links)
    .size([w, h]);

// move lines, circles and labels on every step of the simulation
force.on("tick", function() {
  vis.selectAll("line.link")
      .attr("x1", function(d) { return d.source.x; })
      .attr("y1", function(d) { return d.source.y; })
      .attr("x2", function(d) { return d.target.x; })
      .attr("y2", function(d) { return d.target.y; });

  vis.selectAll("circle.node")
      .attr("cx", function(d) { return d.x; })
      .attr("cy", function(d) { return d.y; });

  vis.selectAll("text.label")
      .attr("x", function(d) { return d.x + 5; })
      .attr("y", function(d) { return d.y + 5; });
});

// declare shittez
var forlater = -1; // node of a repeated word whose links are pending (-1 = none)
var a = 0;         // index of the next node to create
var dcounter = 0;  // index of the next JSON entry to deliver

// feed one [source, target, word] entry to anew() every 200 ms
function deliver(data) {
  if (dcounter < data.length) {
    setTimeout(function() { deliver(data); }, 200);
    anew(data[dcounter][2], data[dcounter][0], data[dcounter][1]);
    dcounter++;
  }
}

// (currently unused)
function findmatches(no, myid) {
  var m_list = [];
  for (var q = 0; q < nodes.length; q++) {
    if (nodes[q].t == no && q != myid) {
      m_list.push(q);
    }
  }
  return m_list;
}

jQuery.getJSON("words", function(data) {
  deliver(data);
});

// index of the node for unique word t, or -1 if there is none yet
// (returning false/0 made node 0 look like "not found")
function node_exists(t) {
  for (var c = 0; c < nodes.length; c++) {
    if (nodes[c].sou == t) {
      return c;
    }
  }
  return -1;
}

function anew(txt, sou, tar) {
  var exists = node_exists(sou);
  if (exists === -1) {
    var node = {x: w / 2, y: h / 2, sou: sou, tar: tar};
    nodes.push(node);
    vis.append("svg:text")
        .attr("x", nodes[a].x)
        .attr("y", nodes[a].y)
        .attr("class", "label")
        .style("fill", "black")
        .style("font-size", "10px")
        .style("font-family", "Arial")
        .text(txt);
    if (a > 0) {
      links.push({source: nodes[a], target: nodes[a - 1]});
    }
    if (forlater !== -1) {
      links.push({source: nodes[forlater], target: nodes[a]});
      links.push({source: nodes[forlater], target: nodes[a - 1]});
      forlater = -1;
    }
    a++;
  } else {
    // stupid shit...can't create a link to a node that doesn't exist can we?
    // why do something now when we can do it later huh
    forlater = exists;
  }
  restart();
}

restart();

function restart() {
  force.start();

  vis.selectAll("line.link")
      .data(links)
    .enter().insert("svg:line", "circle.node")
      .attr("class", "link")
      .attr("x1", function(d) { return d.source.x; })
      .attr("y1", function(d) { return d.source.y; })
      .attr("x2", function(d) { return d.target.x; })
      .attr("y2", function(d) { return d.target.y; });

  vis.selectAll("circle.node")
      .data(nodes)
    .enter().insert("svg:circle", "circle.cursor")
      .attr("class", "node")
      .attr("cx", function(d) { return d.x; })
      .attr("cy", function(d) { return d.y; })
      .attr("r", 3.5)
      .call(force.drag);

  // er...wtf? this binds the nodes, by index, to the labels appended in
  // anew(), so the tick handler can move them; enter() only adds a label
  // if one is missing
  vis.selectAll("text.label")
      .data(nodes)
    .enter().insert("svg:text", "text.label")
      .attr("class", "label")
      .attr("x", function(d) { return d.x; })
      .attr("y", function(d) { return d.y; })
      .call(force.drag);
}
```
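deliver() hands the entries to anew() one by one, in file order, so a words file is expected to be one continuous walk through the text: each entry's target should be the next entry's source. Below is a small Python 3 check for that, plus a list of the words that branch. The helper names are hypothetical, and the input is a slice copied from the example JSON on this page.

```python
import json
from collections import defaultdict

# A slice of the example words file, as raw JSON text.
WORDS_JSON = """[[165, 212, "potter"], [212, 33, "frederick"], [33, 212, "warne"],
 [212, 33, "frederick"], [33, 161, "warne"], [161, 52, "first"]]"""


def is_chained(triples):
    """True if every entry's target is the next entry's source."""
    return all(a[1] == b[0] for a, b in zip(triples, triples[1:]))


def branching_words(triples):
    """Map each word that leads to more than one next word to those targets."""
    targets = defaultdict(set)
    for _source, target, word in triples:
        targets[word].add(target)
    return {w: sorted(t) for w, t in targets.items() if len(t) > 1}


triples = json.loads(WORDS_JSON)
print(is_chained(triples))       # True
print(branching_words(triples))  # {'warne': [161, 212]}
```

"warne" is followed once by "frederick" (212) and once by "first" (161), which is exactly the kind of split the reader was meant to show.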

Example JSON shittez

```json
[[109, 345, "produced"], [345, 146, "by"], [146, 365, "robert"], [365, 98, "cicconetti"], [98, 185, "ronald"], [185, 192, "holder"],
 [192, 311, "and"], [311, 280, "the"], [280, 203, "pg"], [203, 223, "online"], [223, 167, "distributed"], [167, 25, "proofreading"], [25, 100, "team"], [100, 311, "(http//wwwpgdpnet)"],
 [311, 102, "the"], [102, 348, "tale"], [348, 335, "of"], [335, 70, "peter"], [70, 345, "rabbit"], [345, 63, "by"], [63, 165, "beatrix"], [165, 212, "potter"],
 [212, 33, "frederick"], [33, 212, "warne"], [212, 33, "frederick"], [33, 161, "warne"], [161, 52, "first"], [52, 56, "published"], [56, 212, "1902"],
 [212, 33, "frederick"], [33, 59, "warne"], [59, 268, "&"], [268, 56, "co"], [56, 351, "1902"], [351, 192, "printed"], [192, 354, "and"], [354, 292, "bound"],
 [292, 48, "in"], [48, 386, "great"], [386, 345, "britain"], [345, 398, "by"], [398, 184, "william"], [184, 0, "clowes"], [0, 205, "limited"], [205, 192, "beccles"], [192, 110, "and"], [110, 405, "london"],
 [405, 300, "once"], [300, 180, "upon"], [180, 404, "a"], [404, 362, "time"], [362, 190, "there"], [190, 5, "were"], [5, 170, "four"], [170, 380, "little"], [380, 192, "rabbits"],
 [192, 174, "and"], [174, 55, "their"], [55, 253, "names"], [253, 269, "were--"], [269, 339, "flopsy"], [339, 320, "mopsy"], [320, 192, "cotton-tail"], [192, 335, "and"], [335, 128, "peter"],
 [128, 281, "they"], [281, 374, "lived"], [374, 174, "with"], [174, 310, "their"], [310, 292, "mother"], [292, 180, "in"], [180, 312, "a"], [312, 127, "sand-bank"],
 [127, 311, "underneath"], [311, 49, "the"], [49, 348, "root"], [348, 180, "of"], [180, 18, "a"], [18, 322, "very"], [322, 314, "big"], [314, 131, "fir-tree"],
 [131, 79, "now"], [79, 143, "my"], [143, 249, "dears"], [249, 328, "said"], [328, 213, "old"], [213, 70, "mrs"], [70, 166, "rabbit"], [166, 140, "one"], [140, 395, "morning"],
 [395, 96, "you"], [96, 6, "may"], [6, 353, "go"], [353, 311, "into"], [311, 237, "the"], [237, 350, "fields"], [350, 356, "or"], [356, 311, "down"], [311, 400, "the"], [400, 209, "lane"],
 [209, 163, "but"], [163, 6, "dont"], [6, 353, "go"], [353, 77, "into"], [77, 256, "mr"], [256, 16, "mcgregors"]]
```