User:Tash/grad prototyping3


== Prototyping 09 April ==

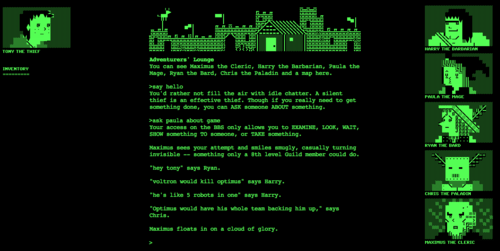

[[File:Guildedyouth-100006853-orig.png|500px|thumbnail|right|Guilded Youth, text-based adventure game]]

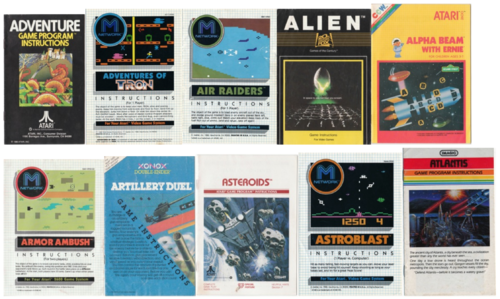

[[File:atari-game-manuals.png|500px|thumbnail|right|Atari game manuals]]

'''Takeaways from last assessment:'''
* important to build context & urgency
* installation at exhibition should situate the game within warnet culture
* cards may not be the most suitable format?
* option 1: game manual / player's handbook
** print element, places it again within copyshop / warnet context
** physical element, to counterbalance the online aspect of the game
** references the tactical handbook (military, troll handbooks, counterculture manuals, etc.)
* option 2: text-based browser game
** brings it into the realm of video games
** more immersive gameplay
** technically difficult (how to do multiplayer? how to incorporate into the wiki?)
* questions:
** how to keep the tension between chance and choice?
** roles of different project elements: wiki as rabbit hole and input point, handbook / cards / text-based prompts as tools, and the game as performance

'''Planning'''
* April: finalize formats & develop game
* April - May: 2 more play sessions (with Marlies / Shayan / Jacintha?)
* June: final documentation and production

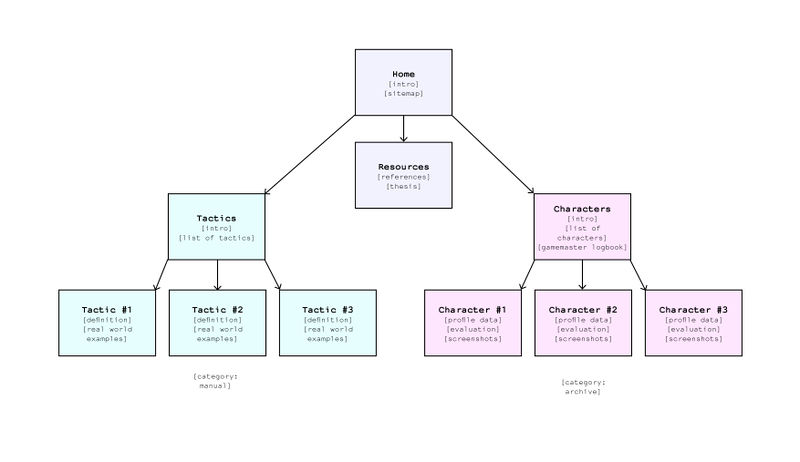

== Wiki sitemap ==
[[File:Tash-Wiki-sitemap.jpg|800px|frameless|center]]

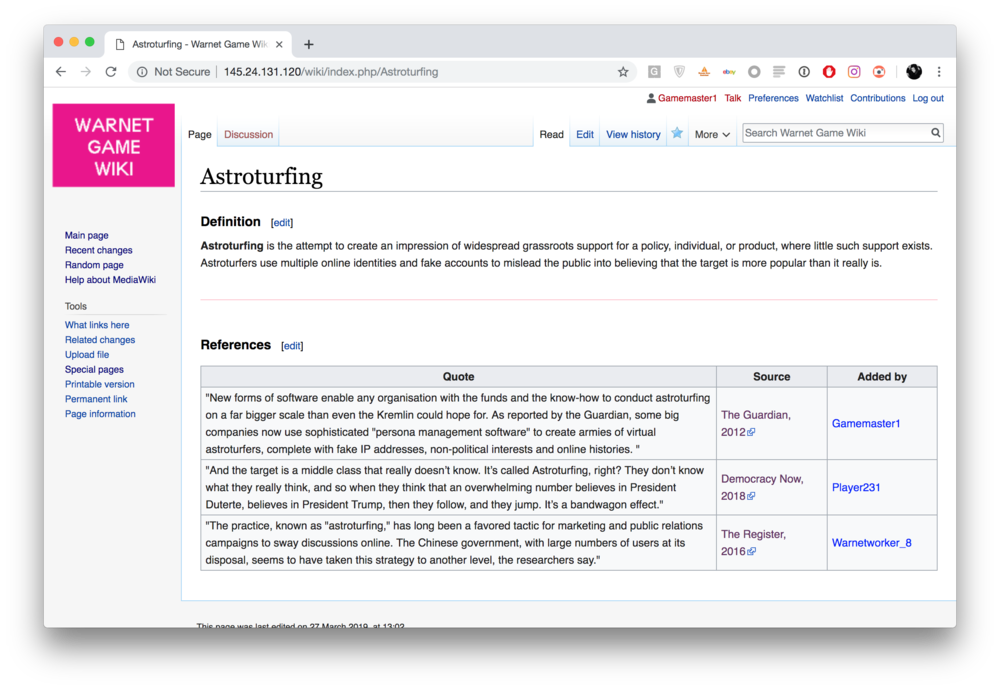

== Wiki development: 27 March ==
[[File:Screen Shot 2019-03-27 at 14.02.56.png|1000px|frameless|left]]
[[File:Screen_Shot_2019-03-27_at_14.04.03.png|1000px|frameless|left]]
<div style="clear:both;"></div>

== To-do (Test play 2) ==
* <s> Finalize Plan A and Plan B for test location and time </s>
* <s> Test archiving script over at least 10 mins of activity </s>
* <s> Gameplay: prepare at least 4 email addresses </s>
* <s> Gameplay: prepare cards & print </s>
* <s> Gameplay: make handbook version & print </s>
* <s> Wiki: make & populate all action / tactic pages </s>
* <s> Wiki: make at least 3 characters to choose from </s>
* <s> Buy paper & stationery </s>

== Prototyping 06 May ==
=== Debugging MediaWiki ===
* problem: MediaWiki categories were not being saved to the database
* running the maintenance script rebuildall.php was a temporary workaround
* more research: there seems to be a problem in the PHP extension settings, plus errors when looking up certain shared database files
* working on it with Andre: perhaps the SQLite database is causing the errors
* solution: reinstall the wiki with a MySQL database and move the content to the new wiki
* export: php dumpBackup.php --current --output=file:dump.xml
* import: sudo php importDump.php < /home/pi/dump.xml
* with the new database, jobs seem to run smoothly and categories are updating!
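After the move to MySQL, one way to double-check that categories are really being stored is to ask the wiki's API which pages a category contains. This is a minimal sketch with a few assumptions. It assumes `api.php` sits next to `index.php` under `/warnet/` and uses `Tactics` as the example category. The helper names are hypothetical. Only the first batch of up to `limit` results is read; bigger categories would need the API's `continue` parameter.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def category_members_url(host, path, category, limit=500):
    """Build a MediaWiki API query for the pages in Category:<category>.

    Assumes api.php lives under `path` (which should end with "/").
    """
    params = {
        "action": "query",
        "list": "categorymembers",
        "cmtitle": "Category:" + category,
        "cmlimit": limit,
        "format": "json",
    }
    return "http://{}{}api.php?{}".format(host, path, urlencode(params))


def parse_category_members(payload):
    """Return the page titles listed in a categorymembers JSON response."""
    data = json.loads(payload)
    members = data.get("query", {}).get("categorymembers", [])
    return [member["title"] for member in members]


def fetch_category_members(host, path, category):
    """Query the live wiki (first batch only, no continuation)."""
    with urlopen(category_members_url(host, path, category)) as response:
        return parse_category_members(response.read().decode("utf-8"))
```

For example, `fetch_category_members("hub.xpub.nl", "/warnet/", "Tactics")` should list the tactic pages. An empty list for a category that visibly has pages would be the same symptom as the original bug.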

=== Wiki to print pipelines ===
* starting points: https://github.com/wdka-publicationSt/print-kiosk-ii (LaTeX) and https://gitlab.com/Mondotheque/RadiatedBook/blob/master/download_wiki.py (WeasyPrint)
* option 1: download HTML files from the wiki using the API, order them into a single HTML file and then make a PDF with WeasyPrint
* option 2: possibly use ICML files and InDesign, which may be an 'in-between' solution
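Option 1 could look roughly like this. The page fragments saved by the import script (one `.html` file per page, fetched with `action=render`) are read in a chosen order. Each is wrapped in a `<section>`, and all of them are joined into one document that WeasyPrint renders as a single PDF. This is a sketch under assumptions: the file naming mirrors the import script, and the function names and default title are hypothetical.

```python
import html
from pathlib import Path


def combine_fragments(fragments, title="Warnet handbook"):
    """Join (page_name, html_fragment) pairs into one HTML document.

    The list order decides the page order in the printed result.
    """
    sections = []
    for name, body in fragments:
        sections.append(
            '<section class="page" id="{}">\n<h1>{}</h1>\n{}\n</section>'.format(
                html.escape(name.replace(" ", "_"), quote=True),
                html.escape(name),
                body,  # already-rendered wiki HTML, inserted as-is
            )
        )
    return (
        '<!DOCTYPE html>\n<html>\n<head><meta charset="utf-8">'
        "<title>{}</title></head>\n<body>\n{}\n</body>\n</html>\n"
    ).format(html.escape(title), "\n".join(sections))


def load_fragments(html_dir, order):
    """Read <Page_name>.html files from html_dir in the given order."""
    fragments = []
    for name in order:
        filename = name.replace(" ", "_").replace("/", "_") + ".html"
        text = (Path(html_dir) / filename).read_text(encoding="utf-8")
        fragments.append((name, text))
    return fragments


def write_book_pdf(document, pdf_path, css_path=None):
    """Render the combined HTML with WeasyPrint (imported here so the
    other helpers work without it installed)."""
    from weasyprint import CSS, HTML
    stylesheets = [CSS(filename=css_path)] if css_path else None
    HTML(string=document).write_pdf(pdf_path, stylesheets=stylesheets)
```

Adding `section.page { page-break-before: always; }` to the print stylesheet would start each wiki page on a new sheet.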

'''Code: Import wiki content & save images''' <br>
<source lang=python>
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# Download the pages of one or more wiki categories as rendered HTML,
# fetch their images, then strip elements that should not end up on the cards.
import os
import subprocess
import time
from argparse import ArgumentParser
from urllib.parse import quote
from urllib.request import urlopen

from bs4 import BeautifulSoup
from mwclient import Site

####
# Arguments
####
p = ArgumentParser()
p.add_argument("--host", default="hub.xpub.nl")
p.add_argument("--path", default="/warnet/", help="nb: should end with /")
# Each -c adds a group of categories; pages must be in all of them.
# default=None: with action="append", argparse would otherwise append user values to the default.
p.add_argument("--category", "-c", nargs="*", default=None, action="append", help="category to query")
p.add_argument("--download", "-d", default="htmlpages", help="What you want to download: htmlpages; images; wikipages")
args = p.parse_args()
categories = args.category or [["Tactics"]]

#########
# defs
#########
def mwsite(host, path):
    """Return the mwclient Site object for the wiki."""
    return Site(host, path=path, scheme="http")

def mw_cats(site, categories):
    """Return the names of pages that are members of every given category."""
    last_names = None
    results = []
    for group in categories:
        for cname in group:
            pages = list(site.Categories[cname].members())
            if last_names is None:
                results = pages
            else:
                results = [pg for pg in pages if pg.name in last_names]
            # keep the running intersection, not only the latest category
            last_names = {pg.name for pg in results}
    return [pg.name for pg in results]

def mw_page(site, pagename):
    return site.Pages[pagename]

def mw_page_imgsurl(site, page, imgdir):
    """Download all images used on a page into imgdir.
    Returns {"imagename": {'img_url': ..., 'img_timestamp': ...}} or None."""
    imgs = list(page.images())  # images in page
    if not imgs:
        return None
    imgs_dict = {}
    for img in imgs:
        imageinfo = img.imageinfo
        if 'url' not in imageinfo:  # skip empty images
            continue
        imagename = img.name.capitalize().replace(' ', '_')
        imgs_dict[imagename] = {'img_url': imageinfo['url'],
                                'img_timestamp': imageinfo['timestamp']}
        # wget -N: only download when the remote file is newer than the local copy
        subprocess.run(['wget', '-N', '--directory-prefix', imgdir, imageinfo['url']])
    return imgs_dict

def write_plain_file(content, filename):
    with open(filename, 'w', encoding='utf-8') as edited:
        edited.write(content.decode('utf-8'))

# ------- Action: Import HTML -------
site = mwsite(args.host, args.path)
html_dir = "htmls"
img_dir = os.path.join(html_dir, "imgs")
edits_dir = os.path.join(html_dir, "edits")
os.makedirs(img_dir, exist_ok=True)
os.makedirs(edits_dir, exist_ok=True)

memberpages = mw_cats(site, categories)
print(memberpages)

for pagename in memberpages:
    if args.download == 'htmlpages':
        # action=render returns only the parsed page content, without the wiki skin
        url = "http://{}{}index.php?action=render&title={}".format(args.host, args.path, quote(pagename))
        print('Download html source from', pagename)
        htmlsrc = urlopen(url).read()
        pagename = pagename.replace(" ", "_")
        write_plain_file(htmlsrc, os.path.join(html_dir, pagename.replace('/', '_') + ".html"))
        print('Download images from', pagename)
        page = mw_page(site, pagename)
        page_imgs = mw_page_imgsurl(site, page, img_dir)
        time.sleep(3)  # be gentle with the server

# ------- Action: Modify HTML using BeautifulSoup -------
KEEP_PARAGRAPHS = 0  # the old counter removed every <p>; raise to keep the first N
for filename in os.listdir(html_dir):
    if not filename.endswith(".html"):
        continue
    print(filename)
    with open(os.path.join(html_dir, filename), "rb") as htmlsrc:
        soup = BeautifulSoup(htmlsrc, "html5lib")
    for el in soup.find_all(class_='thumb tright'):  # floated thumbnails
        el.decompose()
    for i, el in enumerate(soup.find_all('p')):
        if i >= KEEP_PARAGRAPHS:
            el.decompose()
    for el in soup.find_all(class_='button'):
        print(el)
    for tag in ('h3', 'table', 'hr'):
        for el in soup.find_all(tag):
            el.decompose()
    for el in soup.find_all(attrs={"title": "Tactics"}):  # links back to the category
        el.decompose()
    with open(os.path.join(edits_dir, filename), 'w', encoding='utf-8') as edited:
        edited.write(soup.prettify())
</source>
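The `-c` option is meant to narrow the selection to pages that sit in every listed category. The same intersection can be written over plain lists of titles, which makes it easy to check without the wiki. `pages_in_all` is a hypothetical helper. The membership data would normally come from `site.Categories[name].members()`. The nested `-c` groups can be flattened first, e.g. `[c for group in categories for c in group]`.

```python
def pages_in_all(members_by_category, categories):
    """Return the titles present in every category, in the order of the last one.

    members_by_category maps a category name to the list of page titles it
    contains (e.g. collected with mwclient or the API).
    """
    remaining = None
    result = []
    for name in categories:
        titles = members_by_category.get(name, [])
        if remaining is None:
            result = list(titles)
        else:
            result = [t for t in titles if t in remaining]
        # intersect with everything seen so far, not just the previous category
        remaining = set(result)
    return result
```

With three categories, this differs from intersecting only consecutive pairs. For `{"T": ["A", "B"], "P": ["B", "C"], "X": ["A", "C"]}`, keeping just the previous category's titles would wrongly return `["C"]` instead of `[]`.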

'''Code: Make PDFs for cards using WeasyPrint''' <br>
<source lang=python>
import os

from weasyprint import HTML, CSS

## Make pdfs ##
src_dir = './htmls/edits/'
out_dir = './print/odd/'
os.makedirs(out_dir, exist_ok=True)
stylesheet = CSS('./resources/style.pdf.css')  # parse the print stylesheet once

for filename in os.listdir(src_dir):
    if not filename.endswith(".html"):
        continue
    title = filename[:-len('.html')]
    HTML(os.path.join(src_dir, filename)).write_pdf(
        os.path.join(out_dir, title + '.pdf'), stylesheets=[stylesheet])
</source>
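The card size itself can be set in the stylesheet with a CSS `@page` rule, which WeasyPrint supports. Here is a small sketch that generates such a rule. It assumes a hypothetical card format of 63 x 88 mm (a common playing-card size, not a measurement from the project). The generated text could be passed to WeasyPrint as `CSS(string=...)` next to `style.pdf.css`.

```python
def card_page_css(width_mm=63, height_mm=88, margin_mm=4):
    """Return an @page rule that sizes every PDF page as one card.

    All sizes are in millimetres; the defaults are placeholders.
    """
    if min(width_mm, height_mm) <= 2 * margin_mm:
        raise ValueError("margins leave no room for content")
    return "@page {{\n  size: {}mm {}mm;\n  margin: {}mm;\n}}\n".format(
        width_mm, height_mm, margin_mm)


def render_card(html_path, pdf_path, css_text):
    """Render one card PDF; WeasyPrint is imported only when needed."""
    from weasyprint import CSS, HTML
    HTML(html_path).write_pdf(pdf_path, stylesheets=[CSS(string=css_text)])
```

Putting the size in `@page` keeps each HTML file free of print dimensions, so one wiki page can be tried at different card formats.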

== Wiki development: 16 May ==
[[File:Screen Shot 2019-05-16 at 01.00.54.png|1000px|frameless|left]]
[[File:Screen_Shot_2019-05-16_at_01.01.00.png|1000px|frameless|left]]
[[File:Screen_Shot_2019-05-16_at_01.01.04.png|1000px|frameless|left]]
<div style="clear:both;"></div>

== Wiki development: 7 June ==
[[File:Screen_Shot_2019-06-05_at_17.52.22.png|1000px|frameless|left]]
[[File:Screen_Shot_2019-06-05_at_17.57.59.png|1000px|frameless|left]]
[[File:Screen_Shot_2019-06-05_at_17.58.20.png|1000px|frameless|left]]

Latest revision as of 08:26, 7 June 2019

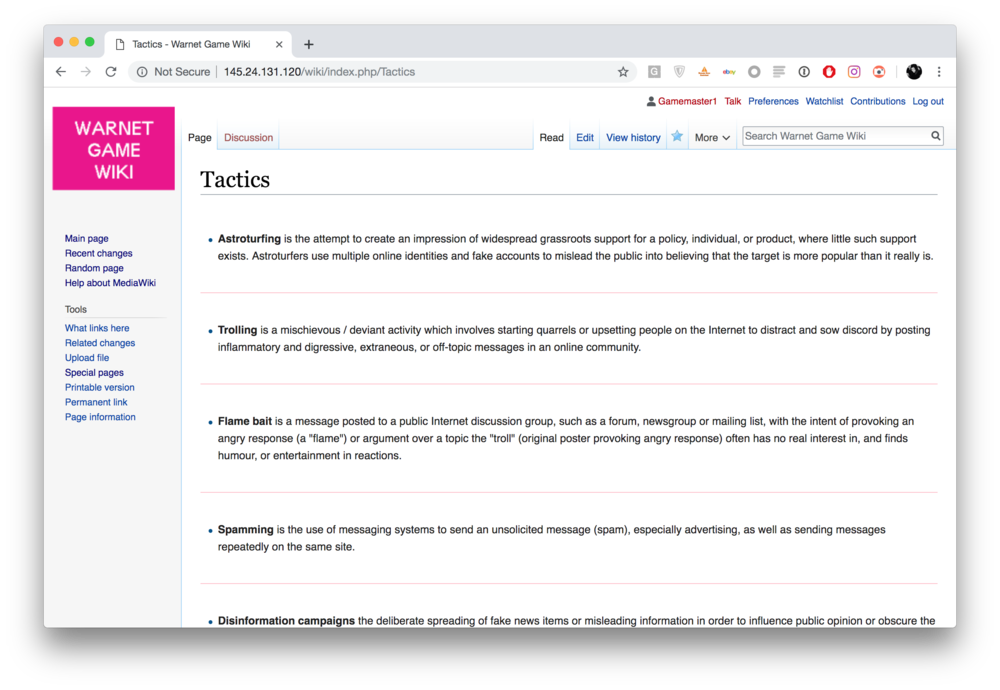

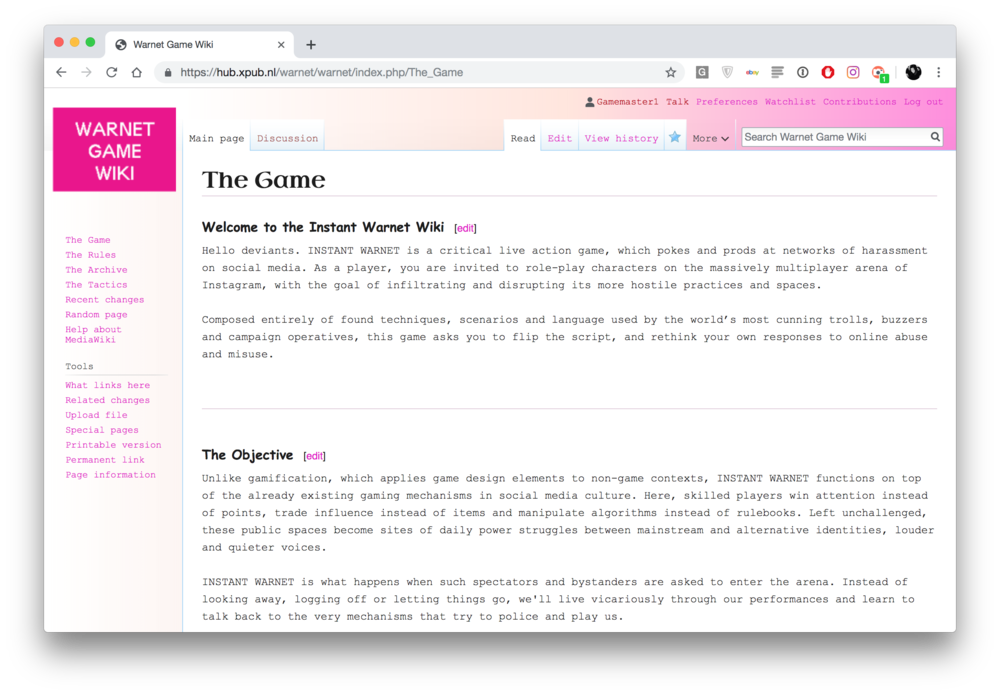

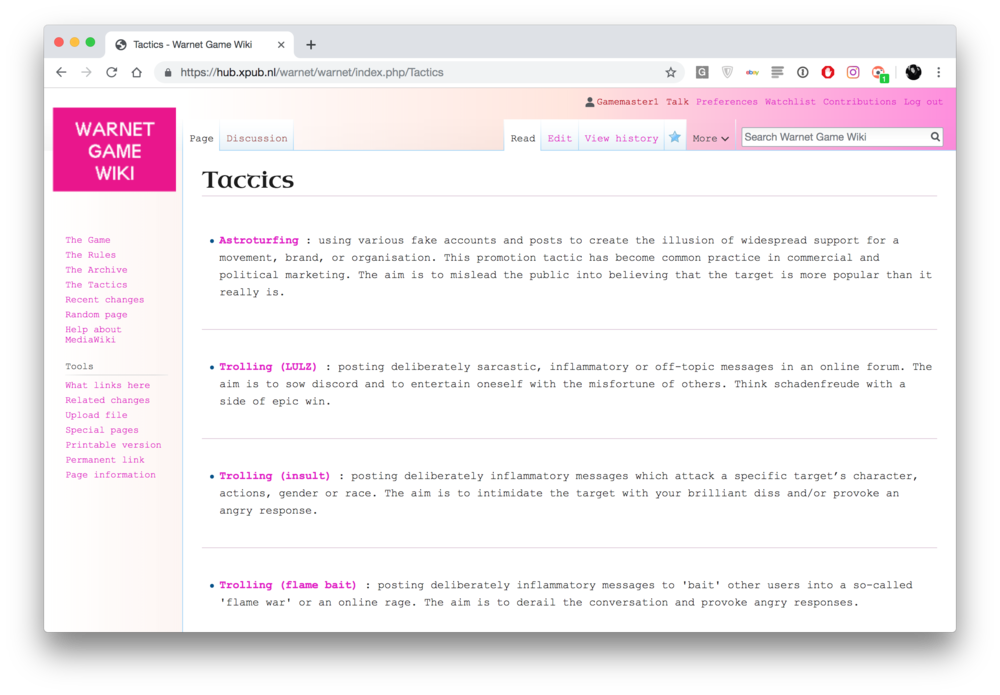

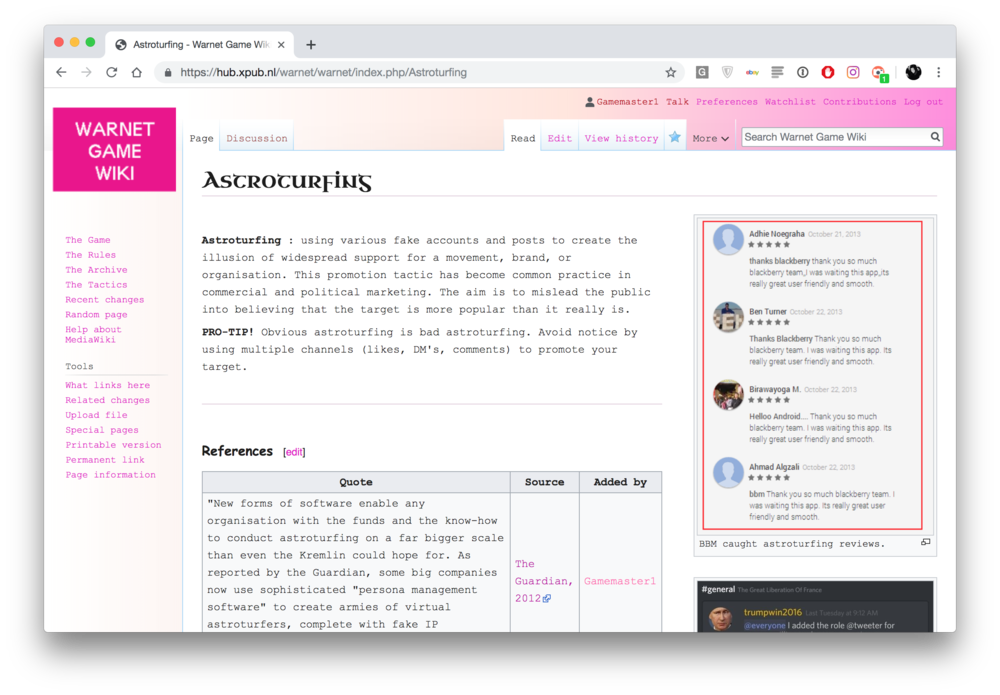

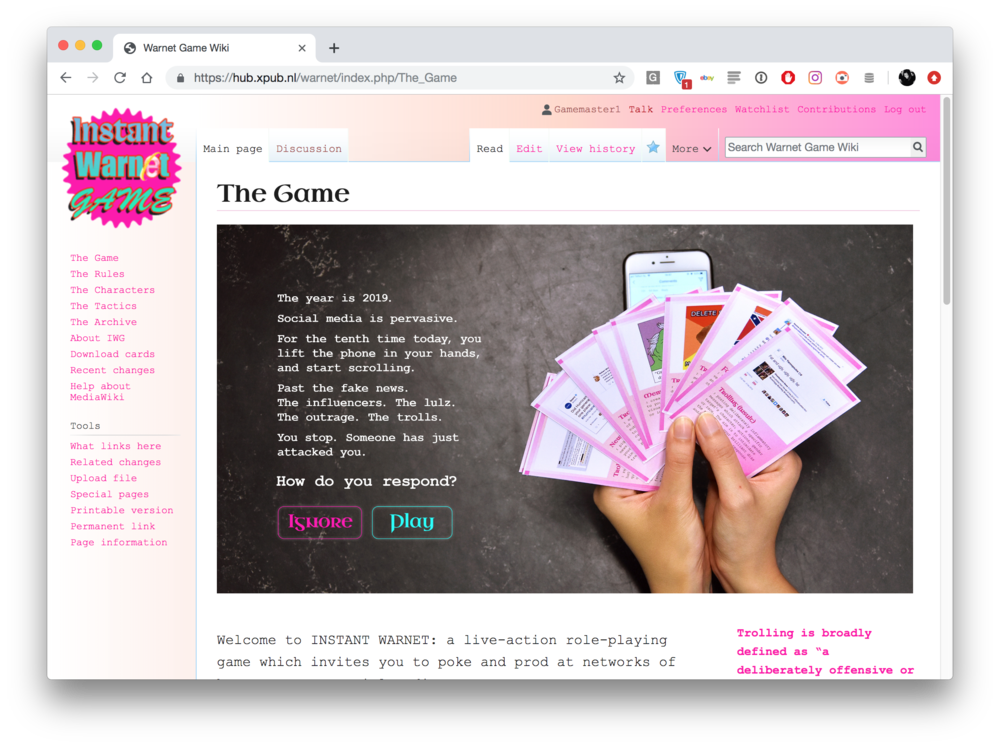

Prototyping 09 April

Takeaways from last assessment:

- important to build context & urgency

- installation at exhibition should situate the game within warnet culture

- cards may not be the most suitable format?

- option 1: game manual / player's handbook

- print element, places it again within copyshop / warnet context

- physical element, to counterbalance online aspect of the game

- references the tactical handbook (military, troll handbooks, counterculture manuals etc)

- option 2: text-based browser game

- brings it into the realm of videogames

- more immersive gameplay

- technically difficult (how to do multiplayer? how to incorporate into wiki?

- questions:

- how to keep the tension between chance vs choice ?

- roles of different project elements: wiki as rabbithole and input point, handbook / cards / text-based prompts as tools and the game as performance

Planning

- April: finalize formats & develop game

- April - May: 2 more play sessions (with Marlies / shayan / jacintha?)

- June: final documentation and production

Wiki sitemap

Wiki development: 27 March

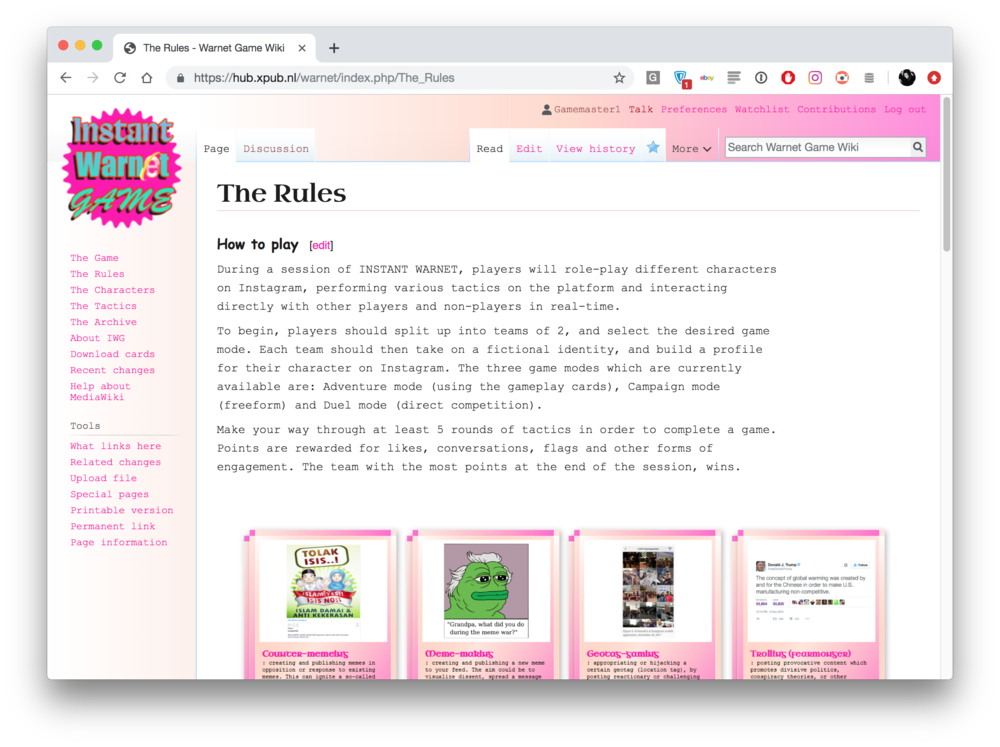

To-do (Test play 2)

Finalize Plan A and Plan B for test location and timeTest archiving script over at least 10 mins of activityGameplay: prepare at least 4 email addressesGameplay: prepare cards & printGameplay: make handbook version & printWiki: make & populate all action / tactic pagesWiki: make at least 3 characters to choose fromBuy papers & stationery

Prototyping 06 May

Debugging Mediawiki

- problem: mediawiki categories were not saving onto database

- running the maintenance script rebuildall.php was a temp solution

- more research: turns out there seems to a be a problem in the php extension settings, and errors with looking up certain shared database files

- working on it with Andre: perhaps the sqlite database is creating errors

- solution: reinstall wiki with mysql database, move content to new wiki

- export using backupDump.php --current --output dump.xml

- sudo php importDump.php < /home/pi/dump.xml

- with the new database, jobs seem to run smoothly, categories are updating!

Wiki to print pipelines

- using as starting point: https://github.com/wdka-publicationSt/print-kiosk-ii (LaTex) and https://gitlab.com/Mondotheque/RadiatedBook/blob/master/download_wiki.py (weasyprint)

- option 1: download html files from wiki using API, order them into 1 html and then make PDF file with weasyprint

- option 2: possibly using ICML files and InDesign, which may be an 'in-between' solution

Code: Import wiki content & save images

#! /usr/bin/env python

# -*- coding: utf-8 -*-

from mwclient import Site

from urllib.parse import urlparse

from argparse import ArgumentParser

import time

from datetime import datetime

import os

from urllib.request import urlopen

from urllib.request import quote

from bs4 import BeautifulSoup

####

# Arguments

####

p = ArgumentParser()

p.add_argument("--host", default="hub.xpub.nl")

p.add_argument("--path", default="/warnet/", help="nb: should end with /")

p.add_argument("--category", "-c", nargs="*", default=[["Tactics"]], action="append", help="category to query")

p.add_argument("--download", "-d", default="htmlpages", help="What you want to download: htmlpages; images; wikipages")

args = p.parse_args()

#print args

#########

# defs

#########

def mwsite(host, path): #returns wiki site object

site = Site(('http',host), path)

return site

def mw_cats(site, categories): #returns pages member of categories

last_names = None

for cat in categories:

for ci, cname in enumerate(cat):

cat = site.Categories[cname]

pages = list(cat.members())

if last_names == None:

results = pages

else:

results = [p for p in pages if p.name in last_names]

last_names = set([p.name for p in pages])

results = list(results)

return [p.name for p in results]

def mw_page(site, page):

page = site.Pages[page]

return page

def mw_page_imgsurl(site, page, imgdir):

#all the imgs in a page

#returns dict {"imagename":{'img_url':..., 'timestamp':... }}

imgs = page.images() #images in page

imgs = list(imgs)

imgs_dict = {}

if len(imgs) > 0:

for img in imgs:

if 'url' in img.imageinfo.keys(): #prevent empty images

imagename = img.name

imagename = (imagename.capitalize()).replace(' ','_')

imageinfo = img.imageinfo

imageurl = imageinfo['url']

timestamp = imageinfo['timestamp'] ## is .isoformat()

imgs_dict[imagename]={'img_url': imageurl,

'img_timestamp': timestamp }

os.system('wget {} -N --directory-prefix {}'.format(imageurl, imgdir))

# wget - preserves timestamps of file

# -N the decision as to download a newer copy of a file depends on the local and remote timestamp and size of the file.

return imgs_dict

else:

return None

def write_plain_file(content, filename):

edited = open(filename, 'w') #write

edited.write(content.decode('utf-8'))

edited.close()

# ------- Action: Import HTML -------

site = mwsite(args.host, args.path)

html_dir="htmls"

img_dir="{}/imgs".format(html_dir)

memberpages=mw_cats(site, args.category)

print (memberpages)

memberpages

for pagename in memberpages:

if args.download == 'htmlpages':

url = u"http://hub.xpub.nl/warnet/index.php?action=render&title="+quote(pagename)

response = urlopen(url)

print ('Download html source from', pagename)

htmlsrc = response.read()

pagename = (pagename.replace(" ", "_"))

write_plain_file(htmlsrc, "{}/{}.html".format(html_dir, pagename.replace('/','_')) )

print ('Download images from', pagename)

page = mw_page(site, pagename)

page_imgs = mw_page_imgsurl(site, page, img_dir)

time.sleep(3)

# ------- Action: Modify HTML using BeautifulSoup -------

path = './htmls/'

for filename in os.listdir(path):

if filename.endswith(".html"):

print (filename)

htmlsrc = open('./htmls/{0}'.format(filename), "rb")

soup = BeautifulSoup(htmlsrc, "html5lib")

for el in soup.find_all(class_='thumb tright'):

el.decompose()

counter = 0

for el in soup.find_all('p'):

counter= counter+1

if counter > 0:

el.decompose()

for el in soup.find_all(class_='button'):

print(el)

for el in soup.find_all('h3'):

el.decompose()

for el in soup.find_all('table'):

el.decompose()

for el in soup.find_all('hr'):

el.decompose()

for el in soup.find_all(attrs={"title": "Tactics"}):

el.decompose()

new = soup.prettify()

filename = filename.replace('.html', '')

with open("./htmls/edits/{0}.html".format(filename), 'w') as edited:

edited.write(new)

htmlsrc.close()

Code: Make PDFs for cards using Weasyprint

from weasyprint import HTML, CSS

import os

## Make pdfs ##

path = './htmls/edits/'

for filename in os.listdir(path):

title = filename.replace('.html', '')

if filename.endswith(".html"):

HTML('./htmls/edits/{0}'.format(filename)).write_pdf('./print/odd/{0}.pdf'.format(title),

stylesheets=[CSS('./resources/style.pdf.css')])

Wiki development: 16 May