Twitter Bot

Andre Castro moved page PythonTwitter to Twitter Bot.


==''Twitter Bot Encyclopedia''' by Elizaveta Pritychenko.== | |||

http://leeeeza.com/twitter-bot-encyclopedia.html | |||

http://p-dpa.net/work/twitter-bot-encyclopedia/ | |||

[[File:twitter_bot_001.jpg|400px]] | |||

== MrRetweet @proust2000 by Jonas Lund== | |||

https://twitter.com/proust2000 | |||

[[User:Jonas_Lund/MrRetweet]] | |||

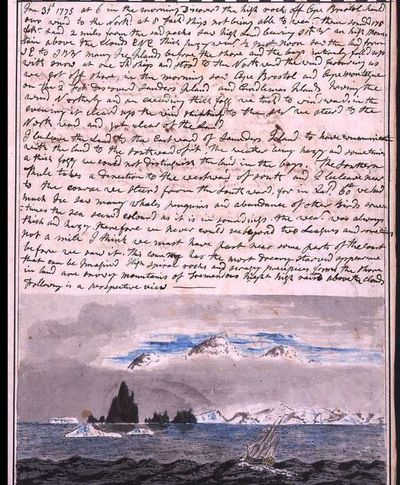

=='' Captain Tweet'' by Inge Hoonte== | |||

[https://twitter.com/tweet_captain @tweet_captain] | |||

[[User:Inge_Hoonte/captain_tweet|Project wiki page]] | |||

Follows the Captain's James Cook logs, posting its day to day entries. | |||

<blockquote>Welcome on board of the Weymouth. This is the captain's log for the journey between Portsmouth, England, and Algoa Bay, South Africa. Departure July 10, 1819.</blockquote> | |||

[[File:Capt_cook.jpg|400px]] | |||

=Code= | |||

Lots of data is available from Twitter via a public API (so using an API key may not be required, depending on your use) | Lots of data is available from Twitter via a public API (so using an API key may not be required, depending on your use) | ||

Latest revision as of 12:16, 4 February 2019

Twitter Bot Encyclopedia by Elizaveta Pritychenko

http://leeeeza.com/twitter-bot-encyclopedia.html

http://p-dpa.net/work/twitter-bot-encyclopedia/

MrRetweet @proust2000 by Jonas Lund

https://twitter.com/proust2000

Project wiki page: User:Jonas_Lund/MrRetweet

Captain Tweet by Inge Hoonte

https://twitter.com/tweet_captain (@tweet_captain); project wiki page: User:Inge_Hoonte/captain_tweet

Follows Captain James Cook's logs, posting its day-to-day entries.

Welcome on board of the Weymouth. This is the captain's log for the journey between Portsmouth, England, and Algoa Bay, South Africa. Departure July 10, 1819.
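The page does not say how Captain Tweet decides what to post each day. One hypothetical scheme, sketched below under that assumption: treat the bot's first day as the voyage's departure date (July 10, 1819, from the welcome message) and, on each later day, post the entry dated the same number of days into the voyage. The function `entry_for_today` and the shape of the `log` dictionary are illustrative only, not taken from the project.

```python
from datetime import date

# Departure date from the bot's welcome message
DEPARTURE = date(1819, 7, 10)


def entry_for_today(log, bot_start, today):
    """Return the log entry to post on `today`, or None if there is none.

    Hypothetical scheme: the bot's first day (bot_start) corresponds to the
    departure date, its second day to the day after, and so on.
    `log` maps voyage dates (datetime.date) to entry text.
    """
    elapsed = today - bot_start        # a datetime.timedelta
    voyage_day = DEPARTURE + elapsed   # the matching date in 1819
    return log.get(voyage_day)


# Placeholder entries, not the real log text
log = {
    date(1819, 7, 10): "(log text for 10 July 1819)",
    date(1819, 7, 11): "(log text for 11 July 1819)",
}
print(entry_for_today(log, date(2024, 1, 1), date(2024, 1, 2)))
# → (log text for 11 July 1819)
```

Posting on matching calendar days instead (each July 10, July 11, ...) would be an equally plausible design; the sketch only shows the day-offset variant.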

Code

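Lots of data is available from Twitter through its API. The examples below were written for the original API v1 and the search.twitter.com feeds, which could be used without an API key for some requests; those endpoints have since been retired, and the current API expects authenticated requests.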
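Where authentication is required, the credentials travel with each HTTP request. A minimal sketch, assuming the v2 user-lookup endpoint and app-only "Bearer" token authentication (check both against Twitter's current documentation); `build_user_lookup` and the token string are hypothetical placeholders. It only builds the request, so nothing is sent until it is passed to `urlopen`.

```python
from urllib.parse import quote
from urllib.request import Request

# Assumed base URL of the current (v2) API; verify against the official docs
API_BASE = "https://api.twitter.com/2"


def build_user_lookup(username, bearer_token):
    """Build, but do not send, an authenticated user-lookup request."""
    url = f"{API_BASE}/users/by/username/{quote(username)}"
    request = Request(url)
    # App-only authentication: the token goes in the Authorization header
    request.add_header("Authorization", f"Bearer {bearer_token}")
    return request


req = build_user_lookup("TRACKGent", "YOUR-BEARER-TOKEN")  # placeholder token
print(req.full_url)
# → https://api.twitter.com/2/users/by/username/TRACKGent
```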

Print the latest tweets from a particular user

See https://dev.twitter.com/docs/api/1/get/statuses/user_timeline

import json
from urllib.request import urlopen

screen_name = "TRACKGent"
url = "http://api.twitter.com/1/statuses/user_timeline.json?screen_name=" + screen_name

# the response is a JSON list of tweet objects
data = json.load(urlopen(url))
print(len(data), "tweets")

# inspect which fields a tweet carries
tweet = data[0]
print(tweet.keys())

for tweet in data:
    print(tweet['text'])
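The parsing half of the example can be tried without network access. A minimal sketch, assuming the response body is a JSON list of tweet objects that each carry a `text` field, as the code above expects; `tweet_texts` and the sample body are made up for illustration.

```python
import json


def tweet_texts(raw):
    """Return the 'text' field of every tweet in a user_timeline-style body.

    Assumes the body is a JSON list of tweet objects; anything else
    (for example an error object) raises ValueError.
    """
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError(f"expected a list of tweets, got: {data!r}")
    return [tweet["text"] for tweet in data]


# Invented, minimal response body standing in for the real API output
sample = '[{"id": 1, "text": "first tweet"}, {"id": 2, "text": "second tweet"}]'
for text in tweet_texts(sample):
    print(text)
```

Checking for a list up front gives a clearer error than the `KeyError` or `TypeError` the original loop would raise if the server answered with something else.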

Other examples

You can also use feedparser:

import feedparser

url = "http://search.twitter.com/search.atom?q=feel"
feed = feedparser.parse(url)

# each Atom entry's title holds the text of one tweet
for e in feed.entries:
    print(e.title)

import feedparser

url = "http://search.twitter.com/search.atom?q=feel"
feed = feedparser.parse(url)

# print every word of every tweet on its own line
for e in feed.entries:
    for word in e.title.split():
        print(word)
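feedparser does the Atom parsing for you. To see what the word-splitting example works on, or to run it without network access or feedparser installed, here is a sketch that swaps in the standard library's ElementTree (not feedparser's API) and tallies the words with `collections.Counter`. The two-entry sample feed is invented, and `title_word_counts` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from collections import Counter

ATOM = "{http://www.w3.org/2005/Atom}"  # Atom XML namespace

# Invented two-entry feed standing in for a search.atom response
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry><title>I feel good</title></entry>
  <entry><title>I feel tired</title></entry>
</feed>"""


def title_word_counts(atom_xml):
    """Count how often each (lowercased) word appears across entry titles."""
    root = ET.fromstring(atom_xml)
    counts = Counter()
    for entry in root.iter(f"{ATOM}entry"):
        title = entry.findtext(f"{ATOM}title", default="")
        counts.update(word.lower() for word in title.split())
    return counts


print(title_word_counts(SAMPLE_FEED).most_common(2))
```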

An older example using JSON:

import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

def openURL(url, user_agent="Mozilla/5.0 (X11; U; Linux x86_64; fr; rv:1.9.1.5) Gecko/20091109 Ubuntu/9.10 (karmic) Firefox/3.5.5"):
    """
    Returns: tuple with (file, realurl)
    Sets the User-Agent header and follows redirects if necessary;
    realurl may differ from url in the case of a redirect.
    """
    request = Request(url)
    if user_agent:
        request.add_header("User-Agent", user_agent)
    pagefile = urlopen(request)
    realurl = pagefile.geturl()
    return (pagefile, realurl)

def getJSON(url):
    (f, realurl) = openURL(url)
    return json.loads(f.read())

TWITTER_SEARCH = "http://search.twitter.com/search.json"
data = getJSON(TWITTER_SEARCH + "?" + urlencode({'q': 'Rotterdam'}))

for r in data['results']:
    print(r['text'])
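The `urlencode` call in this example matters more than it looks: concatenating a raw value onto the URL, as the first example does with `screen_name`, breaks as soon as the value contains a space or a `#` (everything after `#` is treated as a URL fragment and never reaches the server). A small sketch reusing the `TWITTER_SEARCH` address; `search_url` is a hypothetical helper that only builds the string.

```python
from urllib.parse import urlencode

TWITTER_SEARCH = "http://search.twitter.com/search.json"


def search_url(query):
    """Build a search URL; urlencode escapes '#' as %23 and spaces as '+'."""
    return TWITTER_SEARCH + "?" + urlencode({"q": query})


print(search_url("Rotterdam"))
# → http://search.twitter.com/search.json?q=Rotterdam
print(search_url("#Rotterdam haven"))
# → http://search.twitter.com/search.json?q=%23Rotterdam+haven
```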