PythonLabZalan

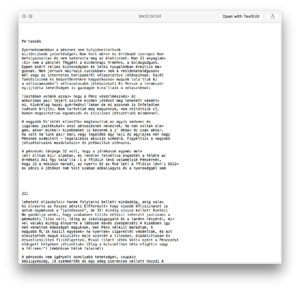

NLTK Analysis outcome (File:Screen Shot 2018-03-24 at 16.12.30.png)


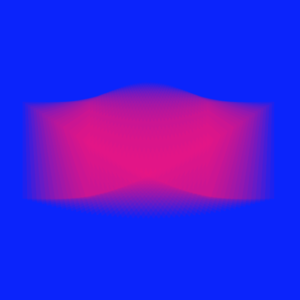


DrawBot experiment 1 (File:Screen Shot 2018-03-24 at 17.09.02.png)
DrawBot experiment 2 (File:Screen Shot 2018-03-06 at 23.45.23.png)
DrawBot experiment 3 (File:Screen Shot 2018-03-06 at 13.22.35.png)
DrawBot experiment generated through random data input from Python3 (File:Screen Shot 2018-03-07 at 17.30.58.png)


Latest revision as of 11:08, 25 March 2018

Terminal

First, I looked into basic command-line functions (File:Commands terminal.pdf) and how they work, to build a solid base for Python3.

Optical character recognition + Tesseract

Second, I experimented in Terminal with converting PDF or JPG files to .txt files using Tesseract and ImageMagick (convert).

Tesseract (with languages you will be using)

- Mac

brew install tesseract --all-languages

ImageMagick

- Mac

brew install imagemagick

How to use it?

tesseract <input image> <output base name> (Tesseract appends the .txt extension itself)

tesseract namefile.png text2

Splitting a PDF file into single pages with pdftk burst

pdftk yourfile.pdf burst

Or use ImageMagick, which writes one numbered TIFF file per page:

convert -density 300 Typewriter\ Art\ -\ Riddell\ Alan.pdf Typewriter-%03d.tiff

Choose the page you want to convert.

Convert the PDF page to a bitmap with ImageMagick, using some options that optimize it for OCR:

convert -density 300 page.pdf -depth 8 -strip -background white -alpha off output.tiff

- -density 300: resolution of 300 DPI. Lower resolutions will create errors :)
- -depth 8: 8 bits per color channel (256 levels; for a greyscale image, 256 shades of grey)
- -strip: removes metadata such as profiles and comments
- -background white -alpha off: removes the alpha channel (opacity) and makes the background white
- output.tiff: earlier versions of Tesseract only accepted TIFF images, but current versions accept more bitmap formats
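The steps above can be chained from Python3. The sketch below is a minimal example. It assumes that `convert` (ImageMagick) and `tesseract` are on the PATH. The functions `ocr_commands` and `run_ocr`, and the file names `pg_0001.pdf` and `page1`, are hypothetical. By default it only prints the commands (`dry_run=True`), so you can inspect them without the tools installed.

```python
import subprocess
from pathlib import Path


def ocr_commands(pdf_page, out_base):
    """Build the ImageMagick and Tesseract commands for one single-page PDF.

    out_base is the output name without extension; Tesseract appends .txt.
    """
    tiff = str(Path(out_base).with_suffix(".tiff"))
    convert_cmd = [
        "convert", "-density", "300", str(pdf_page),
        "-depth", "8", "-strip", "-background", "white", "-alpha", "off",
        tiff,
    ]
    tesseract_cmd = ["tesseract", tiff, str(out_base)]
    return [convert_cmd, tesseract_cmd]


def run_ocr(pdf_page, out_base, dry_run=True):
    """Print the commands and, unless dry_run is set, run them in order."""
    commands = ocr_commands(pdf_page, out_base)
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises if a step fails
    return commands


if __name__ == "__main__":
    run_ocr("pg_0001.pdf", "page1")  # hypothetical page from pdftk burst
```

Passing the commands as lists, rather than one shell string, means file names containing spaces (like the Typewriter Art PDF) need no escaping.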

Python3

To understand how NLTK works, I spent an intensive week learning Python as a beginner, from 26.02. to 04.03.2018.

My tutorial script notes are in File:Terminal tutorials.pdf.

Natural Language Toolkit

For the NLTK text analysis I used one of the pages of my reader. The first NLTK analysis in Python3 (script below) extracts different data from the textual input (see the NLTK Analysis outcome screenshot), such as:

NLTK Analysis

- Number of words
- Number of lowercase letters
- Number of uppercase letters
- 10 most common characters
- 10 most common words
- Words longer than 15 characters
- Number of verbs
- Number of nouns
- Number of adverbs
- Number of pronouns
- Number of adjectives
- Number of lines
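Most of the counts listed above come from part-of-speech tags. The sketch below counts them with a single lookup table instead of one loop per category. The `TAG_GROUPS` table and the `count_tag_groups` function are hypothetical. The tag names come from the Penn Treebank tagset, which `nltk.pos_tag` produces by default. The hand-written `tagged` list stands in for real `nltk.pos_tag` output, so the sketch runs without NLTK.

```python
from collections import Counter

# Penn Treebank tags grouped into the categories of the list above
TAG_GROUPS = {
    "Verbs": {"VB", "VBD", "VBG", "VBN", "VBP", "VBZ"},
    "Nouns": {"NN", "NNS", "NNP", "NNPS"},
    "Adverbs": {"RB", "RBR", "RBS", "WRB"},
    "Pronouns": {"PRP", "PRP$"},
    "Adjectives": {"JJ", "JJR", "JJS"},
}


def count_tag_groups(tagged_words):
    """Count (word, tag) pairs per category and collect the words of each one."""
    counts = Counter()
    words_by_group = {name: [] for name in TAG_GROUPS}
    for word, tag in tagged_words:
        for name, tags in TAG_GROUPS.items():
            if tag in tags:
                counts[name] += 1
                words_by_group[name].append(word)
    return counts, words_by_group


# stand-in for nltk.pos_tag(word_tokenize(text))
tagged = [("The", "DT"), ("old", "JJ"), ("typewriter", "NN"),
          ("quickly", "RB"), ("printed", "VBD"), ("it", "PRP")]
counts, groups = count_tag_groups(tagged)
for name in TAG_GROUPS:
    print(f"{counts[name]} {name}")
```

Adding a category then means adding one line to the table, which avoids copy-paste slips where a loop keeps the tag set of the previous category.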

NLTK Analysis Script

import nltk
from nltk import word_tokenize, FreqDist
from nltk.tokenize import sent_tokenize
from collections import Counter
# word_tokenize and pos_tag need NLTK data packages, installed once with nltk.download()

with open("readertest.txt") as text:
    content = text.read()
# importing and reading the content
#print(content)

# split the string into words at each space character
# (words separated only by a line break stay joined; content.split() splits on any whitespace)
words = content.split(" ")
# sorted list of the distinct words
splitting_statistic = sorted(set(words))
#print(splitting_statistic)

# number of words
wordsamount_statistic = f'{len(words)} words'
print(wordsamount_statistic)

# counts the lowercase and uppercase letters in the text
lowercase_count = 0
uppercase_count = 0
for ch in content:
    if ch.islower():
        lowercase_count += 1
    elif ch.isupper():
        uppercase_count += 1
print("The number of lowercase characters is:")
print(lowercase_count)
print("The number of uppercase characters is:")
print(uppercase_count)

# FreqDist over a string counts its characters
fdist = FreqDist(content)
print("10 most common characters:")
print(fdist.most_common(10))

# FreqDist over the word list counts the words
fdist = FreqDist(words)
print("10 most common words:")
print(fdist.most_common(10))

#new_list = fdist.most_common()
#print(new_list)
#for word, _ in new_list:  # _ ignores the count in each (word, count) pair
#    print(' ', word)
# prints the most common words one per line

def vowel_or_consonants(c):
    """Classify one character as 'Vowel', 'Consonant' or 'Neither'."""
    if not c.isalpha():
        return 'Neither'
    vowels = 'aeiou'
    if c.lower() in vowels:
        return 'Vowel'
    else:
        return 'Consonant'

#for c in content:
#    print(c, vowel_or_consonants(c))

#print(sent_tokenize(content))
# splitting the text into sentences

#for word in words:
#    print(word)
# control structure: each word on a separate line

#fdist = FreqDist(words)
#print("hapaxes:")
#print(fdist.hapaxes())
# words that occur only once, the so-called hapaxes

# the words of the text that are longer than 15 characters
V = set(words)
long_words = [w for w in V if len(w) > 15]
print("Words of the text longer than 15 characters:")
print(sorted(long_words))

# the content is tokenized with NLTK
tokenized_content = word_tokenize(content)
# each token gets a Penn Treebank part-of-speech tag (verb, noun, adverb, pronoun, adjective, ...)
statistic3 = nltk.pos_tag(tokenized_content)
#print(statistic3)

verbscounter = 0
verblist = []
for word, tag in statistic3:
    if tag in {'VB', 'VBD', 'VBG', 'VBN', 'VBP', 'VBZ'}:
        verbscounter = verbscounter + 1
        verblist.append(word)
verb_statistic = f'{verbscounter} Verbs'
# shows the number of verbs in the text, and the verbs themselves as a list
print(verb_statistic)
print(verblist)

nouncounter = 0
nounlist = []
for word, tag in statistic3:
    if tag in {'NNP', 'NNS', 'NN', 'NNPS'}:
        nouncounter = nouncounter + 1
        nounlist.append(word)
nouns_statistic = f'{nouncounter} Nouns'
# shows the number of nouns in the text
print(nouns_statistic)
print(nounlist)

# creating a dictionary from the two lists: each verb is paired with the noun
# at the same position; zip stops at the end of the shorter list
verblist2 = verblist
nounlist2 = nounlist
verblist_and_nounlists = dict(zip(verblist2, nounlist2))
print(verblist_and_nounlists)
print(len(verblist))

characters = [words]
#print(words)

# disabled: groups the words of a string by their length
'''from itertools import groupby
def n_letter_dictionary(string):
    result = {}
    for key, group in groupby(sorted(string.split(), key=len), len):
        result[key] = list(group)
    return result
print(n_letter_dictionary(content))'''

adverbscounter = 0
adverblist = []
for word, tag in statistic3:
    if tag in {'RB', 'RBR', 'RBS', 'WRB'}:
        adverbscounter = adverbscounter + 1
        adverblist.append(word)
adverb_statistic = f'{adverbscounter} Adverbs'
# shows the number of adverbs in the text
print(adverb_statistic)
print(adverblist)

pronounscounter = 0
pronounslist = []
for word, tag in statistic3:
    if tag in {'PRP', 'PRP$'}:
        pronounscounter = pronounscounter + 1
        pronounslist.append(word)
pronoun_statistic = f'{pronounscounter} Pronouns'
# shows the number of pronouns in the text
print(pronoun_statistic)
print(pronounslist)

adjectivscounter = 0
adjectivslist = []
for word, tag in statistic3:
    if tag in {'JJ', 'JJR', 'JJS'}:
        adjectivscounter = adjectivscounter + 1
        adjectivslist.append(word)
adjectiv_statistic = f'{adjectivscounter} Adjectives'
# shows the number of adjectives in the text
print(adjectiv_statistic)
print(adjectivslist)

# each block below counts one Penn Treebank tag
coordinating_conjunction_counter = 0
for word, tag in statistic3:
    if tag == 'CC':
        coordinating_conjunction_counter += 1
coordinating_conjunction_statistic = f'{coordinating_conjunction_counter} Coordinating conjunctions'
print(coordinating_conjunction_statistic)

cardinal_number = 0
for word, tag in statistic3:
    if tag == 'CD':
        cardinal_number += 1
cardinal_number_statistic = f'{cardinal_number} Cardinal numbers'
print(cardinal_number_statistic)

determiner_counter = 0
for word, tag in statistic3:
    if tag == 'DT':
        determiner_counter += 1
determiner_statistic = f'{determiner_counter} Determiners'
print(determiner_statistic)

existential_there_counter = 0
for word, tag in statistic3:
    if tag == 'EX':
        existential_there_counter += 1
existential_there_statistic = f'{existential_there_counter} Existential there'
print(existential_there_statistic)

foreign_words_counter = 0
for word, tag in statistic3:
    if tag == 'FW':
        foreign_words_counter += 1
foreign_words_statistic = f'{foreign_words_counter} Foreign words'
print(foreign_words_statistic)

preposition_or_subordinating_conjunctionlist = []
preposition_or_subordinating_conjunction_counter = 0
for word, tag in statistic3:
    if tag == 'IN':
        preposition_or_subordinating_conjunction_counter += 1
        preposition_or_subordinating_conjunctionlist.append(word)
preposition_or_subordinating_conjunction_statistic = f'{preposition_or_subordinating_conjunction_counter} Prepositions or subordinating conjunctions'
print(preposition_or_subordinating_conjunction_statistic)
print(preposition_or_subordinating_conjunctionlist)

list_item_marker_counter = 0
for word, tag in statistic3:
    if tag == 'LS':
        list_item_marker_counter += 1
list_item_marker_statistic = f'{list_item_marker_counter} List item markers'
print(list_item_marker_statistic)

modals_counter = 0
for word, tag in statistic3:
    if tag == 'MD':
        modals_counter += 1
modals_statistic = f'{modals_counter} Modals'
print(modals_statistic)

Predeterminer_counter = 0
for word, tag in statistic3:
    if tag == 'PDT':
        Predeterminer_counter += 1
Predeterminer_statistic = f'{Predeterminer_counter} Predeterminers'
print(Predeterminer_statistic)

Possessive_ending_counter = 0
for word, tag in statistic3:
    if tag == 'POS':
        Possessive_ending_counter += 1
Possessive_ending_statistic = f'{Possessive_ending_counter} Possessive endings'
print(Possessive_ending_statistic)

particle_counter = 0
for word, tag in statistic3:
    if tag == 'RP':
        particle_counter += 1
particle_statistic = f'{particle_counter} Particles'
print(particle_statistic)

symbol_counter = 0
for word, tag in statistic3:
    if tag == 'SYM':
        symbol_counter += 1
symbol_statistic = f'{symbol_counter} Symbols'
print(symbol_statistic)

to_counter = 0
for word, tag in statistic3:
    if tag == 'TO':
        to_counter += 1
to_statistic = f'{to_counter} to'
print(to_statistic)

interjection_counter = 0
for word, tag in statistic3:
    if tag == 'UH':
        interjection_counter += 1
interjection_statistic = f'{interjection_counter} Interjections'
print(interjection_statistic)

Wh_determiner_counter = 0
for word, tag in statistic3:
    if tag == 'WDT':
        Wh_determiner_counter += 1
Wh_determiner_statistic = f'{Wh_determiner_counter} Wh determiners'
print(Wh_determiner_statistic)

Wh_pronoun_counter = 0
for word, tag in statistic3:
    if tag == 'WP':
        Wh_pronoun_counter += 1
Wh_pronoun_statistic = f'{Wh_pronoun_counter} Wh pronouns'
print(Wh_pronoun_statistic)

Possessive_wh_pronoun_counter = 0
for word, tag in statistic3:
    if tag == 'WP$':
        Possessive_wh_pronoun_counter += 1
Possessive_wh_pronoun_statistic = f'{Possessive_wh_pronoun_counter} Possessive wh pronouns'
print(Possessive_wh_pronoun_statistic)

# length of each word in the verb, noun, adjective and preposition lists
dic1 = [len(i) for i in verblist]
print(dic1)
dic2 = [len(i) for i in nounlist]
print(dic2)
dic3 = [len(i) for i in adjectivslist]
print(dic3)
dic4 = [len(i) for i in preposition_or_subordinating_conjunctionlist]
print(dic4)
#print([len(i) for i in verblist_and_nounlists])
#print([len(i) for i in words])

# word lengths multiplied by 100
double_numbers1 = [n * 100 for n in dic1]
print(double_numbers1)
double_numbers2 = [n * 100 for n in dic2]
print(double_numbers2)
double_numbers3 = [n * 100 for n in dic3]
print(double_numbers3)
double_numbers4 = [n * 100 for n in dic4]
print(double_numbers4)

# word lengths divided by 100
div_numbers1 = [n / 100 for n in dic1]
print(div_numbers1)
div_numbers2 = [n / 100 for n in dic2]
print(div_numbers2)
div_numbers3 = [n / 100 for n in dic3]
print(div_numbers3)
div_numbers4 = [n / 100 for n in dic4]
print(div_numbers4)

# earlier attempts, disabled by wrapping them in string literals
'''lst1 = [[double_numbers1], [double_numbers2], [double_numbers3], [double_numbers4]]
print((zip(*lst1))[0])'''

'''lst1 = [[double_numbers1], [double_numbers2], [double_numbers3], [double_numbers4]]
lst2 = []
lst2.append([x[0] for x in lst1])
print(lst2[0])'''

'''lst1 = [[double_numbers1], [double_numbers2], [double_numbers3], [double_numbers4]]
outputlist = []
for values in lst1:
    outputlist.append(values[-1])
print(outputlist)'''

# first values (index 0) and second values (index 1) of the four lists
n1 = double_numbers1
n1_a = n1[0]
print(n1_a)
n2 = double_numbers2
#print(n2[0])
n3 = double_numbers3
#print(n3[0])
n4 = double_numbers4
#print(n4[0])
n5 = double_numbers1
#print(n5[1])
n6 = double_numbers2
#print(n6[1])
n7 = double_numbers3
#print(n7[1])
n8 = double_numbers4
#print(n8[1])
print((n1[0], n2[0]), (n3[0], n4[0]), (n5[1], n6[1]), (n7[1], n8[1]))

n1a = div_numbers1
#print(n1a[0])
n2a = div_numbers2
#print(n2a[0])
n3a = div_numbers3
#print(n3a[0])
n4a = div_numbers4
#print(n4a[0])
print(n1a[0], n2a[0], n3a[0], n4a[0])

with open("Output.txt", "w") as text_file:
    text_file.write(str(n1_a))  # write() needs a string, n1_a is an int

# number of words (split() with no argument splits on any whitespace)
wordsnumber_statistic = len(content.split())
#print(wordsnumber_statistic)

# number of lines
numberoflines_statistic = len(content.splitlines())
print("Number of lines:")
print(numberoflines_statistic)

# number of characters
numberofcharacters_statistic = len(content)
print("Number of characters:")
print(numberofcharacters_statistic)

# how many times each word occurs in the text
d = {}
for word in words:
    d[word] = d.get(word, 0) + 1
#print(d)

# converting the dictionary into a list of (count, word) pairs, most frequent first
word_freq = []
for key, value in d.items():
    word_freq.append((value, key))
word_freq.sort(reverse=True)
#print(word_freq)

# counts each character in the text
lettercounter = Counter(content)
#print(lettercounter)
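The scaled word lengths at the end of the script (lengths × 100 and ÷ 100) are meant as input for the geometry. One way to hand all of them to DrawBot is to write them to JSON, in the same way as `rdata.json` in the DrawBot part. This is a minimal sketch. The `scaled_lengths` and `save_for_drawbot` functions, the `word_lengths.json` file name and its layout are hypothetical, and the small word lists stand in for `verblist`, `nounlist` and so on.

```python
import json


def scaled_lengths(words, factor=100):
    """Return the word lengths, multiplied and divided by factor."""
    lengths = [len(w) for w in words]
    return {
        "lengths": lengths,
        "multiplied": [n * factor for n in lengths],
        "divided": [n / factor for n in lengths],
    }


def save_for_drawbot(groups, path):
    """Write the scaled lengths of each word list to a JSON file."""
    data = {name: scaled_lengths(words) for name, words in groups.items()}
    with open(path, "w") as f:
        json.dump(data, f, indent=2)
    return data


# stand-in word lists; in the script these would be verblist, nounlist, ...
groups = {"verbs": ["printed", "is"], "nouns": ["typewriter"]}
data = save_for_drawbot(groups, "word_lengths.json")
print(data["verbs"]["multiplied"])  # [700, 200]
```

In DrawBot, `json.load` would then return a dictionary keyed by word class, instead of the single number written to Output.txt.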

Another data analysis script, with the stop words removed

from nltk.corpus import stopwords  # needs the corpus from nltk.download('stopwords')
from nltk.tokenize import word_tokenize
from nltk import FreqDist
import re

with open("output.txt") as text:
    content = text.read()
#print(content)

words = content.split(" ")
splitting_statistic = sorted(set(words))
#print(splitting_statistic)

example_sent = words
stop_words = set(stopwords.words('english'))
#word_tokens = word_tokenize(example_sent)
# keep only the words that are not English stop words
filtered_sentence = [w for w in example_sent if w not in stop_words]
#print(example_sent)
#print(filtered_sentence)

fdist = FreqDist(words)
#print(fdist.most_common(100))

mylist = words  # init the list
print('Your input file has year dates =')
for l in mylist:
    # the last four-digit number starting with 1, 2 or 3 in the word
    match = re.match(r'.*([1-3][0-9]{3})', l)
    if match is not None:
        # then it found a match!
        print(match.group(1))

with open('output.txt', 'r') as infile:  # open the input file
    s = infile.read()

# the program counts the characters in the text file
num_chars = len(s)
# the program counts the lines in the text file
num_lines = s.count('\n')
# split() with no arguments splits on any whitespace
words = s.split()

d = {}
for w in words:
    if w in d:
        d[w] += 1
    else:
        d[w] = 1
num_words = sum(d.values())
# (count, word) pairs, most frequent first
lst = [(d[w], w) for w in d]
lst.sort(reverse=True)

# the program assumes the user has downloaded the NLTK stopwords corpus
from nltk.corpus import stopwords  # import the stop word list
stop_words = set(stopwords.words('english'))  # a set makes the searching faster
# lst holds (count, word) pairs, so the word itself is tested against the stop words
print([(count, word) for count, word in lst if word not in stop_words])

# the program prints the results
print('Your input file has characters = ' + str(num_chars))
print('Your input file has lines = ' + str(num_lines))
print('Your input file has the following words = ' + str(num_words))
print('\nThe 100 most frequent words are\n')
for i, (count, word) in enumerate(lst[:100], start=1):
    print('%2s. %4s %s' % (i, count, word))

from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

example_sent = content
stop_words = set(stopwords.words('english'))
word_tokens = word_tokenize(example_sent)
# the NLTK stop word list is lowercase, so tokens are compared in lowercase
filtered_sentence = [w for w in word_tokens if w.lower() not in stop_words]
#print(word_tokens)
#print(filtered_sentence)

fdist = FreqDist(filtered_sentence)
#print(fdist.most_common(100))

import nltk
# the named-entity chunker needs its NLTK data packages, installed with nltk.download()

with open('output.txt', 'r') as f:
    sample = f.read()

sentences = nltk.sent_tokenize(sample)
tokenized_sentences = [nltk.word_tokenize(sentence) for sentence in sentences]
tagged_sentences = [nltk.pos_tag(sentence) for sentence in tokenized_sentences]
# binary=True labels every named entity simply as 'NE'
chunked_sentences = nltk.ne_chunk_sents(tagged_sentences, binary=True)


def extract_entity_names(t):
    """Recursively collect the words of every subtree labelled 'NE'."""
    entity_names = []
    if hasattr(t, 'label') and t.label:
        if t.label() == 'NE':
            entity_names.append(' '.join([child[0] for child in t]))
        else:
            for child in t:
                entity_names.extend(extract_entity_names(child))
    return entity_names


entity_names = []
for tree in chunked_sentences:
    # print results per sentence
    # print(extract_entity_names(tree))
    entity_names.extend(extract_entity_names(tree))

# print all entity names
print(entity_names)
# print unique entity names
#print(set(entity_names))

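# length of each entity name, scaled up and down by 100
dic1 = [len(i) for i in entity_names]
print(dic1)
double_numbers1 = [n * 100 for n in dic1]
print(double_numbers1)
div_numbers1 = [n / 100 for n in dic1]
print(div_numbers1)

# group the values into triples; values that do not fill a last triple are dropped
triples = list(zip(*[iter(double_numbers1)] * 3))
print(triples)
#group = lambda t, n: zip(*[t[i::n] for i in range(n)])
#group([1, 2, 3, 4], 2)
#print(group)

# split the values into chunks of n; the last chunk may be shorter
n = 3  # chunk size (assumed value)
chunks = [double_numbers1[i:i + n] for i in range(0, len(double_numbers1), n)]
print(chunks)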
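The three steps of this script (removing stop words, finding year dates, grouping the numbers) can be tried without the NLTK data. This is a minimal sketch. `STOP_WORDS` is a small hypothetical stand-in for `set(stopwords.words('english'))`. `find_years` uses `re.findall` with word boundaries, which is an alternative to the script's `re.match` pattern, not the same expression. The helper names are hypothetical.

```python
import re

# small stand-in for set(stopwords.words('english')), so the sketch runs without NLTK data
STOP_WORDS = {"the", "a", "an", "of", "in", "and", "was", "is"}


def remove_stop_words(words):
    """Drop stop words, comparing in lowercase because the NLTK list is lowercase."""
    return [w for w in words if w.lower() not in STOP_WORDS]


def find_years(text):
    """Return stand-alone four-digit numbers from 1000 to 3999."""
    return re.findall(r"\b[1-3][0-9]{3}\b", text)


def group(values, n):
    """Split values into consecutive chunks of n; the last chunk may be shorter."""
    return [values[i:i + n] for i in range(0, len(values), n)]


text = "The typewriter was invented in 1868 and the book appeared in 2005."
words = text.split()
print(remove_stop_words(words))
print(find_years(text))
print(group([500, 300, 900, 200, 700], 2))
```

The word boundaries (`\b`) keep a number such as 123456 from being reported as a year, while a year followed by punctuation, like "2005.", is still found.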

DrawBot

To generate geometric shapes from the analysis of the textual content, I needed to connect Python3 to the drawing software DrawBot, which is scripted in Python.

Rotating Shape GIF in DrawBot

Based on the tutorial by Just van Rossum

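from math import pi, sin

CANVAS = 500       # width and height of each page
SQUARESIZE = 158
NSQUARES = 50
SQUAREDIST = 6     # horizontal distance between neighbouring squares
width = NSQUARES * SQUAREDIST
NFRAMES = 50

for frame in range(NFRAMES):
    newPage(CANVAS, CANVAS)
    frameDuration(1/20)  # each frame lasts 1/20 s in the saved GIF
    fill(0, 0, 1, 1)  # blue background
    rect(0, 0, CANVAS, CANVAS)
    phase = 2 * pi * frame / NFRAMES  # angle in radians
    startAngle = 90 * sin(phase)
    # sin has a period of 2*pi, so adding 20*pi leaves endAngle (practically) equal to startAngle
    endAngle = 90 * sin(phase + 20 * pi)
    translate(CANVAS/2 - width/2, CANVAS/2)
    fill(1, 0, 0.5, 0.1)  # translucent squares
    for i in range(NSQUARES + 1):
        f = i / NSQUARES  # position along the row, from 0 to 1
        save()
        translate(i * SQUAREDIST, 0)
        scale(0.7, 1)
        rotate(startAngle + f * (endAngle - startAngle))  # angle in degrees
        rect(-SQUARESIZE/2, -SQUARESIZE/2, SQUARESIZE, SQUARESIZE)
        restore()

#saveImage("StackOfSquares7.gif")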
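The rotation in the loop above is a linear interpolation from `startAngle` (first square) to `endAngle` (last square). The sketch below is plain Python (no DrawBot needed) and computes the angles for one frame, which makes the effect of the phase offset visible. The `square_angles` function and its `end_offset` parameter are hypothetical. The 0.5*pi offset is only an illustrative alternative, not necessarily the value used in the tutorial.

```python
from math import pi, sin, isclose


def square_angles(frame, nframes=50, nsquares=50, end_offset=20 * pi):
    """Rotation angle (degrees) of every square in one frame of the animation.

    Mirrors the DrawBot loop: the angle goes linearly from start_angle
    (first square) to end_angle (last square).
    """
    phase = 2 * pi * frame / nframes
    start_angle = 90 * sin(phase)
    end_angle = 90 * sin(phase + end_offset)
    return [start_angle + (i / nsquares) * (end_angle - start_angle)
            for i in range(nsquares + 1)]


# with the offset 20*pi from the script, all squares share one angle per frame
same = square_angles(frame=10)
print(round(min(same), 3), round(max(same), 3))

# a hypothetical offset of 0.5*pi makes the angle change along the row (a twist)
twisted = square_angles(frame=10, end_offset=0.5 * pi)
print(round(twisted[0], 3), round(twisted[-1], 3))
```

Any offset that is a whole multiple of 2*pi gives the uniform rotation of the first case, because sin repeats every 2*pi.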

Geometry generated based on random data input from Python

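import json
from random import randint, random

# 100 rectangles, each stored as (x, y, width, height, red, green, blue, alpha)
data = []
for i in range(100):
    x = randint(0, 1000)  # position
    y = randint(0, 1000)
    w = randint(0, 1000)  # size
    h = randint(0, 1000)
    r = random()  # color and alpha, each between 0 and 1
    g = random()
    b = random()
    a = random()
    data.append((x, y, w, h, r, g, b, a))

# the printed JSON is the data that the DrawBot script reads from rdata.json
print(json.dumps(data, indent=2))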
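Instead of printing the JSON and copying it into rdata.json, the script can write the file itself. This is a minimal sketch. It assumes that rdata.json in the working directory is the file DrawBot reads. The `make_random_rects` function and its `seed` parameter are hypothetical additions. A fixed seed reproduces the same drawing.

```python
import json
import random


def make_random_rects(count=100, size=1000, seed=None):
    """Return count tuples (x, y, w, h, r, g, b, a) with random values."""
    rng = random.Random(seed)  # a fixed seed makes the output reproducible
    return [
        (rng.randint(0, size), rng.randint(0, size),
         rng.randint(0, size), rng.randint(0, size),
         rng.random(), rng.random(), rng.random(), rng.random())
        for _ in range(count)
    ]


data = make_random_rects(seed=1)
with open("rdata.json", "w") as f:
    json.dump(data, f, indent=2)  # tuples are written as JSON arrays
```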

Random data import with json in DrawBot

import json

with open("rdata.json") as f:
    data = json.load(f)
# print(data)

for x, y, w, h, r, g, b, a in data:
    print(x, y)
    # minimum alpha of 0.5 (assumed value) so every rectangle stays visible
    fill(r, g, b, max(a, 0.5))
    rect(x, y, w, h)
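To check the data outside DrawBot, you can test the loading step in plain Python. This is a minimal sketch. `load_rects`, `MIN_ALPHA = 0.5` and the sample file name are assumptions. The drawing calls (`fill`, `rect`) remain DrawBot-only and appear only as a comment.

```python
import json

MIN_ALPHA = 0.5  # assumed minimum opacity


def load_rects(path, min_alpha=MIN_ALPHA):
    """Read (x, y, w, h, r, g, b, a) records and raise alpha to at least min_alpha."""
    with open(path) as f:
        data = json.load(f)
    rects = []
    for record in data:
        if len(record) != 8:
            raise ValueError(f"expected 8 values, got {len(record)}: {record}")
        x, y, w, h, r, g, b, a = record
        rects.append((x, y, w, h, r, g, b, max(a, min_alpha)))
    return rects


sample = [[10, 20, 100, 50, 1.0, 0.0, 0.5, 0.1],
          [0, 0, 30, 30, 0.2, 0.4, 0.6, 0.9]]
with open("sample_rdata.json", "w") as f:
    json.dump(sample, f)

for x, y, w, h, r, g, b, a in load_rects("sample_rdata.json"):
    # in DrawBot: fill(r, g, b, a); rect(x, y, w, h)
    print(x, y, a)
```

Checking the record length up front reports a malformed file with a clear message, instead of an unpacking error halfway through the drawing.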