User:Renee Oldemonnikhof/technicalcourse2

Technical course trimester 2

First Assignment

Feed.py

```python
# Looking inside the RSS feed
import pprint

import feedparser

# Fetch and parse the feed, storing the result as "newwork"
newwork = feedparser.parse("http://feeds.feedburner.com/newwork")

# pprint.pprint(newwork)              # dump the whole parsed feed
# print(newwork.entries[4]["title"])  # inspect a single entry

print """
<html xmlns="http://www.w3.org/1999/xhtml">
<head>
<title>feedbook</title>
<link type="text/css" rel="stylesheet" media="all" href="stylesheet.css" />
</head>
<body>"""

for e in newwork.entries:
    # Escape "&" so the page stays well-formed XHTML inside the EPUB
    print "<h1>"
    print e["title"].replace("&", "&amp;").encode("utf-8")
    print "</h1>"
    print "<p>"
    print e["summary"].replace("&", "&amp;").encode("utf-8")
    print "</p>"
    print

print """
</body>
</html>"""

# To save the output as an HTML file, run in the terminal:
# python feed.py > output.html
```
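A minimal Python 3 sketch of the same page generation, using the standard library's `html.escape` instead of replacing only `&`, so `<` and `>` in a title are escaped too. It assumes each entry is a plain dict with `title` and `summary` keys (standing in for feedparser's entries), so it runs without fetching the feed; `render_feedbook` is a hypothetical name.

```python
import html


def render_feedbook(entries):
    """Build an XHTML page with one <h1>/<p> pair per feed entry.

    Assumption: entries is a list of dicts with "title" and "summary"
    keys, shaped like the items in feedparser's `entries` list.
    """
    parts = [
        '<html xmlns="http://www.w3.org/1999/xhtml">',
        "<head>",
        "<title>feedbook</title>",
        '<link type="text/css" rel="stylesheet" media="all" href="stylesheet.css" />',
        "</head>",
        "<body>",
    ]
    for entry in entries:
        # html.escape turns &, <, > and quotes into entities,
        # so a title like "Tom & Jerry" stays well-formed XHTML.
        parts.append("<h1>" + html.escape(entry["title"]) + "</h1>")
        parts.append("<p>" + html.escape(entry["summary"]) + "</p>")
    parts.append("</body>")
    parts.append("</html>")
    return "\n".join(parts)


if __name__ == "__main__":
    sample = [{"title": "Tom & Jerry", "summary": "A <cat> and a mouse"}]
    print(render_feedbook(sample))
```

If the summaries already contain HTML markup, escaping them shows the tags as literal text; in that case the markup would need sanitizing rather than escaping.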

Makebook.sh

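```sh
# Generate the XHTML content from the feed
python feed.py > feedbook/OEBPS/content.html
cd feedbook
# The mimetype file must be the first entry and stored uncompressed (-0)
zip -0Xq feedbook.epub mimetype
# Add everything else with maximum compression, skipping mimetype
zip -Xr9Dq feedbook.epub * -x mimetype
# Open the result in the Lucidor e-book reader
lucidor feedbook.epub
```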
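The two zip calls follow the EPUB packaging rule that `mimetype` must be the first file in the archive and stored without compression. A minimal sketch of the same packaging step with Python 3's standard `zipfile` module, assuming the `feedbook` directory already holds `mimetype`, `META-INF/container.xml` and the OEBPS files (not shown on this page); `make_epub` is a hypothetical helper.

```python
import os
import zipfile


def make_epub(source_dir, epub_path):
    """Zip source_dir into an EPUB: mimetype first and stored, the rest deflated."""
    skip = {os.path.abspath(epub_path)}
    with zipfile.ZipFile(epub_path, "w") as epub:
        # Same role as `zip -0Xq feedbook.epub mimetype`
        epub.write(os.path.join(source_dir, "mimetype"), "mimetype",
                   compress_type=zipfile.ZIP_STORED)
        # Same role as the recursive `zip -Xr9Dq` call
        for root, dirs, files in os.walk(source_dir):
            dirs.sort()  # walk subdirectories in a stable order
            for name in sorted(files):
                full = os.path.join(root, name)
                arcname = os.path.relpath(full, source_dir)
                if arcname == "mimetype" or os.path.abspath(full) in skip:
                    continue  # already added, or the archive being written
                epub.write(full, arcname, compress_type=zipfile.ZIP_DEFLATED)
```

Usage, from the directory that contains `feedbook`: `make_epub("feedbook", "feedbook.epub")`.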

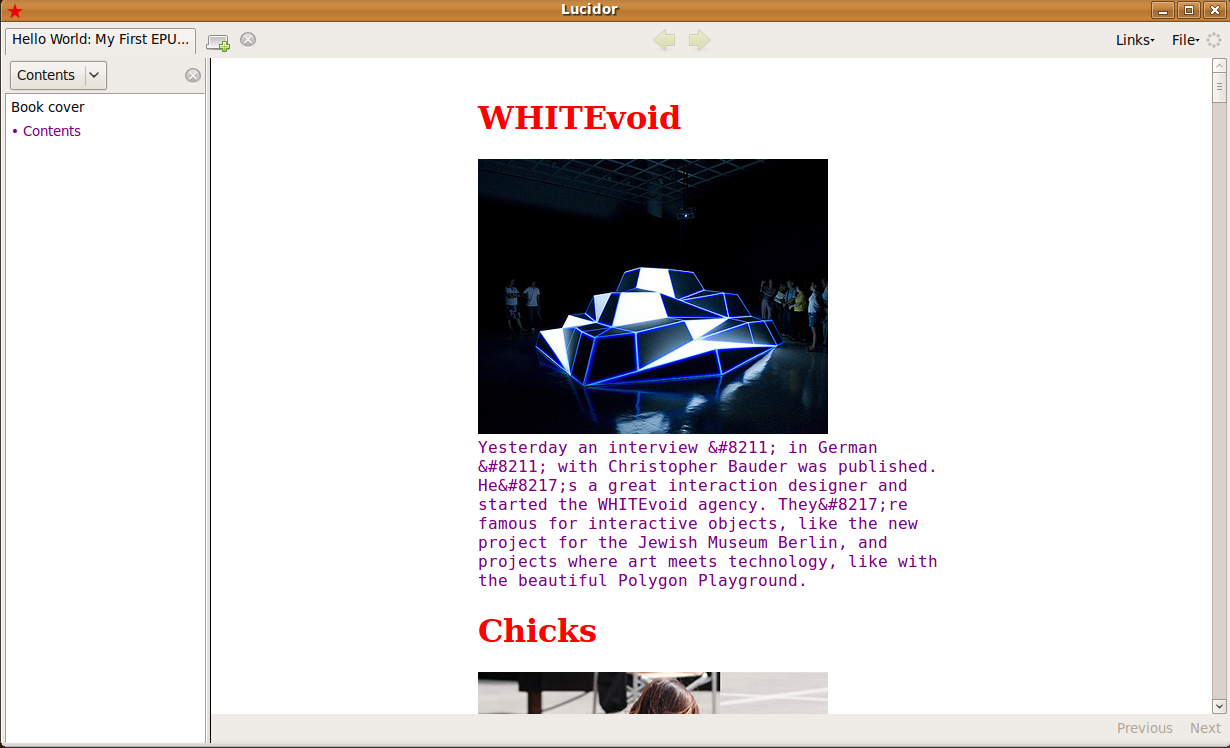

Visual representation of the EPUB

Second Assignment

a. PALINDROME HEADLINES

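```python
import feedparser

newwork = feedparser.parse("http://feeds.feedburner.com/newwork")

for entry in newwork.entries:
    text = entry["title"]
    words = text.split()
    # A second copy of the words, in reverse order
    wordstwo = text.split()
    wordstwo.reverse()
    # Drop the last word so it is not repeated in the middle
    words = words[0:-1]
    # Encode so titles with curly quotes also print when redirected to a file
    print " ".join(words + wordstwo).encode("utf-8")
```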

Result is:

MTV Rocks MTV

Beauty In Engineering In Beauty

LET’S PLAY A GAME A PLAY LET’S

Demoscene strikes back strikes Demoscene

NY- Z NY-

Mind Control Mind

Walt goes open source open goes Walt

Segmentus

Alex Varanese Alex

Limbo

Milk it! Milk

Friends of Type of Friends

This Too Shall Pass Shall Too This

Stylo

Hello World Hello

Black Box Black

MONOCHRON

New FWA site FWA New

Champion Sound Champion

Radiant Child Radiant

How Your Money Works Money Your How

Vimeo vertising Vimeo

FITC leader FITC

Joe Danger Joe

Givolution

Grindin’

Nozzman app Nozzman

I’M SORRY I’M

Choco snow Choco

Red Bull Red
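The headline trick keeps every word but the last, then appends all the words in reverse order, so the middle word is shared. Pulled out into a function (a hypothetical `mirror_headline`, in Python 3), it can be checked on plain strings without the feed:

```python
def mirror_headline(title):
    """Return the title followed by its words in reverse, sharing the last word.

    "MTV Rocks" -> "MTV Rocks MTV": all words except the last, then
    every word in reverse order, so the middle word appears only once.
    """
    words = title.split()
    return " ".join(words[:-1] + words[::-1])


for headline in ["MTV Rocks", "Beauty In Engineering", "Segmentus"]:
    print(mirror_headline(headline))
```

The first two calls reproduce "MTV Rocks MTV" and "Beauty In Engineering In Beauty" from the result list, and a one-word title such as "Segmentus" comes back unchanged.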

b. Chicken Comic

```python
import urllib2, urlparse
import html5lib


def absolutizeURL(href, base):
    """Turn a relative image URL into an absolute one, using base."""
    if not href.lower().startswith("http://"):
        return urlparse.urljoin(base, href)
    return href


def openURL(url):
    """
    Returns (page, realurl).
    Sets a browser User-Agent and follows any redirect;
    realurl may differ from url if the request was redirected.
    """
    request = urllib2.Request(url)
    user_agent = "Mozilla/5.0 (X11; U; Linux x86_64; fr; rv:1.9.1.5) Gecko/20091109 Ubuntu/9.10 (karmic) Firefox/3.5.5"
    request.add_header("User-Agent", user_agent)
    pagefile = urllib2.urlopen(request)
    realurl = pagefile.geturl()
    return (pagefile, realurl)


parser = html5lib.HTMLParser(tree=html5lib.treebuilders.getTreeBuilder("dom"))

# First image search: "bami"
(f, bamiurl) = openURL("http://images.google.com/images?hl=en&source=hp&q=bami&gbv=2&aq=f&aqi=g10&aql=&oq=")
tree1 = parser.parse(f)
f.close()
tree1.normalize()
bamiimgs = tree1.getElementsByTagName("img")
# print tags

# Second image search: "chicken"
(f, naziurl) = openURL("http://images.google.nl/images?q=chicken&oe=utf-8&rls=com.ubuntu:en-US:unofficial&client=firefox-a&um=1&ie=UTF-8&sa=N&hl=nl&tab=wi")
tree2 = parser.parse(f)
f.close()
tree2.normalize()
naziimgs = tree2.getElementsByTagName("img")
# print tags

# Alternate images from the two searches, skipping Google's navigation logo
print """<html><body>"""
for (bami, nazi) in zip(bamiimgs, naziimgs):
    var = bami.getAttribute("src")
    var = absolutizeURL(var, bamiurl)
    if var != "http://images.google.com/images/nav_logo7.png":
        print "<img src='" + var + "' height='200' />"
    var = nazi.getAttribute("src")
    var = absolutizeURL(var, naziurl)
    if var != "http://images.google.com/images/nav_logo7.png":
        print "<img src='" + var + "' height='200' />"
print """</body></html>"""
```

Result is:
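The comic script collects `<img>` sources from two result pages, makes them absolute and prints them alternately. A minimal Python 3 sketch of that pipeline using only the standard library: `html.parser` stands in for html5lib's DOM tree builder, and `urllib.parse.urljoin` for `absolutizeURL`. It works on HTML strings that have already been downloaded; the helper names and the `skip` set (standing in for the hard-coded logo URL) are hypothetical.

```python
from html import escape
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImgSrcCollector(HTMLParser):
    """Collect the src of every <img> tag, resolved against base_url."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                # urljoin keeps absolute URLs and resolves relative ones
                self.sources.append(urljoin(self.base_url, src))


def image_sources(page_html, base_url):
    """Return the absolute src of every <img> in page_html."""
    collector = ImgSrcCollector(base_url)
    collector.feed(page_html)
    collector.close()
    return collector.sources


def interleave(first, second, skip=()):
    """Alternate items from two lists (stopping at the shorter one),
    leaving out any URL in skip."""
    result = []
    for a, b in zip(first, second):
        for src in (a, b):
            if src not in skip:
                result.append(src)
    return result


def to_html(sources):
    """Wrap the image URLs in a page, each image 200 pixels high."""
    tags = "".join('<img src="%s" height="200" />' % escape(src) for src in sources)
    return "<html><body>" + tags + "</body></html>"
```

Like the original `zip` loop, `interleave` stops at the shorter of the two lists, so extra images from the longer search are dropped.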

c. Regular Expressions

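```python
import random
import re

text = open("romeo_and_juliet.txt").read()

# Two-word phrases starting with "You", "I" and "the"
You = re.findall(r"\bYou [a-z']+\b", text)
Me = re.findall(r"\bI [a-z']+\b", text)
The = re.findall(r"\bthe [a-z']+", text)

# Remove duplicate "You ..." and "I ..." phrases
You = list(set(You))
Me = list(set(Me))

# Shuffle each list, then print one line per combination
random.shuffle(You)
random.shuffle(Me)
random.shuffle(The)

for (x, y, z) in zip(You, Me, The):
    print x, z, "-", y, z
```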
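A Python 3 sketch of the same Romeo and Juliet remix as one function, assuming the play text is passed in as a string. The phrase lists are sorted before shuffling and a `random.Random(seed)` instance is used, so a given seed gives the same lines on every run (set order alone can change between runs because string hashing is randomized). `romeo_pairs` and its `seed` parameter are hypothetical.

```python
import random
import re


def romeo_pairs(text, seed=None):
    """Pair random "You ..." and "I ..." phrases with random "the ..." phrases.

    Mirrors the assignment: unique "You <word>" and "I <word>" matches,
    all "the <word>" matches, each list shuffled, then zipped together.
    """
    rng = random.Random(seed)  # separate generator so a seed makes runs repeatable
    you = sorted(set(re.findall(r"\bYou [a-z']+\b", text)))
    me = sorted(set(re.findall(r"\bI [a-z']+\b", text)))
    the = re.findall(r"\bthe [a-z']+", text)
    for phrases in (you, me, the):
        rng.shuffle(phrases)
    # Same layout as the original print: "x z - y z"
    return [f"{x} {z} - {y} {z}" for x, y, z in zip(you, me, the)]
```

Usage: `for line in romeo_pairs(open("romeo_and_juliet.txt").read()): print(line)`.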