User:Lieven Van Speybroeck/Disoriented thoughts/detournement

Start

The initial idea at the start of this trimester was to create an 'empty' webpage that would, each time it is refreshed, generate its content and layout from elements scraped from other sites on the web. This would result in endless unexpected combinations of text, imagery and layout, through which new (possibly interesting or completely uninteresting) contexts and interrelations would be established: a self-generating found-footage collage of the internet, so to speak.

The 'limitless' character of this idea called for a more confined starting point that could then be expanded if the results were satisfactory and it turned out to be technically possible. Somehow, the search for and disclosure of 'hidden' images on the web became the kickoff.

I quickly found out that scraping images based on their HTML markup and CSS properties was more complicated than I had first anticipated. I think these were the necessary steps:

- Go to a (randomly picked) webpage (or a link within a previously visited page)

- look for embedded, inline or external CSS properties

- look for classes or IDs that have a display: none property

- if none are found: go back to step 1

- if found: remember the CSS class or ID

- go through the HTML page and look for elements with that class/ID

- if the element is not an image and doesn't contain any: go back to step 1

- if the element is an image or contains any: save the image

- go back to step 1
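The steps above could be sketched roughly as follows, in Python 3 with only the standard library. This is a simplified, hypothetical version, not the method actually used: it only reads CSS from embedded `<style>` blocks (external stylesheets and inline `style` attributes are not checked), it only understands simple `.class { ... }` and `#id { ... }` rules, and it takes the page as a string instead of fetching a random URL. All names are made up for the example.

```python
import re
from html.parser import HTMLParser

# Tags that never get a closing tag, so they must not go on the open-element stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


def hidden_selectors(html):
    """Return the '.class' / '#id' names that an embedded <style> block sets
    to display: none. Rough regex: only the last .class or #id token right
    before a '{' is treated as the selector."""
    css = " ".join(re.findall(r"<style[^>]*>(.*?)</style>", html, re.S | re.I))
    hidden = set()
    for selector, body in re.findall(r"([.#][\w-]+)\s*\{([^}]*)\}", css):
        if re.search(r"display\s*:\s*none", body, re.I):
            hidden.add(selector)
    return hidden


class HiddenImageFinder(HTMLParser):
    """Collect the src of every <img> that is hidden itself or sits inside
    a hidden element."""

    def __init__(self, hidden):
        super().__init__()
        self.hidden = hidden
        self.stack = []    # (tag, is_hidden) for every open element
        self.images = []

    def _matches(self, attrs):
        attrs = dict(attrs)
        names = {"." + c for c in (attrs.get("class") or "").split()}
        if attrs.get("id"):
            names.add("#" + attrs["id"])
        return bool(names & self.hidden)

    def handle_starttag(self, tag, attrs):
        # hidden if this element matches, or if its parent is already hidden
        hidden = self._matches(attrs) or (bool(self.stack) and self.stack[-1][1])
        src = dict(attrs).get("src")
        if tag == "img" and hidden and src:
            self.images.append(src)
        if tag not in VOID_TAGS:
            self.stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # pop back to the matching open tag; ignore stray end tags
        if any(t == tag for t, _ in self.stack):
            while self.stack.pop()[0] != tag:
                pass


def find_hidden_images(html):
    hidden = hidden_selectors(html)
    if not hidden:          # nothing hidden: move on to the next page
        return []
    finder = HiddenImageFinder(hidden)
    finder.feed(html)
    return finder.images


page = """<html><head><style>
.teaser { display: none; }
#promo{color:red;display:none}
.visible { display: block; }
</style></head><body>
<div class="teaser"><p><img src="a.jpg"></p></div>
<img id="promo" src="b.png"/>
<div class="visible"><img src="c.jpg"></div>
</body></html>"""

print(find_hidden_images(page))  # ['a.jpg', 'b.png']
```

Going back to step 1 would then just mean calling `find_hidden_images` on the next page's HTML. Fetching external stylesheets and resolving real CSS selectors and specificity are what would make a full version considerably harder.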

Although this might look simple (and I reckon it's probably not thát hard), I lacked the scraping skills to get it done. I simply didn't know how or where to start. I searched online for possibilities – the main JavaScript library of Firebug seemed to contain some parts that were useful for HTML and CSS processing – but I got stuck pretty fast. I needed more scraping support...

Changing routes: API

During one of Michael's prototyping sessions, I signed up for the "raw" scraping group (top left corner), since that sounded like my way to go. This CGI script, which scrapes the (visible) images of two news sites and shows them side by side, was the result of an afternoon of tweaking, tuning and following Mr. Murtaugh's tips:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import cgitb; cgitb.enable()   # show Python errors in the browser
import urllib2, urlparse, html5lib
from lxml.cssselect import CSSSelector

print 'Content-type: text/html; charset=utf-8'
# the header has to be separated from the content by a blank line
print
print '<div style="text-align:center">'

USER_AGENT = "Mozilla/5.0 (X11; U; Linux x86_64; fr; rv:1.9.1.5) Gecko/20091109 Ubuntu/9.10 (karmic) Firefox/3.5.5"
parser = html5lib.HTMLParser(tree=html5lib.treebuilders.getTreeBuilder("lxml"), namespaceHTMLElements=False)

def scrape_images(url):
    """Return the absolute URLs of all <img src="..."> elements on a page."""
    request = urllib2.Request(url)
    request.add_header("User-Agent", USER_AGENT)
    f = urllib2.urlopen(request)
    page = parser.parse(f)
    return [urlparse.urljoin(f.geturl(), img.attrib['src'])
            for img in CSSSelector('img[src]')(page)]

nieuwsblad = scrape_images('http://www.nieuwsblad.be')
gentenaar = scrape_images('http://www.degentenaar.be')

# show the images of both papers in pairs: blue border = Nieuwsblad, red = De Gentenaar
for n, g in zip(nieuwsblad, gentenaar):
    print '<img style="border:solid 16px blue" src="' + n + '" />'
    print '<img style="border:solid 16px red" src="' + g + '" /><br/>'

print '</div>'
```

I had new hopes of getting the hidden variant up and running, but again, I seemed to hit a wall on the technical side. As I noticed in the workshops (and from Michael's advice), APIs offer very powerful ways to scrape content that require much less technical knowledge. I wanted to give one a try to get a better understanding of how scraping works, and to see if I could use this experience to actually accomplish my initial idea. Staying in the realm of images, I chose to look into the Flickr API.
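Several of the Flickr scripts further down talk to the API by gluing strings into a REST URL by hand. A slightly safer way to build the same kind of URL, sketched here in Python 3, is to let `urllib.parse.urlencode` take care of the escaping. The endpoint and the `format=json` / `nojsoncallback=1` parameters are the ones those scripts use (Flickr now serves the API over `https`); `flickr_rest_url` is a hypothetical helper name and the key is a placeholder.

```python
from urllib.parse import urlencode

REST_ENDPOINT = "http://api.flickr.com/services/rest/"


def flickr_rest_url(method, api_key, **params):
    """Build a Flickr REST call URL that asks for plain JSON (no JSONP callback)."""
    query = {"method": method, "api_key": api_key,
             "format": "json", "nojsoncallback": 1}
    query.update(params)
    return REST_ENDPOINT + "?" + urlencode(query)


url = flickr_rest_url("flickr.photos.comments.getList", "YOUR_API_KEY",
                      photo_id="1234567890")
print(url)
```

Fetching it would then be a `urllib.request.urlopen(url)` followed by `json.load`. Values containing spaces or non-ASCII characters are percent-encoded automatically, which hand-built strings do not guarantee.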

Time and an awful lot of junk

At the same time, I was working on the (modest) development of an alternative time system for a project at Werkplaats Typografie: Speeltijd. It's basically a clock that runs 12/7 times as fast as normal time, resulting in 12-day weeks. Since I knew I'd probably need JavaScript for my scraper (Michael mentioned jQuery as one of the tools I'd probably have to look at), I thought it might be a nice introduction to JavaScript and jQuery. All in all, this went pretty well, and I liked the JavaScript way of coding! The concept of time also seemed like an interesting thing to keep in mind for my thematic project.
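The arithmetic behind such a clock can be sketched in a few lines (in Python 3 here, not the JavaScript Speeltijd was built in). The sketch assumes Speeltijd keeps ordinary 24-hour days and 60-minute hours and simply runs faster, so that one real week of 7 days holds exactly 12 Speeltijd days; the starting moment `EPOCH` is a made-up value.

```python
from datetime import datetime, timedelta

EPOCH = datetime(2010, 1, 4)   # hypothetical moment where both clocks read day 1, 00:00:00


def speeltijd(now):
    """Return (week, day, hours, minutes, seconds) in Speeltijd for a moment
    after EPOCH. Speeltijd seconds pass 12/7 times as fast as real ones."""
    real = int((now - EPOCH).total_seconds())    # whole real seconds elapsed
    elapsed = real * 12 // 7                      # whole Speeltijd seconds elapsed
    days, rest = divmod(elapsed, 24 * 3600)
    week, day = divmod(days, 12)                  # 12-day weeks
    hours, rest = divmod(rest, 3600)
    minutes, seconds = divmod(rest, 60)
    return week + 1, day + 1, hours, minutes, seconds


# one real week after the epoch, exactly one 12-day Speeltijd week has passed
print(speeltijd(EPOCH + timedelta(days=7)))    # (2, 1, 0, 0, 0)
print(speeltijd(EPOCH + timedelta(hours=12)))  # (1, 1, 20, 34, 17)
```

Integer arithmetic (`* 12 // 7`) is used instead of multiplying by the float `12 / 7`, so whole weeks come out exact rather than a rounding error short.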

So, I started experimenting with the Flickr API and managed to get a scraper running:

```python
import flickrapi, urllib2, os

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"
api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)

start = '2010-01-01'
stop = '2010-01-02'
i = 1        # running number, used as file name
pg = 1       # current result page
ext = '.jpg'
folder = 'sky'

# what are you searching for?
tagSearch = 'sky scraper'

def search(page):
    # same query for every page
    return flickr.photos_search(content_type='1', min_taken_date=start, max_taken_date=stop,
                                tags=tagSearch, sort="date-taken-asc", tag_mode="all", page=page)

perPage = search(pg)
# amount of pages
pgs = int(perPage[0].attrib['pages'])
print '=== total pages: ' + str(pgs) + ' ==='

if not os.path.exists(folder):
    os.makedirs(folder)

while pg <= pgs:
    for photo in perPage[0]:     # one page = 100 photos by default
        try:
            p = flickr.photos_getInfo(photo_id=photo.attrib['id'])
            # http://farm{farm-id}.static.flickr.com/{server-id}/{id}_{secret}.jpg
            href = ('http://farm' + p[0].attrib['farm'] + '.static.flickr.com/' +
                    p[0].attrib['server'] + '/' + p[0].attrib['id'] + '_' +
                    p[0].attrib['secret'] + '.jpg')
            request = urllib2.Request(href, None, {'User-Agent': useragent})
            remotefile = urllib2.urlopen(request)
            print 'Downloading: photo ' + str(i) + ' - pg ' + str(pg) + '\n(' + href + ')'
            # save the image
            localfile = open(folder + '/' + str(i) + ext, 'wb')
            localfile.write(remotefile.read())
            localfile.close()
            i += 1
        except Exception, theError:
            # skip this photo and keep going if something goes wrong
            print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
    print '--- page ' + str(pg) + ' processed ---'
    pg += 1
    if pg <= pgs:
        perPage = search(pg)
```
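The page-walking logic in this script boils down to a simple pattern: request page 1, read the total number of pages from the response, then keep requesting the next page until that number is reached. A generic Python 3 sketch of that pattern, where `fetch_page` is a hypothetical stand-in for a call such as `flickr.photos_search(..., page=n)` and a fake fetcher replaces the network:

```python
def walk_pages(fetch_page):
    """Yield every item from a paged API, stopping after the last page.

    fetch_page(n) must return (items_on_page_n, total_pages)."""
    page = 1
    while True:
        items, total_pages = fetch_page(page)
        for item in items:
            yield item
        if page >= total_pages:
            break          # the last page is done: stop asking for more
        page += 1


# fake API: 7 photos served in pages of 3
PHOTOS = ["photo%d" % i for i in range(1, 8)]

def fake_fetch(n, per_page=3):
    pages = -(-len(PHOTOS) // per_page)    # ceiling division
    return PHOTOS[(n - 1) * per_page:n * per_page], pages

print(list(walk_pages(fake_fetch)))
```

A `while True` loop whose only guard is an `if` on the page number never ends once the last page is done; the explicit `break` is what stops it. An empty result (zero pages) also ends the loop after the first request.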

Due to a certain fixation on time (Speeltijd was haunting me), I looked for ways to create an "image" clock based on the taken_date and geo information of the photos I scraped. The results gave me a nice overview of the amount of junk that gets uploaded every minute and of how inaccurate the taken_date field is. It quickly became clear to me that this route was a dead end.

Astounded by the vast number of pictures that get added to Flickr each day, I became interested in somehow reducing that mass to a certain 'essence'. Using the Python Imaging Library (PIL), I added a piece to my code that reduces each image I scraped (based on tags this time) to a single 1x1 pixel. That pixel holds (approximately) the average color of the whole image.

```python
from PIL import Image
import flickrapi, urllib2, os

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"
api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)

start = '2010-01-01'
stop = '2010-01-02'
i = 1        # running number, used as file name
pg = 1       # current result page
width = 1    # size of the reduced image
height = 1
ext = '.jpg'
folder = 'sky'
folderEdit = 'sky_edit'

# what are you searching for?
tagSearch = 'sky scraper'

def search(page):
    # same query for every page
    return flickr.photos_search(content_type='1', min_taken_date=start, max_taken_date=stop,
                                tags=tagSearch, sort="date-taken-asc", tag_mode="all", page=page)

perPage = search(pg)
# amount of pages
pgs = int(perPage[0].attrib['pages'])
print '=== total pages: ' + str(pgs) + ' ==='

for d in (folder, folderEdit):
    if not os.path.exists(d):
        os.makedirs(d)

while pg <= pgs:
    for photo in perPage[0]:     # one page = 100 photos by default
        try:
            p = flickr.photos_getInfo(photo_id=photo.attrib['id'])
            # http://farm{farm-id}.static.flickr.com/{server-id}/{id}_{secret}.jpg
            href = ('http://farm' + p[0].attrib['farm'] + '.static.flickr.com/' +
                    p[0].attrib['server'] + '/' + p[0].attrib['id'] + '_' +
                    p[0].attrib['secret'] + '.jpg')
            request = urllib2.Request(href, None, {'User-Agent': useragent})
            remotefile = urllib2.urlopen(request)
            print 'Downloading: photo ' + str(i) + ' - pg ' + str(pg) + '\n(' + href + ')'
            # save the original image
            localfile = open(folder + '/' + str(i) + ext, 'wb')
            localfile.write(remotefile.read())
            localfile.close()
            # open the image and resize it to 1x1 px
            # (Image.ANTIALIAS is called Image.LANCZOS in recent Pillow versions)
            im = Image.open(folder + '/' + str(i) + ext)
            im1 = im.resize((width, height), Image.ANTIALIAS)
            im1.save(folderEdit + '/' + str(i) + ext)
            i += 1
        except Exception, theError:
            # skip this photo and keep going if something goes wrong
            print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
    print '--- page ' + str(pg) + ' processed ---'
    pg += 1
    if pg <= pgs:
        perPage = search(pg)
```
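The resize step in the script above lets the resampling filter blend all pixels into one, which gives a weighted average that is close to, but not necessarily identical to, the plain mean color. If the exact mean is wanted, it can be computed directly. A minimal Python 3 sketch working on a list of `(r, g, b)` tuples; with Pillow installed, such a list could come from `Image.open(path).convert("RGB").getdata()`, and Pillow's `ImageStat.Stat(im).mean` gives the same per-band averages as floats. The sample pixels are made up.

```python
def mean_color(pixels):
    """Average (r, g, b) of an iterable of RGB tuples, rounded to ints."""
    total_r = total_g = total_b = count = 0
    for r, g, b in pixels:
        total_r += r
        total_g += g
        total_b += b
        count += 1
    if count == 0:
        raise ValueError("no pixels")
    return (round(total_r / count), round(total_g / count), round(total_b / count))


# a 2x2 "image": two sky-blue pixels, one white, one dark grey
pixels = [(135, 206, 235), (135, 206, 235), (255, 255, 255), (40, 40, 40)]
print(mean_color(pixels))  # (141, 177, 191)
```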

This time, the results revealed people's love for tags: lots of them, the more the merrier, whether or not they really apply to the image. The idea was to use these pixels to create an image that captured the essence of a huge collection, and perhaps to distinguish patterns depending on time, place or tag. Although this was definitely possible, a mere (and probably not very successful) data visualization struck me as insufficient and uninteresting. I had also clearly drifted away from my initial idea. I felt pretty lost at sea (accompanied by Inge, who was apparently looking for sea monsters).
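Turning a collection of those 1x1 pixels back into a single image mostly means deciding where each pixel goes. A hypothetical Python 3 sketch that lays the colors out row by row (for instance in download order, which in these scripts follows the date taken) and pads the last row with a background color; with Pillow, the flattened rows could then be written out with `Image.new("RGB", (width, height))` and `putdata`.

```python
def to_grid(colors, width, background=(0, 0, 0)):
    """Arrange a flat list of RGB colors into rows of `width` pixels,
    padding the last row with `background`."""
    rows = [list(colors[i:i + width]) for i in range(0, len(colors), width)]
    if rows and len(rows[-1]) < width:
        rows[-1] += [background] * (width - len(rows[-1]))
    return rows


colors = [(255, 0, 0), (0, 255, 0), (0, 0, 255), (255, 255, 255), (128, 128, 128)]
for row in to_grid(colors, 2):
    print(row)
```

Sorting `colors` by another key first (tag, place, hour of the day) is where patterns might or might not start to show.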

Crawling back

In an attempt to get back to (or at least closer to) my initial idea, I started looking for possible routes related to collage techniques and found footage. Somehow, the words crawling and scraping struck me as something very physical, as if you broke something, got down on the floor, looked for the scattered bits and pieces and scraped them back together. From this perspective, I thought it might be nice to look at things that get cut up and spread (over the Internet or by other means), and at the ways in which these pieces get 'glued' back together, sampled and remixed into new forms. With the concept of time still in the back of my head, I stumbled upon Christian Marclay's The Clock, an artwork that combines clips from thousands of films into a 24-hour video collage that also functions as a working clock:

{{#ev:youtube|Y8svkK7d7sY|500}}

The reuse of existing text and imagery in new artworks brought me to A User's Guide to Détournement by Guy Debord and Gil J Wolman. While I was reading it, the idea of a self-generating webpage that creates new combinations out of found and decontextualized elements kept coming back to me.

Any elements, no matter where they are taken from, can be used to make new combinations. The discoveries of modern poetry regarding the analogical structure of images demonstrate that when two objects are brought together, no matter how far apart their original contexts may be, a relationship is always formed. (Debord & Wolman, 1956)

Since this text was written in a pre-digital era, I wondered what its implications are when you apply it to a world in which digital images and text are omnipresent, particularly in light of what Debord and Wolman call minor détournement:

Minor détournement is the détournement of an element which has no importance in itself and which thus draws all its meaning from the new context in which it has been placed. (Debord & Wolman, 1956)

This made me think of my 1px Flickr images. Ultimately, pixels are the most neutral elements a digital image can be reduced to: they draw their meaning from the context in which they have been placed (their relation to and combination with other pixels, and their position in a grid). In Détournement as Negation and Prelude, Debord (and the Situationist International in general) writes that the reuse of the “detournable bloc” as material for other ensembles expresses the search for a vaster construction, a new genre of creation at a higher level (Situationist International, 1959).

Decision time

I'm currently still figuring out which direction to take this in. The web-détournement page still seems like a valuable option, but it has proven to be, for me at least, a hard nut to crack. I have also been experimenting (although it's still very green) with the Python Imaging Library to extract pixel data from images, with the option of using this data to recompose other images. More specifically, I was thinking of ways to use the pixels of frames from different movies to recompose the frames of another. A kind of digital meta-détournement (awful term, I know).
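One simple way to 'recompose' a frame out of another frame's pixels is to rebuild every target pixel with the closest-colored pixel available in the source. This is only one possible technique (a pixel-level photomosaic), not necessarily the one tried here. The Python 3 sketch below works on flat lists of RGB tuples with made-up values and compares colors by squared distance in RGB space, which is crude but simple.

```python
def closest(color, palette):
    """Palette entry with the smallest squared RGB distance to `color`."""
    return min(palette, key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)))


def recompose(target, source):
    """Rebuild `target` pixel by pixel using only colors that occur in `source`."""
    palette = list(set(source))    # distinct source colors
    return [closest(pixel, palette) for pixel in target]


source = [(250, 250, 250), (10, 10, 10), (200, 30, 30)]              # pixels of one frame
target = [(255, 255, 255), (0, 0, 0), (180, 60, 40), (90, 90, 90)]   # pixels of another
print(recompose(target, source))
```

Here a source pixel may be reused any number of times; using every source pixel exactly once would turn this into an assignment problem. For full-size frames, the pixel-by-palette comparison gets slow, so reducing the palette first would be the obvious next step.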

Update

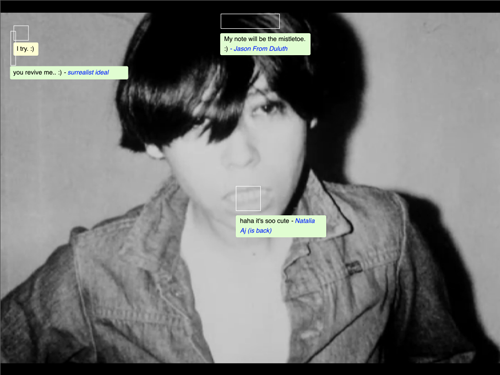

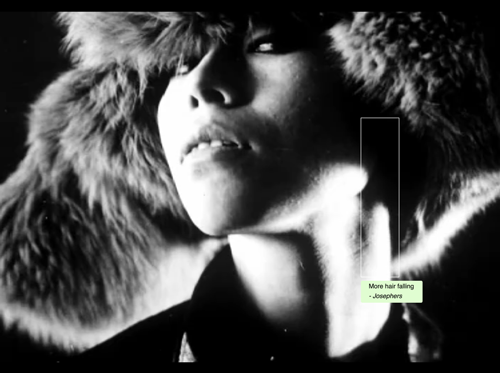

As I read more and more about the SI, making decisions seemed to become harder and harder. I eventually ended up trying out all kinds of things, none of which produced satisfying results or showed interesting conceptual potential. My focus shifted from working with the images and their pixel data to working with all the information that surrounds them (comments, descriptions, EXIF data, ...) instead of the images themselves. I wanted to recreate Debord's movie Society of the Spectacle in a way that, to me, seemed more relevant to today's notion of the spectacle: a social relationship between people that is mediated by media that are, to a large extent, produced by the audience itself. The focus is still very much on 'the image' here, but maybe not in as 'scopic' a way as Debord pictured it. To do this, I wanted to use the parts of Flickr that are produced by the viewers: notes and comments. Using the original movie as a base, I had several ideas:

- putting notes on top of the movie, getting rid of the image and rebuilding the movie purely with notes and comments (looking at the characteristics of the scenes)

- using Debord's soundtrack as a basis to search for images

- blurring movie frames based on the number of comments and notes

- masking areas of the movie based on the dimensions and positions of notes on Flickr images (found by searching for the movie's soundtrack)

- posting movie stills from Society of the Spectacle on Flickr to get them commented on and annotated, and then recomposing the movie using only the comments and notes

- ...

Some snippets of code:

notes and their positions

import flickrapi, random, urllib2, time, os, sys, json

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"

api_key = '109fc258301afdfa9ad746118b0c1f0f'

flickr = flickrapi.FlickrAPI(api_key)

q = 0

pg = 1

n=0

c=0

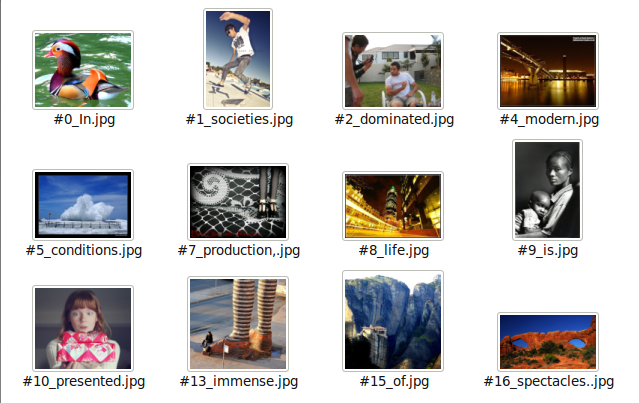

textSearch = 'societies, dominated, conditions, production, life, accumulation, spectacles'

total = flickr.photos_search(content_type='1',tags=textSearch,sort='relevance')

perPage = flickr.photos_search(content_type='1',tags=textSearch,sort='relevance',page=pg)

pgs = total[0].attrib['pages']

print '=== total pages: '+str(pgs)+' ==='

while True:

try:

if pg <= int(pgs):

for photo in perPage[0]:

if q < 100:

p = flickr.photos_getInfo(photo_id=photo.attrib['id'])

# NOTES REQUEST

nUrl = "http://api.flickr.com/services/rest/?method=flickr.photos.getInfo&photo_id="+p[0].attrib['id']+"&format=json&nojsoncallback=1&api_key=" + api_key

nQry = json.load(urllib2.urlopen(nUrl))

# COMMENTS REQUEST

cUrl = "http://api.flickr.com/services/rest/?method=flickr.photos.comments.getList&photo_id="+p[0].attrib['id']+"&format=json&nojsoncallback=1&api_key=" + api_key

cQry = json.load(urllib2.urlopen(cUrl))

# NOTES PROCESSING

notes = nQry['photo']['notes']

title = nQry['photo']['title']['_content']

time = "".join(str(n) for n in nQry['photo']['dates']['taken']).split(" ")[1]

for k,v in notes.iteritems():

if not v:

# print "======================================================\n[ title ] : "+title+"\n[ date ] : "+time+"\n======================================================"

print 'NO NOTES'

else:

print "======================================================\n[ title ] : "+title+"\n[ date ] : "+time+"\n======================================================"

for note in v:

n+=1

note_id = note['id']

content = note['_content']

author = note['author']

authorname = note['authorname']

width = note['w']

height = note['h']

top = note['x']

left = note['y']

print "[ note ] : #"+note_id+"\ncontent : "+content+"\nauthor : "+author+"\nauthorname : "+authorname+"\nwidth : "+width+"\nheight : "+height+"\ntop : "+top+"\nleft : "+left+"\n______________________________________________________\n++++++++++++++++++++++++++++++++++++++++++++++++++++++\n"

# COMMENTS PROCESSING

comments = cQry['comments']['comment']

for comment in comments:

c+=1

commentContent = comment['_content']

print "COMMENT #"+str(c)+"\n*************************************************************\n"+commentContent+"\n*************************************************************"

print "TOTAL NOTES: "+str(n)+"\nTOTAL COMMENTS: "+str(c)+"\n\n"

c=0

n=0

print "picture "+str(q)+" (id="+p[0].attrib['id']+") processed"

q += 1

q = 0

print '--- page '+str(pg)+' processed ---\n--- starting page '+str(pg+1)+'---'

# go to next page

pg += 1

perPage = flickr.photos_search(content_type='1',tags=textSearch,sort='relevance',page=pg)

except Exception, theError:

print '>>>>> Error >>>>>\n'+str(theError)+'\n<<<<< Error <<<<<'

sys.exc_clear()

I wanted to overlay this data on the SotS movie:

The notes would pop up and disappear as the movie plays and the frames change. The search would be based on the descriptions of the stills/scenes.
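Placing a note on a movie frame mainly means rescaling its box. Flickr returns each note with `x`, `y` (left and top) and `w`, `h` in pixels, relative to a reference display size of the photo. Flickr's documentation describes this as the 500px version, but treat `ref_width` as an assumption to check. A Python 3 sketch with a made-up note, which also assumes the frame has the same aspect ratio as the photo:

```python
def note_box(note, frame_width, ref_width=500):
    """Scale a Flickr-style note box (x, y, w, h) to a frame `frame_width` pixels wide.

    Returns (left, top, width, height) as ints. Assumes the note coordinates
    refer to a `ref_width`-pixel-wide version of the photo and that the frame
    keeps the photo's aspect ratio."""
    scale = frame_width / ref_width
    return tuple(round(int(note[k]) * scale) for k in ("x", "y", "w", "h"))


note = {"x": "50", "y": "120", "w": "200", "h": "60", "_content": "look at this"}
print(note_box(note, 1000))  # (100, 240, 400, 120)
```

Showing and hiding the notes as the frames change would then need, per scene, a list of (start time, end time, box, text) entries.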

notes, comments, favorites and image sizes

Calculating a 'productivity level' for each image, in order to rebuild images whose visibility depends on the number of comments, favorites, notes, ...

```python
import flickrapi, urllib2, json

# WHAT ARE YOU SEARCHING FOR?
textSearch = 'spectacle'

# API-SPECIFIC VARIABLES
api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)
rest = 'http://api.flickr.com/services/rest/?format=json&nojsoncallback=1&api_key=' + api_key

def search(page):
    return flickr.photos_search(content_type='1', tags=textSearch, sort='relevance', page=page)

def rest_call(method, photo_id):
    # plain-JSON REST call about one photo
    return json.load(urllib2.urlopen(rest + '&method=flickr.photos.' + method + '&photo_id=' + photo_id))

pg = 1
perPage = search(pg)
pgs = int(perPage[0].attrib['pages'])
print '=== total pages: ' + str(pgs) + ' ==='

while pg <= pgs:
    for photo in perPage[0]:
        try:
            photo_id = photo.attrib['id']

            # SCRAPE DIMENSIONS + CALCULATE PXs (size of the 'Medium' version)
            px = 0
            for size in rest_call('getSizes', photo_id)['sizes']['size']:
                if size['label'] == 'Medium':
                    px = int(size['width']) * int(size['height'])

            # SCRAPE NOTES (note['_content'], ['x'], ['y'], ['w'], ['h'] hold the details)
            info = rest_call('getInfo', photo_id)
            title = info['photo']['title']['_content']
            n = len(info['photo']['notes'].get('note', []))

            # SCRAPE COMMENTS (count only the 'comment' list, not the other fields)
            c = len(rest_call('comments.getList', photo_id)['comments'].get('comment', []))

            # SCRAPE FAVORITES (converted with int() in case the total comes back as text)
            f = int(rest_call('getFavorites', photo_id)['photo']['total'])

            # CALCULATE PRODUCTIVITY LEVEL: product of the non-zero counts, 0 if all are zero
            factors = [x for x in (n, c, f) if x]
            pr = reduce(lambda a, b: a * b, factors) if factors else 0

            # CALCULATE VISIBILITY PERCENTAGE (0 when the size is unknown)
            visibility = round(100.0 * pr / px, 5) if px else 0

            print 'TITLE: ' + title
            print 'id : ' + photo_id
            print 'pixels: ' + str(px)
            print 'notes: ' + str(n)
            print 'comments: ' + str(c)
            print 'favorites: ' + str(f)
            print 'level of production: ' + str(pr)
            print 'visibility % : ' + str(visibility)
            print '---------------------------------'
        # KEEP GOING IF SOMETHING GOES WRONG
        except Exception, theError:
            print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
    # END OF PAGE (100 items) - CHANGE PAGE
    print '--- page ' + str(pg) + ' processed ---'
    pg += 1
    if pg <= pgs:
        perPage = search(pg)
```
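The productivity level multiplies whichever of the three counts (notes, comments, favorites) are non-zero. As two small standalone functions, the rule also covers the case where only one count is non-zero and avoids dividing by zero when the image size is unknown. A Python 3 sketch; reading 'visibility' as productivity divided by the pixel count, expressed as a percentage, is an assumption based on the visibility line in the script.

```python
from functools import reduce
from operator import mul


def productivity(notes, comments, favorites):
    """Product of the non-zero counts; 0 if all three are zero."""
    factors = [x for x in (notes, comments, favorites) if x]
    return reduce(mul, factors, 1) if factors else 0


def visibility(notes, comments, favorites, pixels):
    """Productivity per pixel, as a percentage (0.0 for an unknown size)."""
    if not pixels:
        return 0.0
    return 100.0 * productivity(notes, comments, favorites) / pixels


print(productivity(3, 0, 4))           # 12
print(visibility(3, 0, 4, 500 * 375))
```

Note that skipping zero counts means an image with 5 comments and nothing else scores the same as one with 5 comments and a single note, which may or may not be the intended reading of 'productivity'.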

Applied to the movie, it would look something like this:

scraping only for 'text'

```python
import flickrapi, urllib2, os, json

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"
folder = 'dump'
processed = []   # titles seen so far

# API-SPECIFIC VARIABLES
api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)
rest = 'http://api.flickr.com/services/rest/?format=json&nojsoncallback=1&api_key=' + api_key

# the text of the movie's soundtrack: one search per word
f = open('debord', 'r')
words = f.read().split()     # split on any whitespace, including line breaks
f.close()

if not os.path.exists(folder):
    os.makedirs(folder)

listfile = open('list.txt', 'a')
for n, word in enumerate(words, 1):
    try:
        total = flickr.photos_search(content_type='1', text=word, sort='relevance')
        pgs = total[0].attrib['pages']
        print 'Searching for text: ' + word + '\nPage results: ' + str(pgs)
        listfile.write('Results for ' + word + ': ' + str(pgs) + '\n')

        photo = total[0][0]     # most relevant result
        p = flickr.photos_getInfo(photo_id=photo.attrib['id'])

        # SCRAPE NOTES (note['_content'], ['x'], ['y'], ['w'], ['h'] are available for the overlay)
        info = json.load(urllib2.urlopen(rest + '&method=flickr.photos.getInfo&photo_id=' + photo.attrib['id']))
        notes = info['photo']['notes'].get('note', [])
        title = info['photo']['title']['_content']
        processed.append(title)
        print str(processed.count(title))
        print title

        # DOWNLOAD THE IMAGE
        href = ('http://farm' + p[0].attrib['farm'] + '.static.flickr.com/' +
                p[0].attrib['server'] + '/' + p[0].attrib['id'] + '_' + p[0].attrib['secret'] + '.jpg')
        request = urllib2.Request(href, None, {'User-Agent': useragent})
        remotefile = urllib2.urlopen(request)
        print 'Downloading: photo ' + word + '_#' + str(n) + '\n(' + href + ')'
        localfile = open(folder + '/#' + str(n) + '_' + word + '.jpg', 'wb')
        localfile.write(remotefile.read())
        localfile.close()
        print '---'
    # KEEP GOING IF SOMETHING GOES WRONG
    except Exception, theError:
        print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'

listfile.close()
```
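Splitting the soundtrack text on whitespace alone still leaves punctuation attached to words, so a search term can end up as `spectacle,` instead of `spectacle`. A small Python 3 sketch of a cleaner split. Treating every run of letters (including accented ones) as a word and lowercasing it is an assumption about what makes a good search term; it also splits `Debord's` into `debord` and `s`. The sample text is made up.

```python
import re


def search_words(text):
    """Lowercased runs of letters, in order; digits and punctuation are dropped."""
    return [w.lower() for w in re.findall(r"[^\W\d_]+", text)]


print(search_words("The spectacle, life\nand images!"))
# ['the', 'spectacle', 'life', 'and', 'images']
```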

scrape images based on the soundtrack of the movie

First, make a list of how many times each image (identified by its title) has already come up as the result for a word, then use this list to avoid showing the same image multiple times throughout the text.

```python
# -*- coding: utf-8 -*-
import flickrapi, urllib2, os, json

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"
folder = 'dump2'
processed = []   # titles seen so far

# API-SPECIFIC VARIABLES
api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)
rest = 'http://api.flickr.com/services/rest/?format=json&nojsoncallback=1&api_key=' + api_key

f = open('debord', 'r')
words = f.read().split()
f.close()

# TITLE FREQUENCY COUNTER: for each word, how often its top result's title was seen before
counterfile = open('counter', 'w')     # 'w', so a re-run starts from a clean list
for n, word in enumerate(words, 1):
    print str(n) + ' ' + word
    try:
        total = flickr.photos_search(content_type='1', text=word, sort='relevance')
        photo = total[0][0]
        info = json.load(urllib2.urlopen(rest + '&method=flickr.photos.getInfo&photo_id=' + photo.attrib['id']))
        title = info['photo']['title']['_content']
        print title
        processed.append(title)
        seen = processed.count(title) - 1
        print 'appeared ' + str(seen) + ' times'
        counterfile.write(str(seen) + '\n')
        print '---'
    # KEEP GOING IF SOMETHING GOES WRONG (write 0 so the counter stays aligned with the words)
    except Exception, theError:
        print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
        counterfile.write('0\n')
counterfile.close()

# GET IMAGE INFORMATION: skip as many results as the title was seen before
f = open('counter', 'r')
offsets = f.read().split('\n')
f.close()

if not os.path.exists(folder):
    os.makedirs(folder)

for n, word in enumerate(words):
    try:
        q = int(offsets[n])
        print 'search image for the word: ' + word + ' #' + str(q)
        total = flickr.photos_search(content_type='1', text=word, sort='relevance')
        photo = total[0][q]
        p = flickr.photos_getInfo(photo_id=photo.attrib['id'])
        href = ('http://farm' + p[0].attrib['farm'] + '.static.flickr.com/' +
                p[0].attrib['server'] + '/' + p[0].attrib['id'] + '_' + p[0].attrib['secret'] + '.jpg')
        request = urllib2.Request(href, None, {'User-Agent': useragent})
        remotefile = urllib2.urlopen(request)
        print 'Downloading: photo ' + word + '_#' + str(n) + '\n(' + href + ')'
        # save the image
        localfile = open(folder + '/#' + str(n) + '_' + word + '.jpg', 'wb')
        localfile.write(remotefile.read())
        localfile.close()
        print '---'
    # KEEP GOING IF SOMETHING GOES WRONG
    except Exception, theError:
        print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
```
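The counter file encodes a rule that can also be stated directly: for each word, take the highest-ranked search result that has not been used yet. The Python 3 sketch below is a different, more direct way to get that effect than the offset counter, and it compares photo ids instead of titles, since two different photos can share a title. `results` is hypothetical, already-fetched data mapping each word to its ranked list of photo ids; in the script, such lists would come from `flickr.photos_search(text=word, sort='relevance')`.

```python
def pick_unique(words, results):
    """For each word, pick its highest-ranked photo id that was not used before.

    Returns a list of (word, photo_id); photo_id is None when every result
    for that word has already been used (or there were none)."""
    used = set()
    picks = []
    for word in words:
        choice = next((pid for pid in results.get(word, []) if pid not in used), None)
        if choice is not None:
            used.add(choice)
        picks.append((word, choice))
    return picks


words = ["the", "spectacle", "is", "the", "spectacle"]
results = {"the": ["p1", "p2", "p3"], "spectacle": ["p4", "p1"], "is": ["p1", "p5"]}
print(pick_unique(words, results))
# [('the', 'p1'), ('spectacle', 'p4'), ('is', 'p5'), ('the', 'p2'), ('spectacle', None)]
```

Fetching a longer result list per word (more than the first page) lowers the chance of running out, at the cost of more API calls.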

scrape only images that have the title 'Unique'

```python
#!/usr/bin/python
import flickrapi, urllib2, os, json

useragent = "Mozilla/5.001 (windows; U; NT4.0; en-US; rv:1.0) Gecko/25250101"
folder = 'unique'
textSearch = 'unique'
i = 0    # number of matching pictures saved
pg = 1

api_key = '109fc258301afdfa9ad746118b0c1f0f'
flickr = flickrapi.FlickrAPI(api_key)

def search(page):
    return flickr.photos_search(content_type='1', text=textSearch, sort='relevance', page=page)

page = search(pg)
pgs = int(page[0].attrib['pages'])
print '=== total pages: ' + str(pgs) + ' ==='

if not os.path.exists(folder):
    os.makedirs(folder)

while pg <= pgs:
    for photo in page[0]:
        try:
            p = flickr.photos_getInfo(photo_id=photo.attrib['id'])
            pic = ('http://farm' + p[0].attrib['farm'] + '.static.flickr.com/' +
                   p[0].attrib['server'] + '/' + p[0].attrib['id'] + '_' + p[0].attrib['secret'] + '.jpg')
            URL = ('http://api.flickr.com/services/rest/?method=flickr.photos.getInfo&photo_id=' +
                   p[0].attrib['id'] + '&format=json&nojsoncallback=1&api_key=' + api_key)
            QRY = json.load(urllib2.urlopen(URL))
            pic_title = QRY['photo']['title']['_content']
            pic_id = QRY['photo']['id']
            print pic_title
            print pic_id + '\n---'
            # keep only pictures whose whole title is 'unique', in any capitalization
            if pic_title.strip().lower() == 'unique':
                i += 1
                request = urllib2.Request(pic, None, {'User-Agent': useragent})
                remotefile = urllib2.urlopen(request)
                print 'Downloading: picture ' + str(i) + ' - pg ' + str(pg) + '\n(' + pic + ')'
                # save the image
                localfile = open(folder + '/' + str(i) + '_' + pic_id + '.jpg', 'wb')
                localfile.write(remotefile.read())
                localfile.close()
        # KEEP GOING IF SOMETHING GOES WRONG
        except Exception, theError:
            print '>>>>> Error >>>>>\n' + str(theError) + '\n<<<<< Error <<<<<'
    # END OF PAGE (100 items) - CHANGE PAGE
    print '--- page ' + str(pg) + ' processed ---'
    pg += 1
    if pg <= pgs:
        page = search(pg)
```

Research to practice conversion: a dead end

When working on this, I felt the conceptual part remained very weak, and somehow I couldn't get Society of the Spectacle out of my head. I realized it was very hard to work with this material and get something really strong out of it (perhaps because the movie is so strong in itself). The fact that I got stuck using the API of a service I have little interest in, which I had originally chosen just to 'get some scraping going' and explore scraping methods, is also a major problem. It's a very literal interpretation of 'the image', which may be fine, but it doesn't do justice to contemporary notions of 'the spectacle'. Some parts of Debord's theory of the spectacle still hold today (maybe even more than ever); others have shifted. The spectacle is still very much a social relationship between people that is mediated by images, and a rather passive one. On the other hand, the audience has gained the ability to produce as well (although this can reinforce the passive nature of relationships through all kinds of platforms). So a successful project would show these shifts, create a 'contemporary' Society of the Spectacle as a détournement of the original, and at the same time emphasize how strongly the core of Debord's idea of the 'Spectacle' still applies today. Although this research has been very helpful (both code-wise and content-wise), I struggled to distill its essence into a strong, practical project. At this stage, I feel I would have to start over with a clean slate and a different angle to get out of the conceptual dead end this research has become.